Parallel incremental FTP deploy in CI pipeline

Automated deployment is a must-have nowadays. Several tools can upload files over FTP, but none of them can both upload only the changes and do it in parallel. I combined two tools to achieve this, with examples for GitLab.

The article is also available in Czech at kutac.cz

TL;DR Everything described in the article is in the public repository, together with a ready-to-go Docker image and examples for the GitLab CI pipeline.

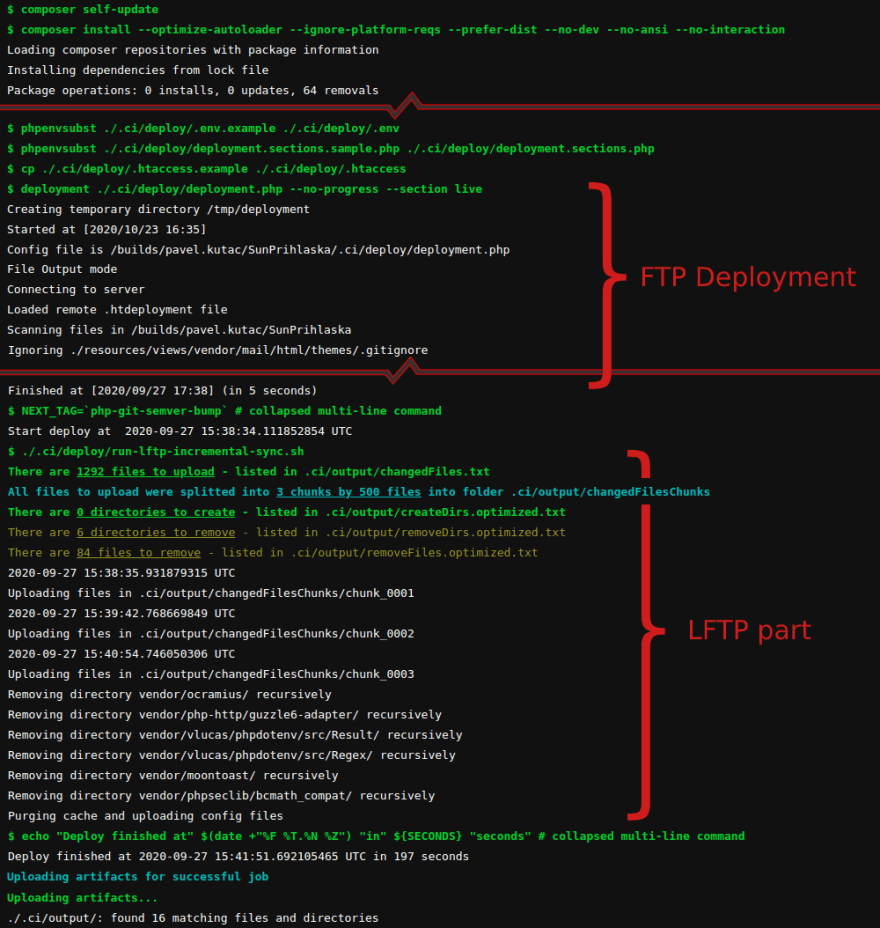

FTP Deployment + LFTP = Parallel incremental upload

Earlier, I forked and modified the FTP Deployment tool. It computes hashes of all files, compares them with the .htdeployment file saved on the server, and creates, uploads, or deletes only the files that changed. However, it cannot work in parallel.
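The hash comparison described above can be illustrated with a short Python sketch. It is not the FTP Deployment implementation: the function names are hypothetical, SHA-256 is an assumed hash choice, and the manifest is modelled as a plain path-to-hash dictionary rather than the real .htdeployment format.

```python
import hashlib
from pathlib import Path


def hash_tree(root: Path) -> dict[str, str]:
    """Map each file path (relative to root, POSIX style) to its SHA-256 hex digest."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def diff_manifests(local: dict[str, str], remote: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (to_upload, to_delete) from two path -> hash mappings."""
    # A file is uploaded when it is new or its hash differs from the stored one.
    to_upload = sorted(p for p, h in local.items() if remote.get(p) != h)
    # A file is deleted when the server manifest knows it but it no longer exists locally.
    to_delete = sorted(p for p in remote if p not in local)
    return to_upload, to_delete
```

Because only content hashes are compared, modification times play no role in deciding what to upload.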

But there is another great and popular tool called LFTP, which can run operations in parallel. It also supports a mirror command, but that cannot be used in many cases. More about that below.

In my project, I use the modified fork of FTP Deployment to track changes and prepare lists of directories and files to upload or delete. The second step consists of Bash scripts that consume these lists and execute LFTP commands.
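To make the second step more concrete, here is a rough Python sketch of turning a list of changed files into several LFTP scripts that could run side by side. It is only an illustration under stated assumptions: the project itself uses Bash scripts whose details are not shown here, the helper names are hypothetical, and the quoting helper is a simplification of LFTP's quoting rules.

```python
def lftp_quote(path: str) -> str:
    """Wrap a path in double quotes, escaping backslashes and double quotes (simplified)."""
    return '"' + path.replace("\\", "\\\\").replace('"', '\\"') + '"'


def split_round_robin(items: list[str], workers: int) -> list[list[str]]:
    """Distribute items over `workers` batches of similar size."""
    return [items[i::workers] for i in range(workers)]


def build_upload_script(paths: list[str], local_root: str, remote_root: str) -> str:
    """Render one LFTP script with a `put LOCAL -o REMOTE` line per listed path."""
    lines = [
        f"put {lftp_quote(local_root + '/' + p)} -o {lftp_quote(remote_root + '/' + p)}"
        for p in paths
    ]
    return "".join(line + "\n" for line in lines)
```

Each generated script could then be handed to its own `lftp -f` process. Remote directories have to exist before the uploads start, which is presumably why the lists contain directories as well as files.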

Thanks to the combination of both tools, my deploy pipeline runs much faster. With many changed files in the vendor folder, the deploy time dropped from 30 minutes to 5 minutes.

See the README and example files for a detailed explanation and ready-to-go config files. There is also a prepared Docker image, based on the newest PHP, which contains the modified version of FTP Deployment, LFTP, utilities to replace environment variables, and a few more tools.

Why can't lftp mirror always be used?

The mirror command of LFTP can synchronize two folders, so someone could say that FTP Deployment is superfluous. In some cases, that may really be true. However, LFTP checks only the file size and the file's modification time. If either differs between the local file and the file on the FTP server, the file is synced. This approach has several flaws:

- Some FTP servers ignore the modification time sent with the file and just set the current time. Due to this behavior, the file would be synced every time.

- If some files are created in the pipeline, their modification time can differ between runs, so they would always be considered changed. The vendor folder is a typical example.

- Both issues above can be solved with the --ignore-time flag. Then only the file size is checked when files are compared. But if a file changes without changing its size, for example when a single character is replaced, it will not be synced, which is not sufficient for production use.
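The weakness of a size-only comparison is easy to demonstrate. The following Python sketch uses hypothetical helper names and made-up file contents, with SHA-256 as an assumed hash, to compare two versions of a file that differ in a single character:

```python
import hashlib


def changed_by_size(a: bytes, b: bytes) -> bool:
    """Size-only check: reports a change only when the lengths differ."""
    return len(a) != len(b)


def changed_by_hash(a: bytes, b: bytes) -> bool:
    """Content check: reports a change whenever the SHA-256 digests differ."""
    return hashlib.sha256(a).digest() != hashlib.sha256(b).digest()


old = b"define('DEBUG', 0);"
new = b"define('DEBUG', 1);"

print(changed_by_size(old, new))  # False: both versions have the same length
print(changed_by_hash(old, new))  # True: the content differs
```

A size-only sync would skip this file, while a hash-based comparison picks up the change.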

Let me know what you think about my solution!

Original Link: https://dev.to/arxeiss/parallel-incremental-ftp-deploy-in-ci-pipeline-2511
