The Tempestuous Tale of Docker: A Sea-Change in the Realm of Computing

Have you ever faced the issue where code works in one environment but not in another? For example, what if the code works in a developer environment, but the same code fails in the testing or production environment?

Docker is a tool that helps developers build lightweight and portable software containers that simplify application development, testing, and deployment.

In a nutshell, it is a containerization tool used to automate the deployment of software in lightweight containers. These containers help applications run consistently across different environments.

Basically, Docker resolves the issue of an application working in one environment and not in another. Notably, Docker makes it easier than ever for developers to package their software so they can build once and run anywhere.

In the software development lifecycle (SDLC: Plan, Design, Development, Testing/Release, Deployment and Maintenance), Docker comes into the picture at the deployment stage.

It makes the process of application deployment very easy and efficient and resolves a lot of issues related to deploying applications.

It is the world's leading software container platform. A software application is made up of frontend components, backend workers, databases, environments, and library dependencies, and we have to ensure that all these components work across a wide range of platforms.

By analogy, in the shipping business a standard container is used to ship a variety of products such as cars, cookers, liquid gas, and oil, an idea that revolutionized the shipping industry.

Docker is a tool designed to make it easier to deploy and run applications by using containers. Containers allow a developer to package up an application with all the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

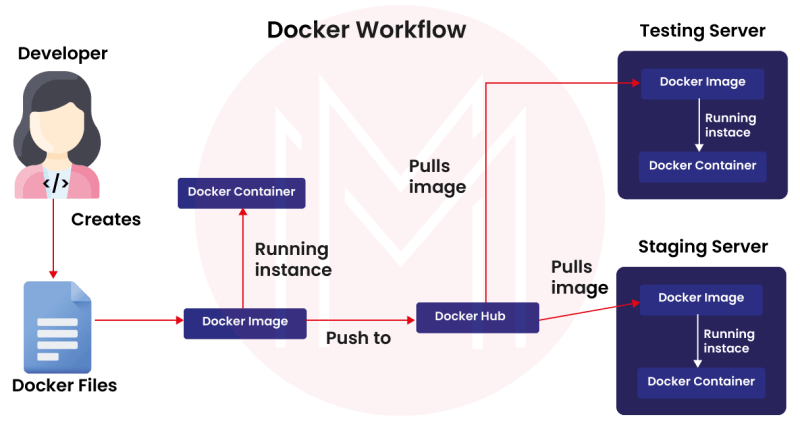

General Workflow of Docker

A developer describes all of the application's dependencies and requirements in a file called a Dockerfile.

The Dockerfile describes the steps to create a Docker image. It's like a recipe listing all the ingredients and steps needed to make your dish. The image contains all of the application's requirements and dependencies, and when you run the image, you get a Docker container.
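To make the recipe analogy concrete, here is a minimal Python sketch that writes out a Dockerfile for a hypothetical Python app. The base image `python:3.12-slim`, the files `requirements.txt` and `app.py`, and port 8000 are assumptions chosen for illustration; `FROM`, `WORKDIR`, `COPY`, `RUN`, `EXPOSE`, and `CMD` are standard Dockerfile instructions.

```python
# Minimal sketch: build the text of a Dockerfile for a hypothetical Python app.
# The base image tag, file names (requirements.txt, app.py) and port are
# illustrative assumptions, not taken from the article.


def render_dockerfile(base_image: str = "python:3.12-slim",
                      entrypoint: str = "app.py",
                      port: int = 8000) -> str:
    steps = [
        f"FROM {base_image}",                 # start from a base image with the runtime
        "WORKDIR /app",                       # working directory inside the image
        "COPY requirements.txt .",            # copy the dependency list first...
        "RUN pip install --no-cache-dir -r requirements.txt",  # ...and install it
        "COPY . .",                           # then copy the application source code
        f"EXPOSE {port}",                     # document the port the app listens on
        f'CMD ["python", "{entrypoint}"]',    # default command when a container starts
    ]
    return "\n".join(steps) + "\n"


if __name__ == "__main__":
    # Print the recipe; save the output as a file named "Dockerfile" to use it.
    print(render_dockerfile(), end="")
```

Instructions such as `RUN` and `COPY` each add a layer to the image. Copying `requirements.txt` before the rest of the source is a common design choice: when only the application code changes, Docker can reuse the cached dependency layer instead of reinstalling everything.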

In a nutshell, a Docker container is the runtime instance of a Docker image. It contains the application with all its dependencies.
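The image-to-container step can be sketched with the standard `docker build` and `docker run` commands. The Python below only assembles and prints them by default (a dry run); the image name `myapp:1.0`, the container name `myapp-container`, and port 8000 are hypothetical placeholders.

```python
import shlex
import subprocess

# Hypothetical names used for illustration; replace them with your own.
IMAGE = "myapp:1.0"
CONTAINER = "myapp-container"


def build_command(image: str, context: str = ".") -> list[str]:
    # `docker build -t <name:tag> <context>` reads the Dockerfile in <context>
    # and produces a tagged image.
    return ["docker", "build", "-t", image, context]


def run_command(image: str, name: str, host_port: int, container_port: int) -> list[str]:
    # `docker run` creates and starts a container, i.e. a runtime instance of the image.
    # --rm deletes the container when it stops, -d runs it in the background,
    # -p publishes container_port on host_port of the host.
    return ["docker", "run", "--rm", "-d", "--name", name,
            "-p", f"{host_port}:{container_port}", image]


def execute(cmd: list[str], dry_run: bool = True) -> None:
    # Always show the command; only invoke the Docker CLI when dry_run is False.
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    execute(build_command(IMAGE))
    execute(run_command(IMAGE, CONTAINER, host_port=8000, container_port=8000))
```

Running the same image several times gives several independent containers, which is why the image is the thing you build once and ship.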

According to Solomon Hykes, in his PyCon talk, containers are:

self-contained units of software you can deliver from a server over there to a server over there, from your laptop to EC2 to a bare-metal giant server, and it will run in the same way because it is isolated at the process level and has its own file system.

Docker images can be stored in an online registry called Docker Hub. You can also store images in your own private registry and keep the Dockerfile under version control. These images can then be pulled to create containers in any environment, e.g. a staging environment or a local environment. This resolves the issue of an app working on one platform and not on another.
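The push-then-pull flow through Docker Hub can be sketched as follows. The `docker login`, `docker tag`, `docker push`, and `docker pull` commands are real Docker CLI commands; the account name `yourname` and repository `myapp` are hypothetical, and the script only prints the commands rather than running them.

```python
import shlex

# Hypothetical Docker Hub account and repository names, for illustration only.
HUB_USER = "yourname"
REPO = "myapp"


def hub_reference(user: str, repo: str, tag: str) -> str:
    # Images on Docker Hub are addressed as <user>/<repository>:<tag>.
    return f"{user}/{repo}:{tag}"


def publish_commands(local_image: str, user: str, repo: str, tag: str) -> list[list[str]]:
    remote = hub_reference(user, repo, tag)
    return [
        ["docker", "login"],                     # authenticate against Docker Hub
        ["docker", "tag", local_image, remote],  # give the local image its Hub name
        ["docker", "push", remote],              # upload the image to the registry
    ]


def deploy_commands(user: str, repo: str, tag: str) -> list[list[str]]:
    remote = hub_reference(user, repo, tag)
    return [
        ["docker", "pull", remote],               # download the same image elsewhere
        ["docker", "run", "--rm", "-d", remote],  # start a container from it
    ]


if __name__ == "__main__":
    print("# on the developer machine")
    for cmd in publish_commands("myapp:1.0", HUB_USER, REPO, "1.0"):
        print(shlex.join(cmd))
    print("# on the staging or production server")
    for cmd in deploy_commands(HUB_USER, REPO, "1.0"):
        print(shlex.join(cmd))
```

Because both environments pull the exact same tagged image, the container started on the server has the same contents as the one tested locally.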

Virtualization Vs Containerization

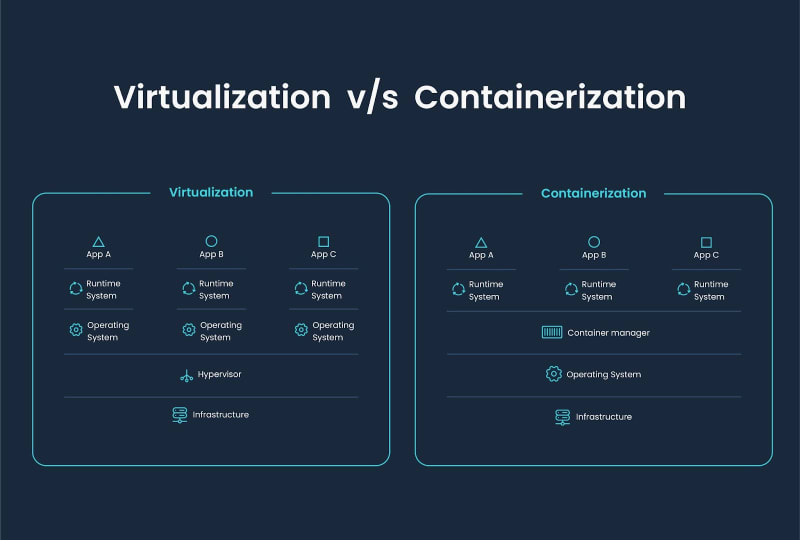

In virtualization, a piece of software called a hypervisor is used to create and run virtual machines. Using a hypervisor, we can create multiple virtual machines on a host operating system.

Virtual machines (VMs) each run their own guest operating system (OS) with its own kernel instead of using the host OS kernel, which results in overhead on the host platform. Also, with VMs we typically allocate fixed resources to every virtual machine, and these do not change with the application's needs, which can waste a lot of memory and disk space.

In containerization, there is a container engine and a single operating system (OS). We have multiple containers with their respective applications and dependencies, all using the host's OS kernel. Here the resources are not fixed, since they are taken according to the needs of the application, so there is very little overhead and containers are lightweight and fast.

However, in some cases we need a VM on the host OS with containers running inside it, for example when we need to run Windows on a Linux host. In such cases we first need a virtual machine that runs the Windows OS.

In Docker's case, the container engine is the Docker Engine.
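One way to observe that containers share the host kernel is to compare the kernel release reported on the host with the one reported inside a container. The sketch below assumes the Docker CLI is installed and uses the small public `alpine` image; it degrades gracefully if Docker is unavailable. With the engine installed natively on Linux the two values should match, whereas Docker Desktop runs the engine inside a Linux VM, so there the container reports the VM's kernel instead, which is the VM-plus-containers setup described above.

```python
import platform
import shutil
import subprocess


def container_kernel(image: str = "alpine") -> str:
    # Run `uname -r` in a throwaway container and return the kernel release it reports.
    # Requires a working Docker installation; "alpine" is just a small public image.
    result = subprocess.run(["docker", "run", "--rm", image, "uname", "-r"],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()


def shares_host_kernel(host_release: str, container_release: str) -> bool:
    # Containers use the kernel of the machine running the Docker Engine,
    # so with the engine installed natively on Linux both values match.
    return host_release.strip() == container_release.strip()


if __name__ == "__main__":
    host = platform.release()  # on Linux this is the same value `uname -r` prints
    if shutil.which("docker") is None:
        print("Docker CLI not found; nothing to compare.")
    else:
        try:
            inside = container_kernel()
        except (subprocess.CalledProcessError, OSError) as exc:
            print("Could not start a container:", exc)
        else:
            print("host kernel:     ", host)
            print("container kernel:", inside)
            print("same kernel:", shares_host_kernel(host, inside))
```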

Docker Architecture

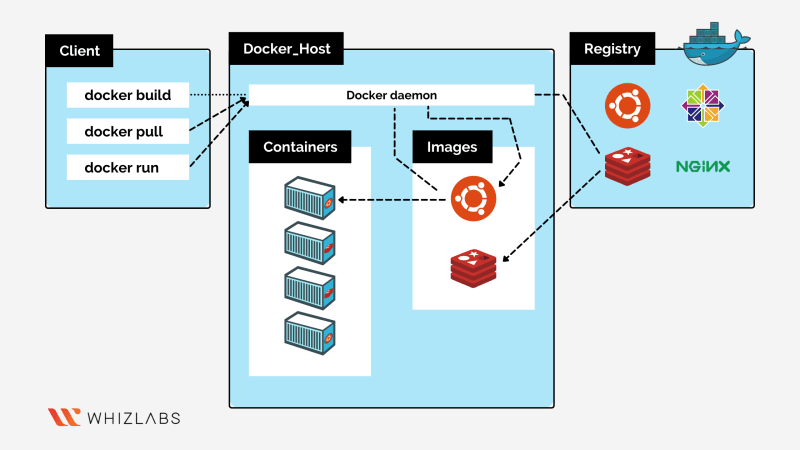

Docker is a novel way to package the tools required to build and launch a container in a more streamlined and simplified way than was previously possible. It comprises the Dockerfile, container images, the Docker run utility, Docker Hub, Docker Engine, Docker Compose, and Docker Desktop.

The core of Docker is Docker Engine. This is the underlying client-server technology that creates and runs the containers.

Docker has a client-server architecture. The command-line interface (CLI) is the client, and the Docker daemon is the server, which manages the Docker containers.

The Docker Engine includes a long-running daemon process called dockerd for managing containers, APIs that allow programs to communicate with the Docker daemon, and a command-line interface.

The Docker server (the daemon) receives commands from the Docker client in the form of REST API requests, whether they are issued through the CLI or by programs calling the API directly. Together, the Docker client and the Docker server make up the Docker Engine.

The Docker client and daemon can run on the same host (machine) or on different hosts.
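To illustrate the client-server split, here is a minimal Python client that sends REST requests to the daemon directly, bypassing the `docker` CLI. It assumes the daemon listens on the Linux default Unix socket `/var/run/docker.sock` (this varies by setup, and accessing it usually requires root or membership in the `docker` group). `/version` and `/containers/json` are Docker Engine API endpoints; the `UnixHTTPConnection` class is our own helper, not part of any library.

```python
import http.client
import json
import socket

# Default daemon socket on Linux; an assumption, since it differs for
# Docker Desktop, rootless mode, or remote daemons.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that speaks HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET):
    # Send one GET request to the daemon's REST API and decode the JSON reply.
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"GET {path} failed with HTTP {response.status}")
        return json.loads(body)
    finally:
        conn.close()


def summarize_containers(containers: list[dict]) -> list[str]:
    # Each entry from /containers/json has "Names" (e.g. ["/web"]), "Image" and "State".
    return [f'{c["Names"][0].lstrip("/")}: {c["Image"]} ({c["State"]})' for c in containers]


if __name__ == "__main__":
    try:
        print("Engine version:", docker_api_get("/version").get("Version"))
        for line in summarize_containers(docker_api_get("/containers/json")):
            print(line)
    except (OSError, RuntimeError, ValueError, http.client.HTTPException) as exc:
        print("Could not reach the Docker daemon:", exc)
```

The `docker` CLI talks to the same API. To use a daemon on a different host, the CLI can be pointed at it with the `-H` flag or the `DOCKER_HOST` environment variable.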

Advantages of Docker

- Build an application only once

An application inside a container can run on any system that has Docker installed, so there is no need to build and configure the app multiple times for different platforms. This resolves the problem of code working on one system and not on another.

- Less worry

With Docker, you test your application inside a container and ship it inside a container. This means the environment in which you test is identical to the one in which the app runs in production. A Docker image can be pulled and used in any environment to create a container that hosts the application.

- Easier orchestration and scaling

Given that Docker containers are lightweight, developers can launch many of them to scale services. These clusters of containers then need to be orchestrated.

- Portability

Docker containers can run on any platform that has Docker installed: your local system, Amazon EC2, Google Cloud Platform, Rackspace servers, VirtualBox, and so on. A container running on AWS can easily be ported to VirtualBox.

- Version Control

Like Git, Docker has a built-in versioning mechanism: you can commit the changes made in a container to a new image and tag each image version, much as you commit and tag changes in a Git repository.

- Isolation

With Docker, every application runs in isolation in its own container and does not interfere with other applications running on the same system, so multiple containers can run side by side without interference. Removal is simple as well: you can delete the container, and apart from any data you deliberately stored outside it (for example in volumes), it leaves no files or traces behind on the system.

- Productivity

Docker allows faster and more efficient deployments without worrying about running your app on different platforms, which can increase productivity considerably.

- Composability

Docker containers make it easier for developers to compose the building blocks of an application into a modular unit with easily interchangeable parts, which can speed up development cycles, feature releases, and bug fixes.
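The version-control and isolation points above map onto a handful of real Docker CLI commands: `docker commit`, `docker tag`, `docker history`, `docker stop`, and `docker rm`. This sketch only prints them; the container name `myapp-container` and repository `myapp` are hypothetical.

```python
import shlex

# Hypothetical container and repository names, used for illustration only.
CONTAINER = "myapp-container"
REPO = "myapp"


def snapshot_commands(container: str, repo: str, version: str) -> list[list[str]]:
    image = f"{repo}:{version}"
    return [
        # Save the container's filesystem changes as a new image, much like a commit.
        ["docker", "commit", "-m", f"snapshot {version}", container, image],
        # Move the "latest" tag to the new version.
        ["docker", "tag", image, f"{repo}:latest"],
        # Show the layers that make up the image, newest first.
        ["docker", "history", image],
    ]


def cleanup_commands(container: str) -> list[list[str]]:
    return [
        ["docker", "stop", container],  # stop the running container
        ["docker", "rm", container],    # delete it; the image it came from is kept
    ]


if __name__ == "__main__":
    for cmd in snapshot_commands(CONTAINER, REPO, "1.1") + cleanup_commands(CONTAINER):
        print(shlex.join(cmd))
```

In day-to-day work, new image versions are more often produced by rebuilding from a Dockerfile kept in Git than by `docker commit`, since that keeps every change reproducible; `commit` is shown here because it mirrors the Git analogy in the text.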

You can sign-up on for practice.

Original Link: https://dev.to/bikocodes/the-tempestuous-tale-of-docker-a-sea-change-in-the-realm-of-computing-2i2d

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
