
How to Integrate Kafka with Node.js?

Apache Kafka is a powerful, open-source distributed event streaming platform that has become increasingly popular in recent years for its ability to handle large amounts of data in real-time streaming scenarios. Node.js, on the other hand, is a JavaScript runtime built on Chrome's V8 JavaScript engine, known for its ability to create scalable, high-performance network applications. In this blog post, we'll explore how Kafka and Node.js can be used together to build efficient, real-time data processing systems that handle high volumes of data and traffic.

You can also check out my weekly posts on LinkedIn.

Requirements:

- Basic knowledge of Node.js

- Basic knowledge of Docker

- Basic knowledge of JavaScript

What we will do:

- Set up Kafka.

- Set up two Node.js apps: one for the producer and one for the consumer.

- Send a message from the producer and consume it.

- Scenario: when a user is created in the producer app, the same user is automatically created in the consumer app.

Let's go over some key terms:

- Producer: a program that writes data to a Kafka cluster. The producer sends messages to one or more topics.

- Consumer: a program that reads data from a Kafka cluster by subscribing to one or more topics.

- Broker: a Kafka server that stores and serves data.

- Topic: a named channel through which producers and consumers communicate.

- Partition: a unit of data storage within a topic. Each topic is divided into one or more partitions.

Let's play the game:

To begin, we will install Kafka.

Install Docker: in this tutorial Kafka runs in a Docker container, so you will need to install Docker on your system if it is not already installed. Follow the instructions for your operating system to install Docker.

We will need two Docker images:

- wurstmeister/zookeeper

- wurstmeister/kafka

1. Create a `docker-compose.yml` file with the following content:

```yaml
version: "3"

services:
  zookeeper:
    image: 'wurstmeister/zookeeper:latest'
    ports:
      - '2181:2181'
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes

  kafka:
    image: 'wurstmeister/kafka:latest'
    ports:
      - '9092:9092'
    environment:
      - KAFKA_BROKER_ID=1
      # Listen on port 9092 on all interfaces inside the container
      - KAFKA_LISTENERS=PLAINTEXT://:9092
      # Address handed to clients; reachable from the host through the port mapping
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://127.0.0.1:9092
      # ZooKeeper is reached by its service name on the compose network
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
    depends_on:
      - zookeeper
```

2. Run the following command to start the containers (`-d` runs them in the background, in detached mode):

```sh
docker-compose up -d
```

3. Open a shell in the Kafka container (replace `kafka_kafka_1` with your container name):

```sh
docker exec -it kafka_kafka_1 /bin/sh
```

4. Create a topic:

```sh
kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 1 --partitions 1 --topic your-topic-name-here
```

Note: Kafka 3.0 and later removed the `--zookeeper` option from `kafka-topics.sh`; with those versions, use `--bootstrap-server localhost:9092` instead.

Additional CLI commands:

- `kafka-topics.sh --list --zookeeper zookeeper:2181`: list all topics.

- `docker ps`: show running containers.

And that's all.
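If you would rather create the topic from Node.js than from the container shell, the kafkajs admin client can do the same job. This is a hedged alternative, not part of the original setup: it assumes `npm i kafkajs`, the broker from the compose file reachable at `localhost:9092`, and hypothetical helper names (`topicConfig`, `ensureTopic`, `createTopicFromNode`). The admin object is passed into `ensureTopic` so the logic can be tried without a running broker.

```javascript
// Sketch (assumption): create the topic with the kafkajs admin client instead
// of kafka-topics.sh. All helper names here are hypothetical.

// Same settings as the CLI command: 1 partition, replication factor 1.
function topicConfig(topic, numPartitions = 1, replicationFactor = 1) {
  return { topic, numPartitions, replicationFactor };
}

// Connects the admin client, creates the topic if it is missing and returns
// all topic names. createTopics resolves to false if the topic already exists.
async function ensureTopic(admin, topic) {
  await admin.connect();
  try {
    await admin.createTopics({ topics: [topicConfig(topic)] });
    return await admin.listTopics();
  } finally {
    await admin.disconnect();
  }
}

// Hypothetical entry point for the local broker from docker-compose.yml.
// Not called automatically; requires `npm i kafkajs` and a running broker.
async function createTopicFromNode(topic = "your-topic-name-here") {
  const { Kafka } = await import("kafkajs");
  const kafka = new Kafka({ clientId: "topic-admin", brokers: ["localhost:9092"] });
  const topics = await ensureTopic(kafka.admin(), topic);
  console.log("Topics:", topics);
}
```

Calling `createTopicFromNode("users")` once after `docker-compose up -d` is enough; running it again is harmless because an existing topic is left untouched.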

Next step: Set up two Node.js apps

1 create a package.json:

npm init2 install packages:

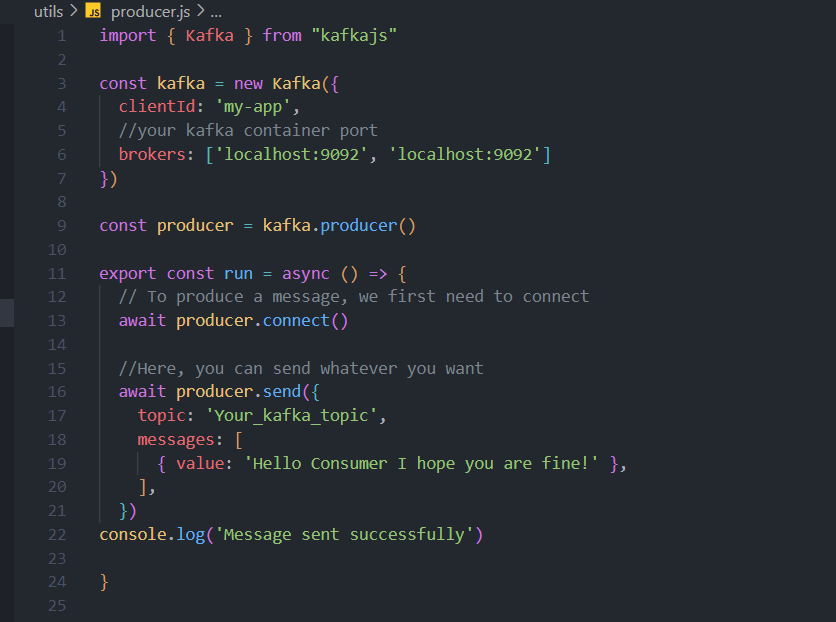

npm i express nodemon dotenv mongoose kafkajs3 Now we will configure our producer:
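A minimal sketch of a producer module with a `run` function (the name the controller later imports from `../utils/producer.js`) could look like the following. It assumes kafkajs, the local broker at `localhost:9092`, a hypothetical topic named `users`, and a hypothetical client id `producer-app`; it is an illustration, not the author's exact code. The optional second argument of `run` only exists so the function can be exercised with a stand-in producer.

```javascript
// Sketch (assumption): a producer module in the spirit of utils/producer.js.
// Topic "users", clientId "producer-app" and the broker address are assumptions.
const USER_TOPIC = "users";

// Kafka stores bytes, so the payload is sent as a JSON string. The _id (when
// present) becomes the message key, so events for the same user always go to
// the same partition.
function toMessage(payload) {
  const message = { value: JSON.stringify(payload) };
  if (payload && payload._id != null) message.key = String(payload._id);
  return message;
}

let producerPromise = null;

// Creates and connects one shared kafkajs producer on first use.
function getProducer() {
  if (!producerPromise) {
    producerPromise = (async () => {
      const { Kafka } = await import("kafkajs");
      const kafka = new Kafka({ clientId: "producer-app", brokers: ["localhost:9092"] });
      const producer = kafka.producer();
      await producer.connect();
      return producer;
    })().catch((err) => {
      producerPromise = null; // allow a retry on the next call
      throw err;
    });
  }
  return producerPromise;
}

// Sends one payload to the topic. `producer` is optional (for testing only).
async function run(payload, producer) {
  const p = producer ?? (await getProducer());
  return p.send({ topic: USER_TOPIC, messages: [toMessage(payload)] });
}
```

When saving this as `utils/producer.js` in an ES-module project, put `export` in front of `async function run` so that `import { run } from "../utils/producer.js"` can reach it. Reusing one connected producer avoids opening a new broker connection for every request.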

4. Add the following two lines to your index file:

The result:

5. Configure the consumer by adding the following:
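For the consumer app, a kafkajs setup along these lines is one possible sketch. The topic `users`, the group id `consumer-app`, the broker address and the helper names (`decodeMessage`, `startConsumer`, `startConsumerApp`) are assumptions for illustration. It also assumes message values are JSON, as the producer sketch would send them.

```javascript
// Sketch (assumption): the consumer app's kafkajs setup. Topic "users",
// groupId "consumer-app" and the broker address are assumptions.

// kafkajs delivers key and value as Buffers; decode them into plain values.
// Assumes JSON values, so non-JSON messages would make JSON.parse throw.
function decodeMessage(message) {
  return {
    key: message.key ? message.key.toString() : null,
    value: message.value ? JSON.parse(message.value.toString()) : null,
  };
}

// Connects, subscribes to the topic and hands every decoded message to onMessage.
async function startConsumer(consumer, onMessage, topic = "users") {
  await consumer.connect();
  // fromBeginning: a group without committed offsets starts at the earliest message.
  await consumer.subscribe({ topic, fromBeginning: true });
  await consumer.run({
    eachMessage: async ({ topic: from, partition, message }) => {
      await onMessage(decodeMessage(message), { topic: from, partition });
    },
  });
}

// Hypothetical entry point (not called automatically); requires kafkajs and
// the broker from docker-compose.yml.
async function startConsumerApp() {
  const { Kafka } = await import("kafkajs");
  const kafka = new Kafka({ clientId: "consumer-app", brokers: ["localhost:9092"] });
  const consumer = kafka.consumer({ groupId: "consumer-app" });
  await startConsumer(consumer, async (msg, meta) => {
    console.log(`Received from ${meta.topic}[${meta.partition}]:`, msg.value);
  });
}
```

Keeping the kafkajs consumer as a parameter of `startConsumer` separates the wiring from the message handling, which makes the handler easy to swap for the user-creation logic of the scenario.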

6. Now we will start our scenario:

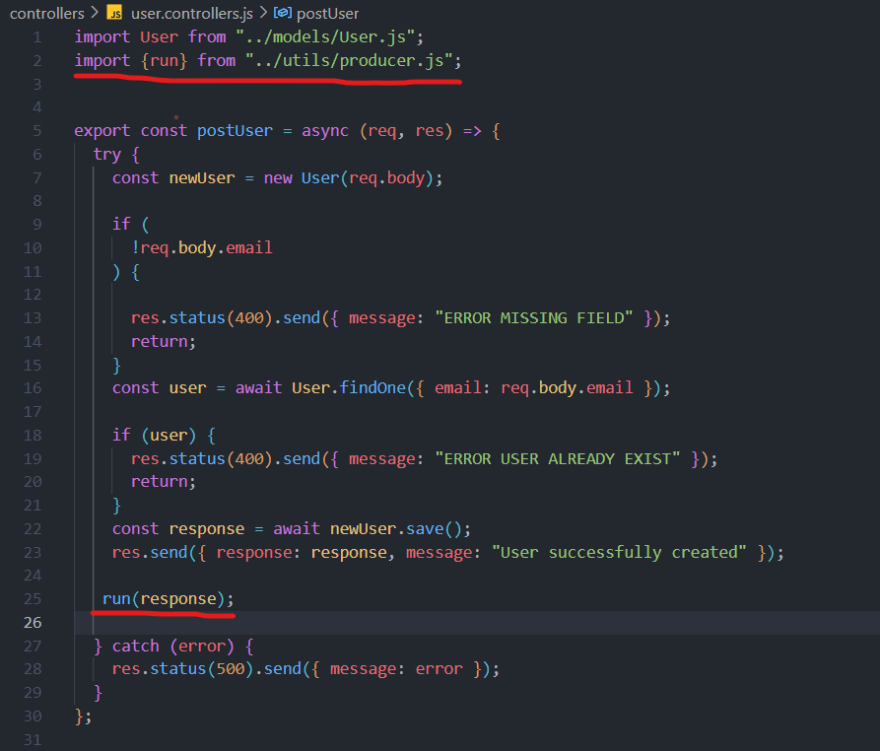

First of all, create your model, controller, and route. Inside the controller, you must add the following two lines:

```javascript
import { run } from "../utils/producer.js";

run(response);
```

as in the example below:
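As a rough sketch of such a controller, assuming Express and Mongoose: `User` stands for your Mongoose model and `publish` for the producer's `run` function. The factory name `makeCreateUser` is hypothetical; both dependencies are passed in so the handler can be exercised without MongoDB or Kafka.

```javascript
// Sketch (assumption): an Express controller that stores a user and then
// publishes it. `User` is your Mongoose model, `publish` the producer's run.
function makeCreateUser(User, publish) {
  return async function createUser(req, res) {
    try {
      const response = await User.create(req.body);
      // Publish only after the save succeeded, so consumers never receive
      // a user that was not stored by the producer app.
      await publish(response);
      return res.status(201).json(response);
    } catch (err) {
      return res.status(500).json({ message: err.message });
    }
  };
}
```

It could be wired as `router.post("/users", makeCreateUser(User, run))`, with `app.use(express.json())` registered so that `req.body` is parsed. Because `publish` is awaited here, a Kafka outage turns into a 500 even though the user was saved; calling `run(response)` without `await`, as in the two lines above, avoids that but leaves publishing errors unhandled.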

7. Update the producer:

8. Update the consumer:
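On the consumer side, the scenario needs a handler that turns each user event into a saved user. The sketch below assumes the consumer app has its own Mongoose model `User` with the same fields as the producer's model; the factory name `makeUserEventHandler` is hypothetical. Upserting by `_id` is a design choice so that a redelivered message does not create a duplicate user.

```javascript
// Sketch (assumption): consumer-side handler for the scenario. Each user event
// is saved with the consumer app's own Mongoose model `User`.
function makeUserEventHandler(User) {
  return async function handleUserEvent({ message }) {
    if (!message.value) return null; // nothing to store
    // Drop Mongoose's internal version key; keep the producer's _id.
    const { _id, __v, ...fields } = JSON.parse(message.value.toString());
    if (_id == null) return User.create(fields);
    // Upsert by _id so a redelivered message does not create a duplicate user.
    return User.updateOne({ _id }, { $set: fields }, { upsert: true });
  };
}
```

It plugs into a kafkajs consumer as `await consumer.run({ eachMessage: makeUserEventHandler(User) })`, since kafkajs calls `eachMessage` with an object that contains the `message`.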

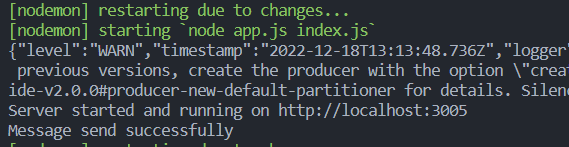

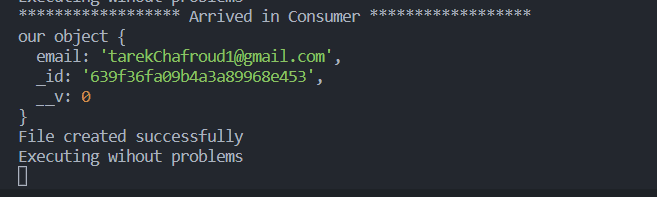

THE RESULT

From the producer:

From the consumer:

In conclusion, Apache Kafka is a powerful distributed streaming platform for real-time data processing and stream processing applications. It can be integrated with Node.js through a Kafka client library such as kafkajs, used in this post, allowing developers to build event-driven and data-driven applications with the two technologies together. Kafka is known for its scalability, high performance, and ability to handle high volumes of data, making it a valuable tool for building robust and scalable applications. However, it can also be complex to set up and maintain, and using it effectively requires a good understanding of its architecture and configuration options. Overall, Kafka and Node.js make a powerful combination for building efficient, scalable applications, and are worth considering for any project that needs real-time data processing or stream processing.

Original Link: https://dev.to/chafroudtarek/how-to-integrate-kafka-with-nodejs--4bil

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
