5 enhancements that will boost your Node.js app

Many factors determine whether the code you write runs as efficiently as it can. Suppose you have built a Node.js application and, after deploying it to production, you notice that it keeps getting slower.

At this point, the question on your mind is: what can you do to make your Node.js app run faster? In this article, we will discuss tips and tools you can use to scale and speed up Node.js applications.

An overview

Node.js is an open-source, cross-platform runtime environment for JavaScript. It is used for running server-side applications, and it suits data-intensive applications of all sizes.

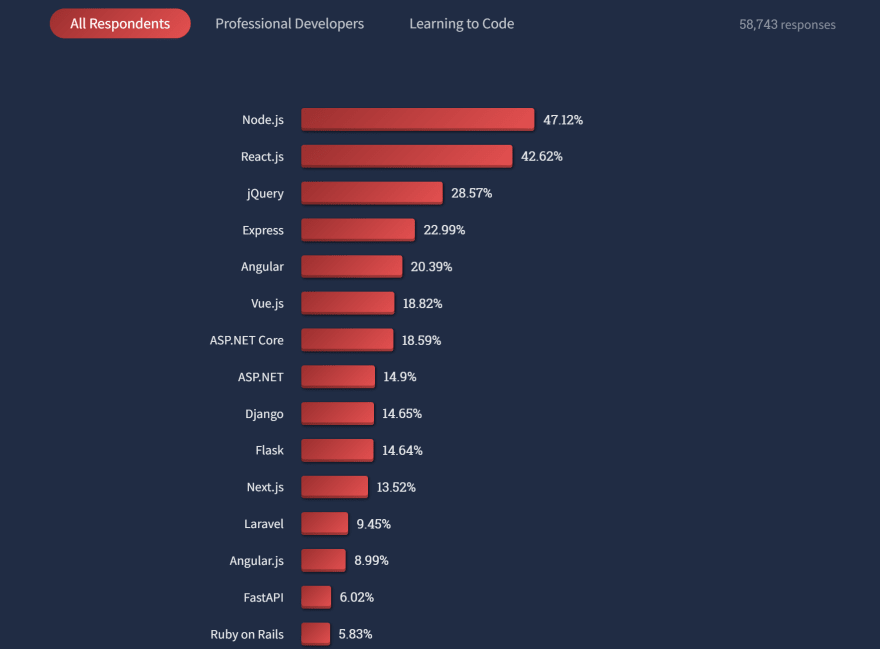

Node.js is dominant in the field of server-side web development. It ranks as one of the most popular web frameworks and technologies. This Stack Overflow survey shows how Node.js competes with other server-side technologies such as Django, Laravel, Ruby on Rails, and Flask.

There are several reasons behind the tremendous popularity of Node.js. These include:

Node.js is easy to pick up. It is built on plain JavaScript, one of the most commonly used programming languages. Because JavaScript is so popular, it is relatively easy to get up and running with Node.js.

Node.js has a giant ecosystem, available through the Node Package Manager (NPM). The NPM registry gives you access to open-source libraries that cover the entire Node.js web development pipeline. You can install these libraries and use them right away. This saves a lot of development time while still delivering light, scalable, and high-speed apps.

Node.js is light and fast, allowing developers to create high-performance applications. It is built on the high-performance V8 engine, which compiles and executes JavaScript code.

As a developer, you want to fully exploit the capacity of Node.js to build highly scalable applications. Even so, you need additional tools to make your Node.js applications run even faster. Let's discuss tips and tools developers can use to optimize and boost the already fast architecture that Node.js delivers.

How to boost your Node.js app?

1. Using a message broker

A message broker is software that provides stable, reliable communication between two or more applications or parts of an application. In essence, a message broker supports an architecture in which you break applications apart while they still communicate asynchronously.

In this case, a message is the piece of information that one application sends to another for processing. A broker acts as the medium through which the actual data payloads are transmitted between the applications. A message broker uses a queue system to hold messages. It keeps them in the order they are received and delivers them to the intended recipient.

Here is a general architecture of how a message broker works:

Now that we have an idea of what a message broker is, how does it enhance the scalability of an application? To answer this question, let's look at the higher-level advantages that a message broker offers:

Simplified Decoupling - A message broker eliminates the dependencies between applications. It acts as the middleman between a client and the server. The server's job is to send data to the broker; it doesn't have to be in direct contact with the message recipient. When the client needs the data, it gets the messages from the broker at any time. There is no need for a discovery mechanism to locate server instances, because the message broker takes care of that.

Increased Architectural Reliability - The server can send messages whether or not the client is active and vice versa. The only component that must be running is a message broker. When a server sends messages, its job is done. It is now the work of the broker to deliver the messages to the appropriate recipients.

Asynchronous Processing - Assume you are running a full-scale Node.js API using the REST architecture. The server and client are tightly coupled: they communicate directly through designated endpoints over HTTP to exchange requests and responses. When the client sends a request, it expects an immediate response from the server. REST communication is synchronous by design. It works with pre-defined requests that must return a response. If the response fails to arrive, undesirable user experiences can follow, such as a timeout error. Message brokers, on the other hand, are asynchronous. The sender does not wait for the recipient, so a slow or offline recipient does not cause the sender's request to time out.
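To make the queueing and decoupling ideas concrete, here is a minimal in-memory sketch. It is not how a production broker works internally, and `InMemoryBroker`, `publish`, `subscribe`, and `drain` are hypothetical names chosen for illustration. Delivery here happens in the same process and synchronously once a consumer is attached; the point is only that the producer never needs the consumer to be online.

```javascript
// Minimal in-memory broker sketch. InMemoryBroker, publish, subscribe, and
// drain are hypothetical names, not the API of any real message broker.
class InMemoryBroker {
  constructor() {
    this.queues = new Map(); // queue name -> pending messages, oldest first
    this.consumers = new Map(); // queue name -> consumer callback
  }

  // The producer hands a message to the broker and moves on.
  publish(queue, message) {
    if (!this.queues.has(queue)) this.queues.set(queue, []);
    this.queues.get(queue).push(message);
    this.drain(queue);
  }

  // A consumer can attach at any time; queued messages are delivered in order.
  subscribe(queue, handler) {
    this.consumers.set(queue, handler);
    this.drain(queue);
  }

  // Deliver pending messages first-in, first-out if a consumer is attached.
  drain(queue) {
    const handler = this.consumers.get(queue);
    const pending = this.queues.get(queue) ?? [];
    while (handler && pending.length > 0) {
      handler(pending.shift());
    }
  }
}

const broker = new InMemoryBroker();
const received = [];

// The "server" publishes while no client is connected.
broker.publish("orders", { id: 1 });
broker.publish("orders", { id: 2 });

// The "client" comes online later and still gets every message, in order.
broker.subscribe("orders", (msg) => received.push(msg.id));
broker.publish("orders", { id: 3 });

console.log(received); // [ 1, 2, 3 ]
```

A real broker adds persistence, acknowledgements, and network transport on top of this idea, which is why the only component that must stay running is the broker itself.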

How does this benefit your Node.js apps?

Improved system performance - Message brokers use message queues for asynchronous communication. High-demand processes can be isolated into independent processes. This ensures data transfer is optimized as no system component is ever held up while waiting for the other. That will help accelerate your application performance and enhance the user experience.

Scalability - The server and the client can both scale up and down in response to data demands. Components can keep adding entries to the queue even when demand peaks, without fear of crashing the system. Likewise, clients can be scaled up and the workload distributed based on incoming data.

Great examples of message brokers can be found in this repo, and a new one I recently found is Memphis. It's a great fit for Node.js/TypeScript/NestJS, with a modern dev-first approach. Read more here: [Memphis SDKs for Node.js/TypeScript](https://docs.memphis.dev/memphis-new/sdks/node.js-typescript).

2. Build Node.js with gRPC

gRPC is an open-source remote procedure call (RPC) framework used to build fast, scalable communication between microservices. It helps you create a high-performance communication protocol between services. With an RPC framework, a client directly invokes a function on the server. In simple terms, RPC is a protocol that allows a program to execute a procedure of another program located on another computer without explicitly coding the details of the network interaction. The underlying framework handles those details automatically.
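The idea of calling a remote function as if it were local can be sketched in a few lines. This is not gRPC and uses none of its APIs. `procedures`, `fakeTransport`, and `createStub` are hypothetical names, the "network" is a plain function call, and messages are JSON strings instead of protocol buffers. Real RPC calls are also asynchronous; the sketch is synchronous only to stay short.

```javascript
// Server side: the procedures that remote callers are allowed to invoke.
const procedures = {
  add: (a, b) => a + b,
  greet: (name) => `Hello, ${name}`,
};

// Stand-in for the network: takes a serialized request and returns a
// serialized response. A real framework would send these bytes over a socket.
function fakeTransport(requestText) {
  const { method, params } = JSON.parse(requestText);
  if (!Object.hasOwn(procedures, method)) {
    return JSON.stringify({ error: `unknown method: ${method}` });
  }
  return JSON.stringify({ result: procedures[method](...params) });
}

// Client side: a stub that turns any method call into a request message.
function createStub(transport) {
  return new Proxy({}, {
    get(_target, method) {
      return (...params) => {
        const reply = JSON.parse(transport(JSON.stringify({ method, params })));
        if (reply.error) throw new Error(reply.error);
        return reply.result;
      };
    },
  });
}

const calc = createStub(fakeTransport);

// The caller writes an ordinary function call; the stub hides the messaging.
console.log(calc.add(2, 3)); // 5
console.log(calc.greet("Ada")); // Hello, Ada
```

The caller never builds a URL or parses a response by hand, which is the convenience an RPC framework provides.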

What makes the gRPC framework special?

It uses the HTTP/2 protocol. Architectures such as REST traditionally use HTTP/1.1 as the transfer protocol. This protocol is based on a request-response model using generic HTTP methods, such as GET, POST, PUT, and DELETE. On a single HTTP/1.1 connection, requests are handled one at a time. HTTP/2 supports a bidirectional communication model alongside the request-response model, so multiple requests and responses can be served simultaneously over one connection. This creates a loose coupling between server and client, allowing you to build fast and efficient applications that support streaming with low latency.

It uses Protocol buffers (protobuf) as the message format. When exchanging data using SOAP, the information is exchanged as XML. When using REST, the data is typically exchanged as JSON. In gRPC, data is exchanged as protocol buffers. Protocol buffers are lighter, faster, and more efficient than both XML and JSON, and they produce smaller payloads. Under the hood, protobuf serializes structured data. The protobuf compiler generates code from your message definitions, and that code serializes data into the protobuf binary format exchanged between the client and the server.

Language agnostic - Most modern languages and frameworks support gRPC, including Node.js, Python, Go, Java, PHP, and C#. The client and the server can be built with different languages or frameworks. It is more flexible than conventional APIs since clients can call any function the service defines, not only the typical GET, POST, and DELETE methods.
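To see why a binary format such as protocol buffers tends to produce smaller payloads than JSON, the sketch below hand-encodes one small message using the protobuf wire format (a tag byte per field, varints for integers, length-prefixed strings). In practice you would use generated code rather than write this yourself. `encodeVarint` and `encodeUser` are hypothetical helpers, the message shape (field 1 is an integer `id`, field 2 is a string `name`) is an assumption, and the varint helper only handles non-negative integers below 2^32.

```javascript
// Encode a non-negative integer as a protobuf varint (7 bits per byte).
function encodeVarint(n) {
  const bytes = [];
  while (n > 0x7f) {
    bytes.push((n & 0x7f) | 0x80); // low 7 bits, continuation bit set
    n >>>= 7;
  }
  bytes.push(n);
  return bytes;
}

// Encode { id, name } as: field 1 = varint id, field 2 = UTF-8 string name.
function encodeUser({ id, name }) {
  const nameBytes = Buffer.from(name, "utf8");
  return Buffer.from([
    (1 << 3) | 0, // tag: field 1, wire type 0 (varint)
    ...encodeVarint(id),
    (2 << 3) | 2, // tag: field 2, wire type 2 (length-delimited)
    ...encodeVarint(nameBytes.length),
    ...nameBytes,
  ]);
}

const user = { id: 150, name: "Ada" };
const binary = encodeUser(user);
const json = Buffer.from(JSON.stringify(user), "utf8");

console.log(binary.toString("hex")); // 0896011203416461
console.log(binary.length, json.length); // 8 23
```

The binary form carries no field names, quotes, or braces, only small numeric tags. How much you save in practice depends on the shape of your data.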

How does running Node.js with gRPC benefit your application?

Faster communication - gRPC uses HTTP/2. This minimizes latency and network bandwidth usage to ensure a smoother user experience. At the same time, it is API-driven, which provides you flexibility in interface design.

Increased application performance - REST uses synchronous calls, so the caller waits until the server has returned a response before execution continues. With gRPC, asynchronous calls return immediately, and the response is processed as an independent task.

Lightweight messages - Protocol buffer messages are smaller than the equivalent JSON messages, with a difference of up to 30 percent.

gRPC and Message Brokers help you handle and manage your application messages. Check how gRPC compares to Message Broker.

3. Optimizing Node.js with clustering

Node.js is single-threaded. By default, it uses only one CPU to execute an application. This means that if you have 8 CPUs on your machine, Node.js uses only one of them, even when performing CPU-intensive operations. This keeps the application from using the full power of the underlying hardware, which can leave the server unable to keep up under heavy load.

To solve this, Node.js uses clusters. A cluster is a group of Node.js instances running on one computer. One instance acts as the master, and it starts worker instances that run on the other available CPUs.

Node.js clustering allows networked Node.js applications to be scaled across the number of available CPUs. Here is a basic example of node clustering.

This computer has 4 processors. By default, the single-threaded nature of Node.js means it will use only one CPU. However, we can spread work across the available CPUs using the Node.js cluster module. Under the hood, Node.js runs child processes that share the server ports while still being able to communicate with the parent Node.js process. Depending on the number of CPUs available, this can significantly boost the performance, efficiency, and reliability of your application.
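A minimal sketch of this setup with the built-in `cluster` module might look like the following, assuming Node.js 16 or later (for `cluster.isPrimary`). `workerCount`, `startCluster`, the port number, and the `RUN_CLUSTER_DEMO` environment variable are hypothetical choices for this example.

```javascript
const cluster = require("node:cluster");
const http = require("node:http");
const os = require("node:os");

// How many workers to fork: one per CPU unless a smaller cap is requested.
function workerCount(cpuCount = os.cpus().length, cap = Infinity) {
  return Math.max(1, Math.min(cpuCount, cap));
}

function startCluster(port = 3000) {
  if (cluster.isPrimary) {
    // The primary process only manages workers; it serves no requests itself.
    for (let i = 0; i < workerCount(); i++) cluster.fork();

    // Replace a worker that dies so capacity stays constant.
    cluster.on("exit", (worker) => {
      console.log(`worker ${worker.process.pid} exited, starting a new one`);
      cluster.fork();
    });
  } else {
    // Every worker listens on the same port; the cluster module shares it.
    http
      .createServer((req, res) => {
        res.end(`handled by worker ${process.pid}\n`);
      })
      .listen(port);
  }
}

// Opt-in guard so that loading this file does not fork processes by accident.
// Workers inherit the environment, so they reach startCluster() as well.
if (process.env.RUN_CLUSTER_DEMO === "1") startCluster();
```

Saved as, say, `server.js`, this could be started with `RUN_CLUSTER_DEMO=1 node server.js`. Repeated requests to the port would then be answered by different worker process IDs.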

4. Load balancing

Let's assume you have a web app, an online shop to be specific. Users access your shop using a domain name. This domain communicates with the server to get things done on the user's end. However, when heavy traffic hits your online shop, the demand for resources increases.

You may need to set up additional servers to distribute the traffic, which leaves you with multiple replicas of your application. But how do you direct users to the resources on the replica servers? If they all connect to the initial server, it will run out of resources while the other server instances sit unused.

At this point, you need to balance the traffic across all servers. This is load balancing: distributing the traffic evenly. It gives your application optimal performance and ensures no node gets overloaded.

Load balancing is the process of distributing application tasks across a given set of resources to ensure the resources are used efficiently overall. This way, all your client requests are distributed evenly across the backend nodes running your application.
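One simple way to distribute requests evenly is round-robin selection, where each new request goes to the next server in the list. The sketch below shows only that selection logic, not a full proxy. `createRoundRobin`, `pickBackend`, and the backend addresses are hypothetical.

```javascript
// Round-robin selection: each call hands back the next backend in the list.
function createRoundRobin(backends) {
  if (backends.length === 0) throw new Error("at least one backend is required");
  let next = 0;
  return function pick() {
    const backend = backends[next];
    next = (next + 1) % backends.length; // wrap around after the last backend
    return backend;
  };
}

const pickBackend = createRoundRobin([
  "10.0.0.1:3000",
  "10.0.0.2:3000",
  "10.0.0.3:3000",
]);

// Six incoming requests are spread evenly: each backend receives two.
const assignments = Array.from({ length: 6 }, () => pickBackend());
console.log(assignments);
```

Dedicated load balancers offer further strategies, such as weighting servers or preferring the one with the fewest active connections, but the goal is the same: no single node takes all the traffic.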

It is essential to have a load balancer configured for your Node.js deployment so you can scale it based on resource demand. One of the popular tools used for load balancing Node.js applications is NGINX. NGINX is an open-source tool that allows you to configure HTTP and HTTPS servers for client traffic.

By distributing traffic, a load balancer can prevent application failure and increase performance and availability. How does building distributed Node.js systems with a load balancer benefit your application?

Horizontal Scalability - Distributing your application across instances allows it to handle a larger number of incoming requests.

Reduced server downtime

Flexibility

Redundancy

Efficiency

5. Caching

Caching is the temporary storing of data that is likely to be accessed repetitively. This practice uses a memory buffer to temporarily save application lookups.

A cache streamlines service delivery by ensuring that repeated work is served from a memory buffer rather than from the server. This way, when the client makes a request, the lookups saved in the cache are checked first, before the request hits the server.

When a server recomputes frequently requested resources for every identical request, clients wait longer for their data. Serving such results from a cache layer allows you to deliver data and respond to requests with minimal delay.

The first time a request is sent, the data is not in the cache yet and a call is made to the server. This is called a cache miss. The output is saved in the cache before the data is returned to the user.

If the requested data is found in the cache memory, it's called a cache hit. The result is returned from the cache store, and the complex data query doesn't need to be processed again.
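The hit and miss flow can be sketched with a plain `Map`. `slowLookup` stands in for a database query or remote call, and `getProduct`, `productCache`, and `stats` are hypothetical names for this example.

```javascript
// Look in the cache first and fall back to the slow source on a miss.
const productCache = new Map();
const stats = { hits: 0, misses: 0 };

// Stand-in for an expensive database query or API call.
function slowLookup(id) {
  return { id, name: `product-${id}` };
}

function getProduct(id) {
  if (productCache.has(id)) {
    stats.hits += 1; // cache hit: answer straight from memory
    return productCache.get(id);
  }
  stats.misses += 1; // cache miss: do the expensive work once
  const value = slowLookup(id);
  productCache.set(id, value); // save the output before returning it
  return value;
}

getProduct(7); // miss
getProduct(7); // hit
getProduct(8); // miss

const hitRate = stats.hits / (stats.hits + stats.misses);
console.log(stats, hitRate.toFixed(2)); // { hits: 1, misses: 2 } 0.33
```

Counting hits and misses like this also gives you the hit rate, a useful number to watch when tuning a cache.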

It is important to always check the cache hit rates and polish the caching strategy accordingly. A cache layer is not infinite. Therefore, you need effective cache management. For example:

Invalidate cache entries after a certain period.

Remove stale cache entries to ensure the cache hit ratio remains high.

Invalidate cache entries that fall below a certain usage threshold.
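As a rough sketch of these ideas, the cache below expires entries after a fixed time-to-live and, when full, evicts the least recently used entry. Least-recently-used eviction is one possible reading of a usage threshold, not the only one. `TtlLruCache` and its options are hypothetical names, and the clock is injected so that expiry can be demonstrated without waiting.

```javascript
// Bounded cache with time-based expiry and least-recently-used eviction.
class TtlLruCache {
  constructor({ maxEntries, ttlMs, now = Date.now }) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, which makes expiry easy to test
    this.entries = new Map(); // Map keeps insertion order: oldest entry first
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // invalidate after the configured period
      return undefined;
    }
    // Re-insert so this key becomes the most recently used.
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    if (this.entries.size > this.maxEntries) {
      // Evict the least recently used entry (the first key in the Map).
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }
}

let clock = 0;
const ttlCache = new TtlLruCache({ maxEntries: 2, ttlMs: 1000, now: () => clock });

ttlCache.set("a", 1);
ttlCache.set("b", 2);
ttlCache.get("a"); // "a" is now the most recently used entry
ttlCache.set("c", 3); // the cache is full, so "b" is evicted

const afterEviction = [ttlCache.get("a"), ttlCache.get("b"), ttlCache.get("c")];
console.log(afterEviction); // [ 1, undefined, 3 ]

clock = 1500; // move past the 1000 ms time-to-live
const afterExpiry = ttlCache.get("a");
console.log(afterExpiry); // undefined
```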

Distributed systems often need to complete many API calls to assemble a single response payload. Running such calls with a cache drastically reduces the cost of data aggregation. Running such Node.js tasks with caching can:

Greatly reduce the data query response time.

Improve the scalability of an application.

Reduce server load, which greatly increases server performance.

Improve database performance. A cached request doesn't have to hit the server, meaning the data query doesn't have to access your database layer.

Node.js caching tools include:

Redis Cache. Redis is an in-memory database that keeps the entire data set in memory, reducing the cost of a lookup.

Using a caching reverse proxy, such as Varnish Cache. Varnish is an HTTP accelerator that saves your server-side requests and responses to reduce the loading times of your Node.js server.

Using HTTP cache middleware in the Node.js app server. It allows you to add a cache middleware layer that plugs into the Node.js HTTP server to reduce API latency.

Using Nginx for content caching. Nginx caches content of application servers, both static and dynamic, to streamline the client delivery and reduce server load.

Other practices to power up your Node.js app

There are many practices that you can use to ensure your Node.js application scales. Other practices and tools include:

Practicing asynchronous executions.

Logging and monitoring your application performance.

Writing light and compact code, and eliminating unnecessary lines of code and unused library components.

Practicing memory optimization.

Running Node.js using SSL/TLS and HTTP/2.

Enhancing data handling techniques, for example, GraphQL vs. REST designs.

Making use of WebSockets to improve server communication.

Using the Node.js Deflate and Gzip compression middleware to compress server requests and responses.
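To get a feel for what compression buys you, the sketch below gzips a repetitive JSON payload with the built-in `zlib` module. It calls `zlib` directly instead of going through a compression middleware, and the payload is made-up sample data. The synchronous calls are fine for a demo, but a server would normally use the streaming or asynchronous variants.

```javascript
const zlib = require("node:zlib");

// A repetitive JSON payload, similar to a list endpoint's response body.
const body = JSON.stringify(
  Array.from({ length: 200 }, (_, i) => ({ id: i, status: "active" }))
);

const original = Buffer.from(body, "utf8");
const compressed = zlib.gzipSync(original);
const restored = zlib.gunzipSync(compressed).toString("utf8");

console.log(`${original.length} bytes -> ${compressed.length} bytes`);
console.log(restored === body); // true
```

Text formats such as JSON and HTML repeat a lot of structure, which is why they tend to compress well.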

Conclusion

Building an application is the first step to connecting with your users. Your application requires day-to-day maintenance to maintain a steady experience for these users. This guide helped you learn some of the common strategies and enhancement tools that you can use to boost your Node.js apps.

Original Link: https://dev.to/chegerose/5-enhancements-that-will-boost-your-nodejs-app-3pj5

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
