
PLAYING SUPER MARIO BROS ON FARGATE (CONTAINERS SERIES) - EP 1

Hi folks! This series will contain several episodes with the goal of explaining, in a totally fun way, how to run a containerized application on AWS. We'll cover several compute topics, and by the end we'll have a game running on a Fargate cluster on AWS. In this episode, we'll go over containerization concepts and some related topics. We'll also run the application on our local machine, the same one we'll later deploy to our Fargate cluster.

First, let's go over some concepts that will help us understand containers. We need to talk about the kernel, the program that manages the connection between hardware and software. It is responsible for allowing applications to run, handling reads and writes to disk and memory, managing the machine's available resources, scheduling processes, and so on.

Each OS has its own kernel implementation, but every kernel is responsible for controlling the hardware.

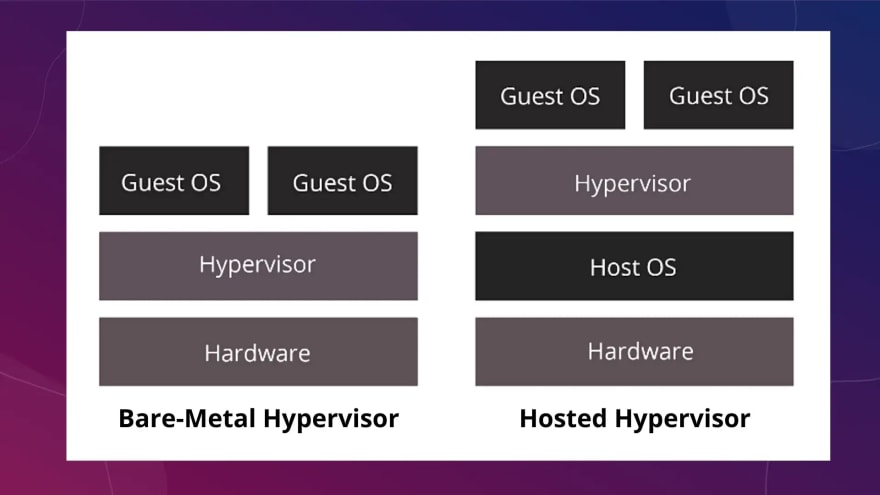

To run one OS inside another, you need a hypervisor: software that enables one or more guest operating systems (virtual machines) to run on a single physical machine. Its job is to control each virtual machine's access to the hardware devices.

There are two types of hypervisors: the bare-metal (type 1) hypervisor and the hosted (type 2) hypervisor.

In a bare-metal hypervisor, the hypervisor software runs directly on the machine's hardware, and the virtual operating systems run on top of this hypervisor layer.

In a hosted hypervisor, virtualization is provided by a program installed on top of the machine's existing OS, such as VirtualBox.

CGROUPS

We can't talk about containers without mentioning Linux's control groups, or cgroups, which were created by Google engineers. Cgroups are a Linux kernel feature that limits, accounts for, and isolates the resource usage of groups of processes.

Processes are organized into these groups, and the limits set on each group can cap its overall memory, CPU, and I/O usage. Cgroups work together with namespaces, another kernel feature.
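On Linux you can see which cgroups a process belongs to by reading /proc/<pid>/cgroup. The minimal Python sketch below parses that file, assuming the format described in the cgroups(7) man page: one `hierarchy-ID:controller-list:cgroup-path` line per hierarchy, or a single `0::/path` line on a cgroup v2 system. The names `CgroupEntry`, `parse_proc_cgroup`, and `current_cgroups` are made up for this example. Docker asks the kernel to set limits on a container's cgroup through `docker run` flags such as `--memory` and `--cpus`.

```python
from pathlib import Path
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]  # empty on the unified cgroup v2 hierarchy
    path: str


def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse /proc/<pid>/cgroup content ("hierarchy-ID:controller-list:cgroup-path" lines)."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # maxsplit=2 keeps any ':' that appears inside the cgroup path intact.
        hierarchy_id, controllers, path = line.split(":", 2)
        names = [c for c in controllers.split(",") if c]
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


def current_cgroups() -> List[CgroupEntry]:
    """Return the cgroups of the running process (Linux only; empty list elsewhere)."""
    proc_file = Path("/proc/self/cgroup")
    return parse_proc_cgroup(proc_file.read_text()) if proc_file.exists() else []
```

Running `current_cgroups()` on the host and inside a container usually shows different cgroup paths. The exact paths depend on the Docker version and cgroup driver, so don't rely on a particular layout.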

The function of namespaces is to make the resources accessed by a process appear to be the only resources the system has, making namespaces an isolation tool for processes that share the same kernel.
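Each namespace a process belongs to is exposed as a symbolic link under /proc/<pid>/ns, whose target looks like `net:[4026531840]`. Two processes share a namespace when these links refer to the same namespace. The namespaces(7) man page says to compare both the device ID and the inode number; this sketch keeps only the inode for brevity. The helpers `parse_ns_link` and `namespaces_of` are hypothetical, Linux-only illustrations, not part of any container tool:

```python
import os
import re
from typing import Dict, Optional, Tuple

# Namespace types whose /proc/<pid>/ns link is named after the type itself.
NAMESPACE_TYPES = ("cgroup", "ipc", "mnt", "net", "pid", "user", "uts")
_LINK_PATTERN = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> Optional[Tuple[str, int]]:
    """Split a link target such as 'net:[4026531840]' into ('net', 4026531840)."""
    match = _LINK_PATTERN.match(target)
    return (match.group(1), int(match.group(2))) if match else None


def namespaces_of(pid: str = "self") -> Dict[str, int]:
    """Map each namespace type of a process to its namespace inode number (Linux only)."""
    result = {}
    for ns_type in NAMESPACE_TYPES:
        try:
            parsed = parse_ns_link(os.readlink(f"/proc/{pid}/ns/{ns_type}"))
        except OSError:
            continue  # unsupported by this kernel, not readable, or not on Linux
        if parsed:
            result[parsed[0]] = parsed[1]
    return result
```

Comparing the output of `namespaces_of()` on the host with the same call made inside a container should show different pid, net, and mnt values. That difference is the isolation described above.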

Cgroups made the creation of LinuX Containers (LXC) possible. LXC was one of the pillars of the containers we work with today: it combines cgroups and namespace isolation to create a virtual environment with its own process and network space.

CONTAINERS

Containers are isolated environments within a server. Their purpose is to separate responsibilities and to isolate the processes of each application.

A container is a group of processes running from an image that provides all the necessary files. Containers share the host's kernel and isolate application processes from the rest of the operating system.
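One quick way to see the shared kernel is to print the kernel release from Python, which reports the same value as `uname -r`. On a Linux host, run it both on the host and inside a container, for example with `docker run --rm python:3 python -c "import platform; print(platform.release())"`. Both should print the same release, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, the container instead reports the kernel of Docker's internal Linux VM. The function name `kernel_release` is only for illustration.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    # platform.release() reports the kernel release, the same value `uname -r` prints.
    return platform.release()


print(f"kernel: {platform.system()} {kernel_release()}")
```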

Containers have their own filesystem and IP address and are very lightweight. They start and restart quickly because there is no kernel to boot each time.

A container is not the same thing as a VM. According to UOL's blog, containers share the same operating system kernel and can create isolated environments where different applications run simultaneously, with the division made based on available resources such as memory and processing. A virtual machine, on the other hand, allows a physical machine to host other (virtual) hardware with its own operating system and hard drive, independent of the original hardware.

DOCKER

Docker is the most widely used container technology today, making it the industry standard tool for containerization.

A Docker container is created from an image. The base image contains the container's operating system files, which can come from a different distribution than the host's (for example, an Alpine image on an Ubuntu host), and the image is made up of several layers.

The first level is the boot filesystem (bootfs), which contains the boot loader and the kernel. A container does not bring its own kernel, though: it uses the host's kernel, and that kernel is where the cgroups for process control, the namespaces, and the device mapper are created.

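The second layer is the base image's root filesystem (rootfs). The rootfs can be based on different operating system distributions, such as Debian or Ubuntu, and further read-only layers can be stacked on top of it.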

The top layer is the writable container layer. Changes made while the container runs are written only to this layer and are never reflected in the read-only image layers below it. This is the layer the container's user works in, and it can be committed (docker commit) to create a new image.
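To make the layering concrete, here is a toy Python model of how a union filesystem resolves files across layers. It assumes the behavior described above: read-only image layers, one writable layer on top, copy-on-write for changes, and "whiteout" markers that hide deleted files. `LayeredFS` is a hypothetical class. Docker's real storage drivers, such as overlay2, do this at the filesystem level, not with Python dictionaries.

```python
class LayeredFS:
    """Toy union filesystem: read-only image layers plus one writable container layer."""

    _WHITEOUT = object()  # marker recording that a file was deleted in the container layer

    def __init__(self, image_layers):
        # Image layers are ordered bottom (base) to top and are never modified.
        self.image_layers = [dict(layer) for layer in image_layers]
        self.container_layer = {}

    def read(self, path):
        # Look in the writable layer first, then walk the image layers from top to bottom.
        for layer in [self.container_layer, *reversed(self.image_layers)]:
            if path in layer:
                value = layer[path]
                if value is self._WHITEOUT:
                    raise FileNotFoundError(path)
                return value
        raise FileNotFoundError(path)

    def write(self, path, content):
        # Copy-on-write: changes land only in the container layer.
        self.container_layer[path] = content

    def delete(self, path):
        # Deleting hides the file with a whiteout instead of touching the image layers.
        self.container_layer[path] = self._WHITEOUT
```

For example, writing a new `/app/index.html` into a `LayeredFS([base, app])` changes what `read("/app/index.html")` returns. The copy held in `image_layers` stays untouched, which is why removing a container discards its changes unless they are committed to a new image.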

Docker Hub is a registry of Docker images where we can find many ready-to-use images.
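When you pull an image by a short name, Docker expands it into a full reference made of registry, repository, and tag. The sketch below is a simplified, hypothetical version of those rules. It assumes Docker Hub (docker.io) as the default registry, `latest` as the default tag, and the `library/` namespace for single-name official images. It skips digests and name validation, and `normalize_image_ref` is not a Docker API.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize_image_ref(ref: str) -> ImageRef:
    """Expand a short image name into registry, repository and tag (digests not handled)."""
    # The tag follows the last ':' after the final '/', so a registry port is not a tag.
    name, tag = ref, DEFAULT_TAG
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    # The first path component is a registry only if it looks like a hostname.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name

    # Single-name images on Docker Hub live under the "library" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = f"library/{repository}"
    return ImageRef(registry, repository, tag)
```

For example, `normalize_image_ref("pengbai/docker-supermario")` returns `ImageRef(registry='docker.io', repository='pengbai/docker-supermario', tag='latest')`, the image pulled in the hands-on section below.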

To learn more about Docker, its tools, image creation, and Docker storage systems, click here.

HANDS ON

Let's start our first local container with the image of our game, which we'll later deploy to a Fargate cluster on AWS.

The first thing to do is install Docker. Click here to install it. You can check the Docker commands HERE.

Next, download the image. We won't create the image ourselves; it's already available on Docker Hub.

For that, use the following command:

```shell
docker pull pengbai/docker-supermario
```

The previous command downloaded the Docker image to your machine. Now we'll run a container from this image with the command below, passing the necessary port mapping as a parameter.

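```shell
docker run -d -p 8600:8080 pengbai/docker-supermario
```

The -d flag runs the container in the background, and -p 8600:8080 maps the container's port 8080 to port 8600 on your machine. Open http://localhost:8600 in your browser.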
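If the page doesn't load right away, the container may still be starting. This small Python sketch polls a URL until it answers with HTTP 200 or a timeout expires. `wait_for_http` is a hypothetical helper, not part of Docker. It assumes the port mapping from the command above (host port 8600).

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Poll `url` until it answers HTTP 200 or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # Each attempt gets a short socket timeout so a stuck request can't block forever.
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # URLError, HTTPError, refused connections and socket timeouts are OSError
            # subclasses: the container is probably still starting, so try again shortly.
            pass
        time.sleep(interval)
    return False


# Example (after `docker run -d -p 8600:8080 pengbai/docker-supermario`):
# wait_for_http("http://localhost:8600")
```

If it returns False, docker ps (is the container running?) and docker logs with the container ID are the usual places to look.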

Now you can play the game locally.

To be continued

Original Link: https://dev.to/aws-builders/track-containers-on-fargate-1-3k10

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
