Stream messages from Stripe to Kafka

Data volumes keep growing across applications. That's why it's crucial to have a seamless way to send event data from different applications into a central event streaming platform like Apache Kafka.

In today's post, we'll walk through how to easily send messages or events from Stripe into Kafka using Buildable's Node SDK.

Prerequisites

Before we dive in, you'll need a few things to complete this tutorial:

- A Buildable account

- A Stripe account

- Confluent for Apache Kafka

- A Kafka server

Creating a Stripe connection

Inside your Buildable account, we need to create a Stripe connection to push messages from Stripe into Buildable. If you do not have a Buildable account yet, do not worry: you can get started for free.

Navigate to the Connection tab and hit the + New button. Here, you'll see a list of 3rd party apps. Scroll down and select Stripe.

Give your connection a name, e.g., Stripe connection, and type in your Stripe API key.

Next, hit Connect and subscribe to the following events:

- customer.created

- customer.deleted

- customer.updated

Setting up your Buildable Secret Key

We also need to create a Buildable secret key that we will use in our NodeJS app.

To do this, head over to the Settings page. From the left panel, select Secret Keys and create a new one.

Copy your secret key because we'll be using it shortly.

Creating a NodeJS client

Go over to your Confluent account, and in a new or existing cluster, create a new topic called integrations.

To connect to our topic, let's select configure a client and choose NodeJS as the language.

Now, we create a Kafka Cluster API key. Call this key Buildable and download the file.

Notice that the config variables show up. We'll be using them in a bit, so keep them handy.

Adding environment variables

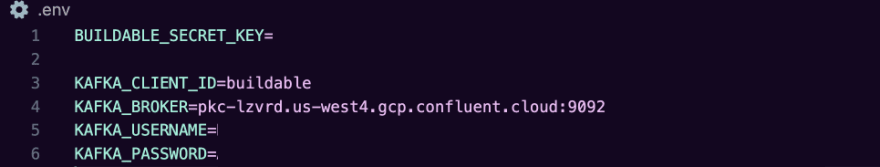

Now, it's time to add in our environment variables. In our NodeJS project, create a .env file and define the environment variables used in our app.

Do you remember the secret key you created earlier in Buildable? We'll paste it here as our BUILDABLE_SECRET_KEY.

You'll also add in the Kafka variables that showed up after you created your Kafka Cluster API key.
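Since a missing or misspelled variable tends to surface later as a confusing authentication error, it can help to check the environment at startup. Below is a minimal sketch of such a check. The variable names are the ones this tutorial's code reads from process.env; the helper name findMissingEnv is hypothetical, and the sketch assumes the .env file has already been loaded (for example with the dotenv package or Node's --env-file flag), since Node does not read .env files on its own by default.

```javascript
// Variable names taken from this tutorial's code; adjust them if your .env differs.
const REQUIRED_VARS = [
  "BUILDABLE_SECRET_KEY",
  "KAFKA_CLIENT_ID",
  "KAFKA_BROKER",
  "KAFKA_USERNAME",
  "KAFKA_PASSWORD",
];

// Hypothetical helper (not part of any SDK): returns the names of the
// required variables that are unset or contain only whitespace.
// Assumption: the .env file was loaded into process.env before this runs.
function findMissingEnv(env = process.env, names = REQUIRED_VARS) {
  return names.filter(
    (name) => !env[name] || String(env[name]).trim() === ""
  );
}
```

Calling findMissingEnv() before creating any clients, and stopping with a clear error when the returned list is not empty, keeps configuration mistakes separate from connection problems.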

Connecting to the Kafka Server

In order to use Kafka, we need to connect to our Kafka server. Let's install the kafkajs npm package by running npm install kafkajs.

We then create a new Kafka instance by specifying the clientId, brokers, ssl and sasl.

Finally, we create and connect to the Kafka producer that helps to publish messages to Kafka.

```javascript
const { Kafka } = require("kafkajs");

// Connect to Kafka
const kafka = new Kafka({
  clientId: process.env.KAFKA_CLIENT_ID,
  brokers: [process.env.KAFKA_BROKER],
  ssl: true,
  sasl: {
    mechanism: "plain",
    username: process.env.KAFKA_USERNAME,
    password: process.env.KAFKA_PASSWORD,
  },
});

// Create the producer
const producer = kafka.producer();

// Connect the producer (await must run inside an async function,
// as it does in main() in the final code)
await producer.connect();
```

Listening to messages from Stripe

Since messages from Stripe are sent to Buildable first, we need to install Buildable's Node SDK into our app by running npm install @buildable/messages.

Next, let's define the exact events we subscribed to in Buildable. If you recall them, they were "customer.created", "customer.deleted" and "customer.updated".

We also configure a listener that listens to the messages from Stripe.

```javascript
// Select events to listen on
const events = ["customer.created", "customer.deleted", "customer.updated"];

const listenerConfig = {
  platform: "stripe",
  label: "my-stripe",
  txKey: "kafka.message.sent",
};

async function main() {
  // Listen to all events
  // (`client` is the Buildable client created with createClient in the final code)
  events.forEach((eventName) =>
    client.on(
      eventName,
      async ({ payload, event }) => {
        // Create Kafka message...
      },
      listenerConfig
    )
  );
}

main().catch(console.error);
```

Publishing messages to Kafka

We're at a good point to set up the function that sends the messages to Kafka. Let's create an async function called main that listens to all our events and publishes them to Kafka.

To publish messages to the Kafka cluster, we need to call the producer.send method with an object containing the topic and messages properties.

```javascript
await producer.send({
  topic: "integrations",
  messages: [
    {
      key: "unique-key",
      value: JSON.stringify({
        event: "customer.created",
        payload: {},
      }),
    },
  ],
});
```

With that, we've completed this tutorial.

Here's the final code:

```javascript
// Init the SDK
const { Kafka } = require("kafkajs");
const { createClient } = require("@buildable/messages");

const client = createClient(process.env.BUILDABLE_SECRET_KEY);

// Create Kafka Client
const kafka = new Kafka({
  clientId: process.env.KAFKA_CLIENT_ID,
  brokers: [process.env.KAFKA_BROKER],
  ssl: true,
  sasl: {
    mechanism: "plain",
    username: process.env.KAFKA_USERNAME,
    password: process.env.KAFKA_PASSWORD,
  },
});

// Select the Kafka topic
const topic = "integrations";

// Select the events to listen on
const events = ["customer.created", "customer.deleted", "customer.updated"];

const listenerConfig = {
  platform: "stripe",
  label: "my-stripe-connection",
  txKey: "kafka.message.sent",
};

async function main() {
  // Create and connect to Kafka producer
  const producer = kafka.producer();
  await producer.connect();

  // Listen to all events
  events.forEach((eventName) =>
    client.on(
      eventName,
      async ({ payload, event }) => {
        // Create Kafka message
        return await producer.send({
          topic,
          messages: [
            {
              key: event.key,
              value: JSON.stringify({
                event: `${listenerConfig.platform}.${listenerConfig.label}.${eventName}`,
                payload,
              }),
            },
          ],
        });
      },
      listenerConfig
    )
  );
}

main().catch(console.error);
```

If you trigger an event like creating a customer in Stripe, you should now see this event show up in Kafka.
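To make the shape of the published message concrete, here is a minimal sketch that builds the same key/value pair as the final code and decodes it the way a consumer would. The helper names buildKafkaMessage and decodeKafkaMessage are hypothetical, and the sample key and payload in the usage note are made up for illustration. The sketch assumes the consumer receives the key and value as Buffers, which is how kafkajs delivers them.

```javascript
// Hypothetical helper: builds one entry for the `messages` array passed to
// producer.send(), using the same "platform.label.eventName" naming as the
// final code in this tutorial.
function buildKafkaMessage({ platform, label, eventName, key, payload }) {
  return {
    key,
    value: JSON.stringify({
      event: `${platform}.${label}.${eventName}`,
      payload,
    }),
  };
}

// Hypothetical helper: turns a consumed message back into a plain object.
// Assumption: `message.key` and `message.value` arrive as Buffers (or strings).
function decodeKafkaMessage(message) {
  const { event, payload } = JSON.parse(message.value.toString());
  return {
    key: message.key ? message.key.toString() : null,
    event,
    payload,
  };
}
```

For example, building a message with platform "stripe", label "my-stripe-connection", and event name "customer.created" produces an event field of "stripe.my-stripe-connection.customer.created", so a consumer reading the integrations topic can tell which connection and event type each record came from.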

Simplify your Kafka streaming process with Buildable

Streaming messages from your 3rd party applications into Kafka via Buildable doesn't have to be complex. In this tutorial, we learnt how to easily send Stripe messages to Kafka, but you can connect any other application to Buildable and stream directly to Kafka.

Hopefully, this example gave you the insights you need to stream messages from any application into Kafka. With our free tier Buildable account, you can begin to stream messages from multiple platforms straight into Kafka, so go ahead and explore!

If you have any questions, please reach out via Twitter or join our Discord community. Till next time, keep building with Buildable!

Original Link: https://dev.to/buildable/streaming-messages-from-stripe-to-kafka-3d9a

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends