
Coding a manga downloader with NodeJS

Overview

I am currently studying French on my own, and one of my strategies is reading manga in French. But French manga is extremely hard to find - legally, that is. You see, the official manga publishers have little to no incentive to translate their manga into French, since the target audience is so small. It is different on the community side, though: manga are often translated into French by the community, and these translations - although technically illegal - are the only way to properly read manga in the language.

How

Alright, alright, we have seen the motivation. But how exactly can we do that? The technique I'm going to use here is the famous and controversial Web Scraping.

Web scraping is the process of collecting structured web data in an automated fashion. It's also called web data extraction. Some of the main use cases of web scraping include price monitoring, price intelligence, news monitoring, lead generation, and market research among many others.

- Colm Kenny

The goal is simple: download the individual images of a given manga from the French website sushi scan and convert them to a PDF so that I can read it on my phone afterwards.

Structure

Our app should follow roughly something like this:

Ask which manga we want to download and where to save it

Connect to sushi scan and load all images from the manga

Download all images from the given chapter/volume

Convert all images to a single pdf file

Done!
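The steps above can be sketched as one small orchestration function. This is only an illustration, not the tool's actual code: `runDownload` and the four step names are hypothetical, and each step is passed in as a function so the overall flow stays readable on its own.

```javascript
// Hypothetical orchestration of the steps listed above.
// Every step is injected, so none of these names come from the real project.
async function runDownload({ ask, collectImageLinks, downloadAll, buildPdf }) {
  // 1. ask which manga to download and where to save it
  const { link, out, name } = await ask();
  // 2. connect to the site and collect the image links
  const links = await collectImageLinks(link);
  // 3. download every image to disk
  const files = await downloadAll(links, out, name);
  // 4. combine the downloaded images into a single PDF
  return buildPdf(files, `./${out}/${name}.pdf`);
}
```

Keeping the steps separate like this also makes it easy to reuse the last one on its own, which is what the "combine images" menu option would need.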

The Interface

Since it's a simple tool that is going to be used only by me, I won't bother implementing a whole UI; a simple CLI (Command Line Interface) will suffice. For that I'll be using the following libraries: InquirerJS for the CLI itself and NanoSpinner for the loading animation.

Main menu

The main menu consists of three options:

- Download manga

- Search mangas

- Combine images into a single pdf

I'll focus only on the first one, since it incorporates all of the others.

Downloading the manga

First, let's ask the user for some information:

```js
await askLink();
await askDestination();
await askName();
```

These three functions are self-explanatory: they ask for the sushi scan manga link, where the user wants the manga to be saved, and what to name the final pdf. I'll show just one of the functions here; if you want to peek at the whole code, you can go to my repo here.

```js
// assumes inquirer is imported and chaplink is declared elsewhere in the module
async function askLink() {
  const promptlink = await inquirer.prompt({
    name: "link",
    type: "input",
    message: "Chapter link",
    // offer the previously entered link as the default answer
    default() {
      return chaplink;
    },
  });
  chaplink = promptlink.link;
}
```

This is a basic example of how to use InquirerJS. Again, I want to stress that I am not using best practices here - far from it - since it's just a simple, personal project. But I highly recommend checking the docs to learn how to use the library to its full potential.
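One small improvement that could be made to a prompt like this is checking the answer before accepting it. The sketch below is not part of the original project: `validateChapterLink` is a hypothetical helper that returns `true` for a usable link and an error message otherwise, which is the convention Inquirer's `validate` question option expects.

```javascript
// Hypothetical validator for the chapter link prompt.
// Returns true when the input is a usable http(s) URL, otherwise a message.
function validateChapterLink(input) {
  let url;
  try {
    // the global URL class throws on anything that is not an absolute URL
    url = new URL(String(input).trim());
  } catch {
    return "Please enter a full URL, including https://";
  }
  if (url.protocol !== "http:" && url.protocol !== "https:") {
    return "Only http(s) links are supported";
  }
  return true;
}
```

Assuming the prompt shown above, it could be wired in by adding `validate: validateChapterLink` to the question object.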

Let's say I want to download the 14th volume of the manga Magi from this link. How does the program scrape the images? To achieve that, I'll be using the awesome scraping library Puppeteer.

First, we create a browser. Puppeteer drives a real (headless) browser, which helps bypass anti-scraping strategies.

```js
const brow = await puppeteer.launch();
```

Now we can create a page, set a viewport, and go to our target page.

```js
const page = await brow.newPage();
await page.setViewport({ width: 414, height: 896 });
// go to our target page (chaplink is the chapter link) and wait for a delay
await page.goto(chaplink, { waitUntil: "load" });
// note: page.waitForTimeout is deprecated and absent from recent Puppeteer releases
await page.waitForTimeout(delay);
```

We loaded our page, awesome! Let's start scraping! The thing is, web scraping - 90% of the time - has to be tailored to a specific task, so my solution, although useful for my use case, is probably worthless for other scenarios. Having said that, I'll just give you a quick look at the code.

```js
const spinFullPage = createSpinner("Loading images...").start();
// set the reading mode to page-by-page instead of full scrolling
await page.select("#readingmode", "single");
// wait for 500 ms
await page.waitForTimeout(500);
// loading complete
spinFullPage.success({ text: "Loaded images." });

const spinImages = createSpinner("Processing images...").start();
// wait until the page selector appears on the screen
await page.waitForSelector("#select-paged", { timeout: 100 });
// to scrape the images we need to know how many pages the chapter/volume has,
// so we get the inner HTML of the page selector and count the values inside it
let innerHTML = await page.$eval("#select-paged", (e) => {
  return e.innerHTML;
});
// this is the length of the chapter/volume (tamanho = "size")
let tamanho = innerHTML.toString().split("value").length - 1;
console.log(`TAMANHO ->> ${tamanho}`);
images = [];
// for each page of the manga, get the image link and store it in images
for (let i = 0; i < tamanho; i++) {
  // atual = "current"
  let atual = await page.$eval("#readerarea > .ts-main-image", (e) => {
    return e.src;
  });
  images.push(atual);
  // push a promise to downloads: the link and where to save it (we got that from the user before)
  downloads.push(downloadImage(images[i], `./${out}/${name}-${i}.jpg`));
  // move to the next page and wait a while before repeating
  await page.select("#select-paged", `${i + 2}`);
  await page.waitForTimeout(100);
}
spinImages.success({ text: "Got all the links." });
```

That's the gist of it. There's a lot more to it than that, but I hope this gives you an idea of how it's done.
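Two pieces of the loop above lend themselves to small standalone helpers, sketched here under a few assumptions. `countPages` is a hypothetical alternative to splitting on the word "value": it counts `<option` tags, assuming the page selector holds one option per page. `downloadImage` is called above but not shown in this article, so the version below is only a guess at what such a helper might look like, using the global `fetch` available in Node 18+ (the `fetchImpl` parameter is an addition for illustration). It is written as CommonJS; in an ES module, swap the `require` line for an `import`.

```javascript
const { writeFile } = require("node:fs/promises");

// Count pages by counting <option> tags rather than occurrences of "value",
// so extra attributes such as data-value do not inflate the result.
function countPages(selectInnerHtml) {
  const options = selectInnerHtml.match(/<option\b/gi);
  return options ? options.length : 0;
}

// Hypothetical stand-in for the downloadImage helper used in the loop.
// fetchImpl defaults to the global fetch; passing another function is optional.
async function downloadImage(url, filePath, fetchImpl = fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Download failed (${response.status}) for ${url}`);
  }
  // read the whole image into memory, then write it to disk
  const bytes = Buffer.from(await response.arrayBuffer());
  await writeFile(filePath, bytes);
  return filePath;
}
```

Since the loop collects promises in `downloads`, something like `await Promise.all(downloads)` would be needed before building the PDF, so that every file is on disk first.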

Creating the PDF

Now that we have all the image links, we just need to download all the images and combine them into a single pdf file. I accomplished that using the library PDFKit. Here's a quick look into how I add an image.

```js
const manga = new PDFDocument({
  autoFirstPage: false,
  size: [960, 1481],
  compress: true,
});
```

I recommend setting autoFirstPage to false, but that's up to you. The size is not always 960x1481; that's just the default I set. Every time I download a manga, I check its size and set the pdf accordingly.

```js
for (let i = 0; i < l; i++) {
  manga
    .addPage({ margin: 0, size: [width, height] })
    .image(`./${out}/${name}-${i}.jpg`, 0, 0, {
      height: manga.page.height,
    });
  // unlinkSync is synchronous and takes no callback
  if (autodelete) fs.unlinkSync(`./${out}/${name}-${i}.jpg`);
}
```

Adding the image is simple enough: we add a page, then add an image to the page, giving it the file path and size. After adding the image, we delete it from disk automatically if autodelete is set.
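The `width` and `height` passed to `addPage` have to come from somewhere, and checking them by hand for every manga gets tedious. As a rough sketch (not the approach used in this project), the dimensions of a JPEG can be read from its frame header. `readJpegSize` is a hypothetical helper, and it assumes the downloaded files really are JPEGs.

```javascript
// Hypothetical helper: read the pixel size of a JPEG from its frame header.
// Takes a Buffer with the file contents and returns { width, height }.
function readJpegSize(buf) {
  // every JPEG starts with the SOI marker FF D8
  if (buf.length < 4 || buf[0] !== 0xff || buf[1] !== 0xd8) {
    throw new Error("Not a JPEG file");
  }
  let offset = 2;
  while (offset + 9 <= buf.length) {
    if (buf[offset] !== 0xff) throw new Error("Corrupt JPEG segment");
    const marker = buf[offset + 1];
    // skip fill bytes (extra FF padding before a marker)
    if (marker === 0xff) {
      offset += 1;
      continue;
    }
    // SOF0..SOF15 carry the image size, except C4 (DHT), C8 (JPG), CC (DAC)
    const isFrameHeader =
      marker >= 0xc0 && marker <= 0xcf &&
      marker !== 0xc4 && marker !== 0xc8 && marker !== 0xcc;
    if (isFrameHeader) {
      // layout: FF Cx, length (2 bytes), precision (1), height (2), width (2)
      return {
        height: buf.readUInt16BE(offset + 5),
        width: buf.readUInt16BE(offset + 7),
      };
    }
    // TEM and RSTn markers stand alone and have no length field
    if (marker === 0x01 || (marker >= 0xd0 && marker <= 0xd7)) {
      offset += 2;
      continue;
    }
    // any other segment: skip the marker plus its declared length
    offset += 2 + buf.readUInt16BE(offset + 2);
  }
  throw new Error("No frame header found");
}
```

With the file naming used above, something like `readJpegSize(fs.readFileSync(`./${out}/${name}-0.jpg`))` could supply the values for `size: [width, height]`.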

Conclusion

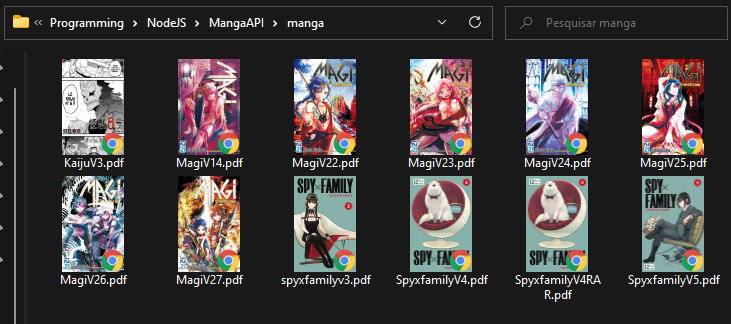

We have achieved our goal: we downloaded a manga from a website and turned it into a single pdf file! This is extremely helpful for my French studies, and I hope it inspires you to do something similar. I'll end here. As always, stay curious!

P.S.: You can subscribe to my YouTube channel for similar content, and find my other social media on my website.

Original Link: https://dev.to/reinaldoassis/coding-a-manga-downloader-with-nodejs-3554
