I Wrote a Script to Download Every NFT

By now, you might have learned how to right click and save or screenshot NFTs. But compared to what I'll show you in this post, that'll just look like petty theft. I'm going to show you how to create a script to download tens of thousands of NFTs in just minutes.

Memes aside, I thought this was a fun project to get more familiar with Node.js and Puppeteer, a library you can use for web scraping and more. And if that sounds interesting, read on. If you want to follow along better, here's the GitHub with the code.

(If you'd like to watch this post in video format, click here.)

Casing the Joint

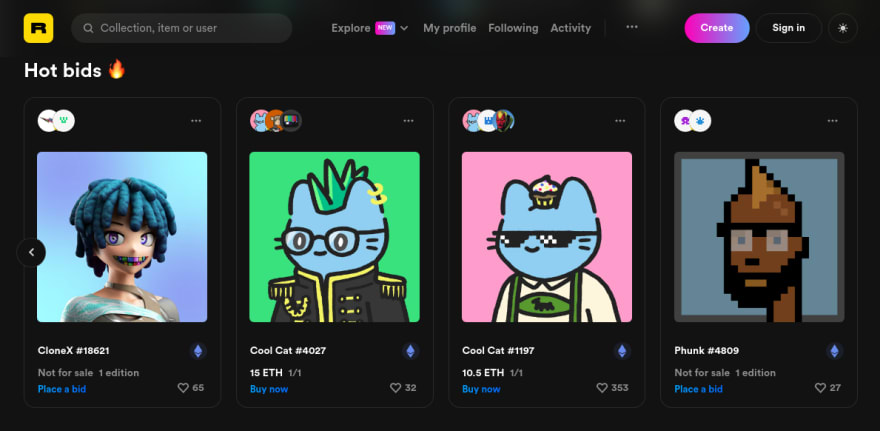

We're going to be lifting the NFTs from Rarible, one of the most popular NFT marketplaces.

Here you can buy JPEG images of monkeys, anime girls, cats, and more. But what we're after are the monkeys, some of the most sought-after NFTs. And I don't want to just save one or two of them--I want ALL of them. To be more precise, all 9,800 of them in this collection.

Installing Dependencies

I'm going to be writing this script in Node.js because I never learned a real programming language. And we're going to be using a library called Puppeteer to lift the NFTs. Puppeteer basically starts up a remote-controlled version of Chromium that we can program to do our bidding. Let's initialize the project and install Puppeteer.

    npm init -y
    npm install puppeteer

Writing the Script

Let's create our script. I've created a file called index.js and added the following:

    const puppeteer = require("puppeteer");
    const fs = require("fs");
    const path = require("path");

    const url = "https://rarible.com/boredapeyachtclub";

    (async () => {
      console.log("Loading...");

      // headless: false shows the Chromium window; set it to true to run without one.
      const browser = await puppeteer.launch({ headless: false });
      const page = await browser.newPage();

      await page.goto(url);
      await page.setViewport({
        width: 1200,
        height: 800,
      });

      // The snippets in the following sections go here, inside this async function.
    })();

Let's explain what's going on here. First, we're importing Puppeteer and a couple of Node.js libraries that will allow us to save files to our local machine.

Next, we're setting the URL of the page that we're going to be lifting the images from. This can be any Rarible collection page.

Finally, we're starting the browser with Puppeteer, navigating to the URL, and setting the viewport dimensions. The await keywords will ensure that the previous command will finish before the next one runs. This is all from the Puppeteer documentation, so it's not rocket science.
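To see what await buys us, here's a small sketch that needs no browser. The step helper is hypothetical: it only simulates slow work with a timer and is not part of Puppeteer.

```javascript
// Hypothetical helper: pretends to do slow work, then records that it finished.
function step(name, ms, log) {
  return new Promise((resolve) => {
    setTimeout(() => {
      log.push(name);
      resolve(name);
    }, ms);
  });
}

// With await, each step finishes before the next one starts.
async function inOrder() {
  const log = [];
  await step("launch", 30, log);
  await step("goto", 10, log);
  return log;
}

// Without await, both timers start at once and the faster one finishes first.
async function racing() {
  const log = [];
  const launch = step("launch", 30, log);
  const goto = step("goto", 10, log);
  await Promise.all([launch, goto]);
  return log;
}

inOrder().then((log) => console.log("with await:", log));
racing().then((log) => console.log("without await:", log));
```

In the real script, skipping an await would mean asking for a new page before the browser has finished launching, which is why every Puppeteer call above is awaited.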

If this is all correct, then when we run the script with node index.js, it should open up a Chromium window and navigate to the URL.

Looks good so far. But there's more that needs to be done.

Getting the Name of the Collection

We want to grab the name of the collection that we're downloading and create a folder to deposit all of our loot into.

We can get the name from the page title. However, it doesn't load in the name of the collection until the entire page has been loaded. So, we have to wait until the React app has been completely loaded.

I opened up the devtools and found a class name that we can hook onto. There's a div with the class of ReactVirtualized__Grid that is the grid that holds all the images. Once that's loaded, the name of the collection has already been loaded in the page title. All we need to do is wait for this element to load, then we can proceed.

    await page.waitForSelector(".ReactVirtualized__Grid");

    const pageTitle = await page.title();
    // String methods are synchronous, so no await is needed here.
    const collection = pageTitle.split("-").shift().trim();

    // Create the folder next to the script, where the download step writes its files.
    const collectionDir = path.resolve(__dirname, collection);
    if (!fs.existsSync(collectionDir)) {
      fs.mkdirSync(collectionDir);
    }

We're using the Puppeteer method waitForSelector to hold off until this element is loaded. After that, we grab the page title, do a bit of JavaScript string manipulation to get the right value, and finally create the directory with Node (if it hasn't already been created).
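If you want to try the title handling without opening a browser, here's a rough standalone sketch. The function names and the sample title are made up for illustration, and the sanitizing step is an extra precaution of mine, not something the script above does.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Take everything before the first "-" in the page title and trim whitespace.
// Assumes the title looks like "<collection> - <site name>".
function collectionFromTitle(pageTitle) {
  return pageTitle.split("-").shift().trim();
}

// Replace characters that are awkward or invalid in folder names.
function toFolderName(name) {
  return name.replace(/[\\/:*?"<>|]/g, "_");
}

// Create the folder (no error if it already exists) and return its full path.
function ensureFolder(baseDir, name) {
  const dir = path.join(baseDir, toFolderName(name));
  fs.mkdirSync(dir, { recursive: true });
  return dir;
}

// Usage with a made-up title, writing into the OS temp directory.
const collectionName = collectionFromTitle("Bored Ape Yacht Club - Example Marketplace");
const folder = ensureFolder(os.tmpdir(), collectionName);
console.log(folder);
```

Passing recursive: true to mkdirSync makes the separate existsSync check unnecessary, since it doesn't throw when the folder is already there.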

We now have a folder to put all the images in! Let's fill it up.

Downloading the Images

This is the meat of the script. What we want to do is get all the resources that are downloaded to the page. That includes HTML, CSS, JS, and images. We only want the images, and only the NFTs at that, not any logos, avatars, or other images.

If you look in the network tab of the dev tools, you can see all the images being loaded. You can also see that all of the NFTs are loaded in with a path containing t_preview. None of the other images on this page are from the same path. So, if we filter for images with these URLs, we can single out the NFTs from all the noise.

    let currentImage = 1;

    page.on("response", async (response) => {
      const imageUrl = response.url();

      // Only keep images whose URL marks them as NFT previews.
      if (response.request().resourceType() !== "image") return;
      if (!imageUrl.includes("t_preview")) return;

      try {
        const file = await response.buffer();
        const fileName = imageUrl.split("/").pop() + ".avif";
        const filePath = path.resolve(__dirname, collection, fileName);

        // end() writes the buffer and then closes the file handle.
        fs.createWriteStream(filePath).end(file);

        console.log(`${collection} #${currentImage} saved to ${collection}/${fileName}`);
        currentImage++;
      } catch (err) {
        console.error(`Could not save ${imageUrl}: ${err.message}`);
      }
    });

There's a lot going on here, but we're getting all the resources, selecting the images, then only getting the ones we want. After that, we use some string manipulation to get the filename and save them as AVIF files (a next-gen image format). Then we're saving these to the new folder we created with some Node.js methods. Finally, we're just logging to console the image that's just been downloaded and how many images have already been downloaded.
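The filtering and naming logic is easy to check on its own, away from the browser. Below is a minimal sketch with made-up URLs. The helper names are hypothetical, and dropping the query string before building the file name is my own tweak rather than what the script above does.

```javascript
// Decide whether a response is one of the NFT preview images.
// The "t_preview" marker comes from the post; "image" is the resource type
// string the script above compares against.
function isNftPreview(resourceType, imageUrl) {
  return resourceType === "image" && imageUrl.includes("t_preview");
}

// Build a file name from the last path segment, ignoring any query string.
function fileNameFromUrl(imageUrl) {
  const { pathname } = new URL(imageUrl);
  const lastSegment = pathname.split("/").filter(Boolean).pop() || "image";
  return lastSegment + ".avif";
}

// Made-up URLs, for illustration only.
const samples = [
  ["image", "https://img.example.com/t_preview/abc123?size=small"],
  ["image", "https://img.example.com/logo.png"],
  ["script", "https://img.example.com/t_preview/app.js"],
];

const kept = samples
  .filter(([type, url]) => isNftPreview(type, url))
  .map(([, url]) => fileNameFromUrl(url));

console.log(kept);
```

Only the first sample survives: the logo lacks the t_preview marker, and the script file isn't an image.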

Phew! That works, and we're finally downloading some images. But the images are being lazy-loaded. That means they aren't being loaded until you actually scroll down the page. That's great from a user perspective, as the users are only loading in images they can actually see, but not so much from ours. Let's make a function to scroll down the page for us, and click the "Load more" button that keeps you from seeing all the images.

The Autoscroller

In order to start scrolling, we just want to run some JavaScript on the page to scroll it. We can do that with Puppeteer's evaluate method. This will run some JavaScript on the page, same as if you had written it in the dev tools console.

    async function autoScroll(page) {
      await page.evaluate(async () => {
        await new Promise((resolve) => {
          let totalHeight = 0;
          let distance = 500;
          let timer = setInterval(() => {
            let scrollHeight = document.body.scrollHeight;
            window.scrollBy(0, distance);
            totalHeight += distance;

            if (totalHeight >= scrollHeight) {
              clearInterval(timer);
              resolve();
            }
          }, 1000);
        });
      });
    }

This sets a timer that jumps down the page 500 pixels every second, so we're scrolling at 500px/second. A little slow, but if I make it faster it could scroll too fast and skip saving some images. Not good. Then, with totalHeight, we're saving how much distance we've scrolled already and comparing it to the total height of the page, scrollHeight. Because scrollHeight is read again on every tick, the target moves further away as lazy-loaded content makes the page taller. Once we're at the bottom, we're going to stop the setInterval and resolve the promise. No more scrolling.
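To get a feel for when the scrolling stops, here's a dry run of the same stopping rule with no browser involved. The countScrollSteps function and its getScrollHeight callback are hypothetical stand-ins for the timer and for reading document.body.scrollHeight.

```javascript
// Dry run of the autoscroll stopping rule.
// getScrollHeight receives how far we've scrolled so far, so it can pretend
// that more content has loaded and the page has grown.
function countScrollSteps(getScrollHeight, distance, maxSteps = 10000) {
  let totalHeight = 0;
  let steps = 0;
  while (steps < maxSteps) {
    const scrollHeight = getScrollHeight(totalHeight);
    totalHeight += distance;
    steps++;
    if (totalHeight >= scrollHeight) break;
  }
  return steps;
}

// A fixed 2,000px page is done after four 500px jumps.
const fixedPage = countScrollSteps(() => 2000, 500);

// A page that starts at 2,000px and grows to 4,000px once we pass 1,000px
// (as if lazy-loaded rows were appended) takes eight jumps.
const growingPage = countScrollSteps(
  (scrolled) => (scrolled >= 1000 ? 4000 : 2000),
  500
);

console.log(fixedPage, growingPage);
```

At one jump per second, those step counts are also the number of seconds the real function would spend scrolling, which is why a page with thousands of images takes a while.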

However, once we're at the bottom, we still need to click the "Load more" button.

We need to tell Puppeteer to run some JavaScript on the page to find all the buttons and narrow it down to the button with the text "Load more". There's no unique ID or class on this button, so we have to find it like this. Finally, we click on the button with help from Puppeteer. Finally finally, we resume the autoscroll function now that there are no more buttons to click.

    await autoScroll(page);

    await page.evaluate(() => {
      const elements = [...document.querySelectorAll("button")];
      const targetElement = elements.find((e) => e.innerText.includes("Load more"));
      targetElement && targetElement.click();
    });

    await autoScroll(page);

After all that, we can close the browser once we've gotten to the bottom of this page containing 10,000 NFTs.

    await browser.close();

Running the Script

That's it! We can now run the script and see if it works.

    node index.js

Actually, this will take a while because we have to scroll down and save 10,000 images. Grab a cup of coffee or something and stretch your legs while you're waiting.

...

All right, we're back. Let's take a look at what we have here...
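One quick way to check the haul is to count the files in the collection folder. This is a small sketch and not part of the original script: countImages is a hypothetical helper, and the demo runs against a throwaway folder so it works anywhere.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Count files in a folder that end with the given extension.
function countImages(dir, ext = ".avif") {
  return fs
    .readdirSync(dir)
    .filter((name) => name.toLowerCase().endsWith(ext)).length;
}

// Demo against a temporary folder with two fake images and one other file.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), "nft-demo-"));
fs.writeFileSync(path.join(demoDir, "1.avif"), "");
fs.writeFileSync(path.join(demoDir, "2.avif"), "");
fs.writeFileSync(path.join(demoDir, "notes.txt"), "");

const total = countImages(demoDir);
console.log(`${total} images saved`);
```

Pointing countImages at the real collection folder instead of demoDir tells you whether the scroll was slow enough to catch everything.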

What a haul! We now have millions of dollars worth of NFTs on our computer. Not bad for a day's work. What am I going to do with all of these monkey pictures??

I've put the code on GitHub if you want to pull an NFT heist like me--or just play around with Puppeteer some more. It's pretty fun.

That's all for now. I think I'm just going to be sitting over here counting my fat stacks of NFTs.

Original Link: https://dev.to/ericmurphyxyz/i-wrote-a-script-to-download-every-nft-5fgl

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
