Manage webhooks at scale with AWS Serverless

Webhooks remain a preferred way for many organizations to communicate with third-party services. Because webhook calls are event-driven, a serverless architecture is a natural fit for a webhook management system. In this post, I discuss how to build such a system with AWS Serverless.

In an Applicant Tracking System (ATS), whenever a candidate-related event occurs (e.g., a candidate is created, applies for a job, or changes state), the system may need to call a registered webhook.

This implementation has three parts:

- Webhook registration.

- Webhook call for the given candidate event.

- View webhook call history.

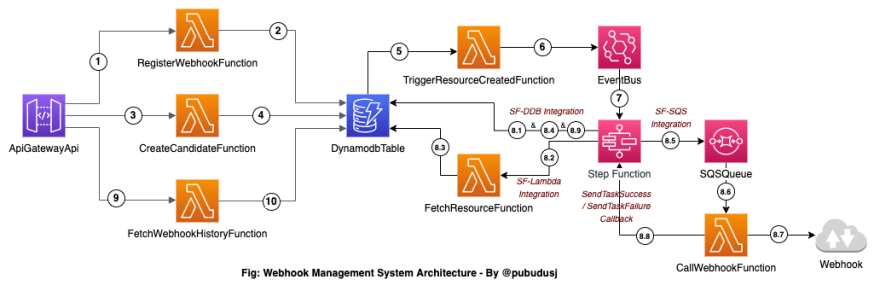

Architecture

How it works

Webhook Registration

- An API exposed through API Gateway proxies requests to a Lambda function (RegisterWebhookFunction).

- The API accepts a companyId, a URL, and an eventType (e.g., candidate.created).

- The Lambda function generates a hash token that is later used to validate incoming webhook calls. This data is saved in a DynamoDB table, and the generated hash token is returned in the response.
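As a rough illustration of the registration step, here is a minimal Python sketch of what a RegisterWebhookFunction-style handler might do. It assumes the "hash token" is a random secret from Python's `secrets` module; the `PK`/`SK` key layout, the `COMPANY#`/`WEBHOOK#` prefixes, and the helper names are hypothetical, and the repository's actual schema may differ. The DynamoDB write is left as a comment so the sketch runs without AWS credentials.

```python
import json
import secrets
import uuid
from datetime import datetime, timezone


def build_webhook_item(company_id: str, url: str, event_type: str) -> dict:
    """Build a hypothetical DynamoDB item for one webhook registration.

    The PK/SK layout is an assumption for illustration only.
    """
    # 32 random bytes, hex-encoded (64 chars); used later as the HMAC signing key.
    signing_token = secrets.token_hex(32)
    return {
        "PK": f"COMPANY#{company_id}",
        "SK": f"WEBHOOK#{event_type}",
        "webhookId": str(uuid.uuid4()),
        "url": url,
        "eventType": event_type,
        "signingToken": signing_token,
        "createdAt": datetime.now(timezone.utc).isoformat(),
    }


def register_webhook(body: dict) -> dict:
    """Validate the request body and return an API Gateway proxy-style response."""
    missing = [k for k in ("companyId", "url", "eventType") if not body.get(k)]
    if missing:
        return {"statusCode": 400, "body": json.dumps({"error": f"missing fields: {missing}"})}
    item = build_webhook_item(body["companyId"], body["url"], body["eventType"])
    # In a deployed function the item would be written with something like
    # boto3.resource("dynamodb").Table(name).put_item(Item=item).
    return {"statusCode": 201, "body": json.dumps({"token": item["signingToken"]})}
```

In this sketch the token appears only in the registration response, so the receiving service needs to store it in order to verify later calls.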

Call webhook

- To simulate a 'candidate.created' event, I use an API endpoint that accepts a companyId and other candidate profile data.

- This data is received by the CreateCandidate Lambda function and saved to the same DynamoDB table.

- Using an API endpoint to create the candidate is not required; the only thing that matters here is that the candidate data is added to the DynamoDB table.

- Once the candidate data is saved in the DynamoDB table, the TriggerResourceCreated function is invoked through DynamoDB Streams.

- The TriggerResourceCreated function has a filter on the DynamoDB stream that checks the eventName and the resource type. In this example, it checks whether the eventName is "INSERT" and the resource type is "candidate". If so, the Lambda function is invoked and publishes an event to the EventBridge event bus.

- A Step Function is configured to start based on an EventBridge rule. The EventBridge event's detail object becomes the input for the Step Function execution.
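The filtering and publishing steps can be sketched as follows. `FILTER_CRITERIA` has the shape Lambda expects for an event source mapping filter (a list of filters whose `Pattern` is a JSON string), and each entry returned by `handler` has the fields accepted by EventBridge `PutEvents`. The `type`, `companyId`, and `id` attribute names, the `webhooks.candidates` source, and the bus name are assumptions for illustration. `matches_filter` is only a local stand-in that mirrors this one pattern so the sketch runs without AWS; in the deployed stack, Lambda applies the filter before the function is invoked.

```python
import json

# Event source mapping filter: only INSERTs of items whose (assumed)
# "type" attribute is the string "candidate".
STREAM_FILTER_PATTERN = {
    "eventName": ["INSERT"],
    "dynamodb": {"NewImage": {"type": {"S": ["candidate"]}}},
}
FILTER_CRITERIA = {"Filters": [{"Pattern": json.dumps(STREAM_FILTER_PATTERN)}]}

EVENT_BUS_NAME = "webhooks-bus"  # hypothetical bus name


def matches_filter(record: dict) -> bool:
    """Local stand-in mirroring STREAM_FILTER_PATTERN, for testing only."""
    image = record.get("dynamodb", {}).get("NewImage", {})
    return record.get("eventName") == "INSERT" and image.get("type", {}).get("S") == "candidate"


def to_eventbridge_entry(record: dict) -> dict:
    """Turn a DynamoDB stream record into a PutEvents entry."""
    image = record["dynamodb"]["NewImage"]
    detail = {
        "companyId": image["companyId"]["S"],
        "eventType": "candidate.created",
        "resourceType": image["type"]["S"],
        "resourceId": image["id"]["S"],
    }
    return {
        "Source": "webhooks.candidates",  # hypothetical source name
        "DetailType": "candidate.created",
        "Detail": json.dumps(detail),
        "EventBusName": EVENT_BUS_NAME,
    }


def handler(event: dict, context=None) -> list:
    entries = [to_eventbridge_entry(r) for r in event.get("Records", []) if matches_filter(r)]
    # A deployed version would call boto3.client("events").put_events(Entries=entries);
    # PutEvents accepts at most 10 entries per call.
    return entries
```

Because the filter already drops non-matching records before invocation, the local check is redundant in production; it is here only to make the behavior testable.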

Within the Step Function

- The Step Function input includes only the companyId, eventType, resourceType (e.g., candidate), and resourceId (e.g., candidateId).

- First, the Step Function uses the DynamoDB integration to get the webhook by companyId and eventType.

- If the webhook exists, the data goes into a transform state; otherwise, the execution is skipped.

- Once the webhook data is transformed, the next step fetches the resource data for the given resourceId and resourceType, using the FetchResourceData Lambda function.

- If the resource data does not exist, the execution is marked as failed.

- If the resource data exists, the CreateWebhookCall Lambda function is triggered to prepare the webhook call. It creates a record in the DynamoDB table with the webhook payload, the validation token, and the status 'pending'.

- The resource data, along with the webhook data, is then sent to an SQS queue.

- The CallWebhook function consumes this SQS queue and calls the webhooks with the resource data in the payload. A verification token is also included so that the receiving end can verify the authenticity of the webhook call; it is derived from the hash token generated when the webhook was registered.

- In the state machine, this step has retries enabled, so it retries up to 3 times if an error occurs, with a 1-minute wait between retries.

- If the webhook call is still unsuccessful after all the retries, the execution is notified through the sendTaskFailure callback, along with additional data about why it failed.

- If the webhook call succeeds, the execution is notified through the sendTaskSuccess callback.

- In either case, the next step uses a DynamoDB integration to update the webhook call record with its status (success or failed) and any additional information from the webhook call.
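The callback-and-retry part of the workflow can be sketched as an Amazon States Language task state, written here as a Python dict. It assumes the SQS `sendMessage.waitForTaskToken` service integration, which pauses the state until something calls `SendTaskSuccess` or `SendTaskFailure` with the task token passed in the message body. The queue URL placeholder, the `$.webhook`/`$.resource` paths, the `WebhookCallFailed` error name, and the `UpdateWebhookCallStatus` state name are hypothetical. `callback_args` shows how a CallWebhook-style consumer might choose a callback; the returned keyword arguments match `send_task_success` and `send_task_failure` on boto3's Step Functions client.

```python
import json
from typing import Optional, Tuple

CALL_WEBHOOK_STATE = {
    "Type": "Task",
    # Pause until SendTaskSuccess/SendTaskFailure is called with the task token.
    "Resource": "arn:aws:states:::sqs:sendMessage.waitForTaskToken",
    "Parameters": {
        "QueueUrl": "${WebhookQueueUrl}",  # placeholder substituted at deploy time (assumption)
        "MessageBody": {
            "taskToken.$": "$$.Task.Token",
            "webhook.$": "$.webhook",
            "resource.$": "$.resource",
        },
    },
    "ResultPath": "$.callResult",
    # Up to 3 retries, a constant 60 seconds apart (BackoffRate 1.0).
    "Retry": [{
        "ErrorEquals": ["States.ALL"],
        "IntervalSeconds": 60,
        "MaxAttempts": 3,
        "BackoffRate": 1.0,
    }],
    # Once retries are exhausted, keep the error and continue to the update step.
    "Catch": [{"ErrorEquals": ["States.ALL"], "ResultPath": "$.error",
               "Next": "UpdateWebhookCallStatus"}],
    "Next": "UpdateWebhookCallStatus",
}


def callback_args(task_token: str, status_code: Optional[int],
                  error_text: str = "") -> Tuple[str, dict]:
    """Pick the Step Functions callback for one webhook attempt.

    Deployed usage (assumption): getattr(boto3.client("stepfunctions"), name)(**kwargs)
    """
    if status_code is not None and 200 <= status_code < 300:
        output = json.dumps({"status": "success", "statusCode": status_code})
        return "send_task_success", {"taskToken": task_token, "output": output}
    cause = json.dumps({"statusCode": status_code, "error": error_text})
    return "send_task_failure", {"taskToken": task_token,
                                 "error": "WebhookCallFailed", "cause": cause}
```

With this arrangement, each `SendTaskFailure` fails the task state and the `Retry` block re-runs it, which re-sends the SQS message. When the retries run out, `Catch` routes to the update step, so the call record is updated whether the webhook succeeded or failed.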

Get webhook call history

- An API endpoint proxies to a Lambda function that fetches data from the DynamoDB table for a specific companyId.

- A list of all the webhook calls made will be returned.
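For the history endpoint, a minimal sketch of the request a Lambda function might send to DynamoDB is below. It assumes webhook call records share a partition key per company and use a sort key prefix of `WEBHOOKCALL#` followed by a sortable timestamp; the table name, key names, and prefixes are hypothetical. The dictionary matches the parameters of the low-level boto3 DynamoDB client's `query` method.

```python
import json
from typing import Optional


def build_history_query(table_name: str, company_id: str, limit: int = 50,
                        start_key: Optional[dict] = None) -> dict:
    """Build low-level DynamoDB Query parameters for one company's webhook calls.

    Key names and prefixes are assumptions for illustration.
    """
    params = {
        "TableName": table_name,
        "KeyConditionExpression": "PK = :pk AND begins_with(SK, :prefix)",
        "ExpressionAttributeValues": {
            ":pk": {"S": f"COMPANY#{company_id}"},
            ":prefix": {"S": "WEBHOOKCALL#"},
        },
        # Newest first, assuming the sort key ends with an ISO-8601 timestamp.
        "ScanIndexForward": False,
        "Limit": limit,
    }
    if start_key:
        params["ExclusiveStartKey"] = start_key
    return params


def handler(event: dict, context=None) -> dict:
    """API Gateway proxy handler: validate companyId and build the query."""
    company_id = (event.get("queryStringParameters") or {}).get("companyId")
    if not company_id:
        return {"statusCode": 400, "body": json.dumps({"error": "companyId is required"})}
    params = build_history_query("WebhooksTable", company_id)  # hypothetical table name
    # In a deployed function: response = boto3.client("dynamodb").query(**params)
    return {"statusCode": 200, "body": json.dumps(params)}
```

Under the assumed sort key, `ScanIndexForward=False` returns the newest calls first, and the `LastEvaluatedKey` from one response can be passed back as `start_key` to page through a long history.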

How to set up

You can set this up in your own AWS account using AWS SAM. To test or demo the application, I have also included a single-page app built with Vue.js (in the frontend directory).

- Source code for this application is available at https://github.com/pubudusj/webhook_management

- To set up the backend, run `sam build && sam deploy -g` within the backend directory.

- Once the backend is set up, copy the ApiBaseUrl value into the frontend directory's .env file as the value of the `VUE_APP_API_BASE_URL` environment variable.

- Run `npm run serve` to test this on your local machine, or `npm run build --production` to output the build.

Demo

You can see this functionality in action at https://webhooks.pubudu.dev

You can create a webhook by providing a URL and a companyId, and then create a user for the same companyId. If you then search the history with the same companyId, you will see a record with 'pending' status. If you search again, you can see that the status has changed.

Please note: Because failed webhook calls are retried, it takes about 2 to 3 minutes for a webhook call to be marked as failed. Successful calls are updated almost instantly.

Please note: A webhook call can be verified by matching the token value in the payload against the output of `hmacSHA256(candidateId + createdAt, signingToken)`. Here, signingToken is the hash generated when the webhook was created.
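On the receiving side, the verification described in this note could look like the Python sketch below. It assumes the HMAC digest is hex-encoded and that the payload carries `candidateId`, `createdAt`, and `token` fields; the actual encoding and field names in the project may differ. It uses `hmac.compare_digest` rather than `==` so that the comparison time does not reveal where the strings first differ.

```python
import hashlib
import hmac


def sign(candidate_id: str, created_at: str, signing_token: str) -> str:
    """hmacSHA256(candidateId + createdAt, signingToken), hex-encoded (encoding assumed)."""
    message = (candidate_id + created_at).encode("utf-8")
    return hmac.new(signing_token.encode("utf-8"), message, hashlib.sha256).hexdigest()


def verify(payload: dict, signing_token: str) -> bool:
    """Recompute the token from the payload and compare it in constant time."""
    expected = sign(payload["candidateId"], payload["createdAt"], signing_token)
    # Both arguments are ASCII strings here; compare_digest rejects non-ASCII str input.
    return hmac.compare_digest(expected, payload.get("token", ""))
```

The receiver would call `verify(payload, stored_token)` with the token it saved at registration time and reject the request when it returns `False`.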

Key points/Lessons learned

- Here, I used a DynamoDB single-table design, so many events are generated into the DynamoDB stream. Fortunately, with the recently added support for filtering Lambda event sources, including DynamoDB Streams, the Lambda function now receives only the events that match a specific pattern. (Previously, this had to be handled within the Lambda function.)

- In the Step Function's DynamoDB integration, GetItem returns the data with all the data type keys (such as S and N). There is no way to filter these out within the Step Function unless you know the exact data and object structure, so here I used a Lambda function to fetch data from DynamoDB instead of the direct integration.

- Since this system relies on the availability of external webhook URLs, retries are used within the Step Function. Setting up retries and the wait time between them is quite easy.

- Here, I used Step Functions Workflow Studio to create and export the state machine.

- For the Step Functions-DynamoDB integrations, I used the native optimized integration instead of the newer AWS SDK integration. Both should give the same performance.

- For demo purposes, I created the event bus within the same stack; however, in a real scenario, this could be a common event bus shared by other services.
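To illustrate the GetItem point above: DynamoDB returns attributes in its typed JSON form, such as `{"S": "cand-1"}` or `{"N": "30"}`, which is why a Lambda function was used to fetch the data instead of the direct integration. The sketch below is a small standard-library converter for the common types; in a real function, boto3's `TypeDeserializer` (in `boto3.dynamodb.types`) does the same job, and returning `Decimal` for numbers mirrors its behavior. Binary types are intentionally not handled here.

```python
from decimal import Decimal
from typing import Any


def from_dynamodb(value: dict) -> Any:
    """Convert one typed DynamoDB attribute value (e.g. {"S": "x"}) to a plain Python value."""
    tag, inner = next(iter(value.items()))
    if tag in ("S", "BOOL"):
        return inner
    if tag == "N":
        return Decimal(inner)  # numbers arrive as strings
    if tag == "NULL":
        return None
    if tag == "M":
        return {k: from_dynamodb(v) for k, v in inner.items()}
    if tag == "L":
        return [from_dynamodb(v) for v in inner]
    if tag == "SS":
        return set(inner)
    if tag == "NS":
        return {Decimal(n) for n in inner}
    raise ValueError(f"unsupported DynamoDB type: {tag}")


def unmarshal_item(item: dict) -> dict:
    """Convert a whole GetItem 'Item' map into a plain dict."""
    return {name: from_dynamodb(attr) for name, attr in item.items()}
```

A fetch function would typically apply `unmarshal_item` to the `Item` field of the GetItem response before returning it to the state machine.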

Feedback is welcome

Please try this and let me know your thoughts.

Keep building! Keep sharing!

Original Link: https://dev.to/aws-builders/manage-webhooks-at-scale-with-aws-serverless-fof

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends