Softmax Activation Function with Python

The softmax function is a generalization of the sigmoid (logistic) function to multiple dimensions. It converts a vector of real numbers into a vector of probabilities, each between 0 and 1 and all summing to 1. It is commonly used as the activation function of the output layer in multi-class classification problems in machine learning, and its output is interpreted as the probability of each class.
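The link to the sigmoid can be checked directly. For two classes with scores [z, 0], the first softmax output is e^z / (e^z + e^0) = 1 / (1 + e^(-z)), which is exactly sigmoid(z). A small sketch, assuming NumPy; the helper names `sigmoid` and `softmax` are illustrative:

```python
import numpy as np

def sigmoid(z):
    # Logistic sigmoid: 1 / (1 + e^(-z))
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Plain softmax: exponentiate each score, then normalize by the sum
    exp_z = np.exp(z)
    return exp_z / np.sum(exp_z)

# Two-class case with scores [z, 0]: softmax(...)[0] should equal sigmoid(z)
for z in [-2.0, 0.0, 0.5, 3.0]:
    p = softmax(np.array([z, 0.0]))
    print(z, p[0], sigmoid(z))
```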

The mathematical expression of the softmax function is:

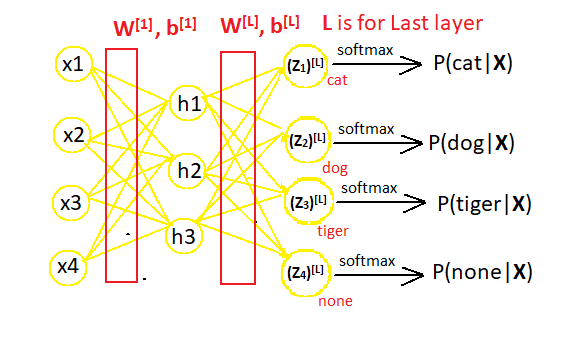
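Written out in LaTeX, the standard softmax for a score vector $z = (z_1, \dots, z_K)$ over $K$ classes is given below; the symbol names follow a common convention and may differ from the ones used in the image.

$$\sigma(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$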

Let's see how the softmax function works with an example. Consider a neural network that classifies a given image as a cat, a dog, a tiger, or none of these. Let X be the feature vector (i.e., X = [x1, x2, x3, x4]).

We normally use the softmax activation function in the last layer of a neural network, as shown in the figure below.

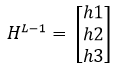

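In the neural network shown above, we have

$$Z^{[L]} = W^{[L]} A^{[L-1]} + b^{[L]}$$

where

$A^{[L-1]}$ is the $m \times 1$ matrix of values calculated at layer $L-1$,

$W^{[L]}$ is the $n \times m$ weight matrix, where $m$ is the total number of nodes in layer $L-1$ and $n$ is the number of nodes in the output layer $L$ (for this example, $m = 3$ and $n = 4$),

and $b^{[L]}$ is the $n \times 1$ bias matrix ($n = 4$ in this example).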

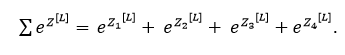

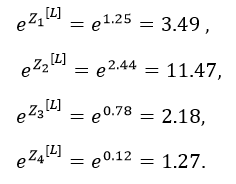
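The output-layer computation can be sketched in a few lines of NumPy. This assumes the common convention Z[L] = W[L] A[L-1] + b[L] with W of shape (n, m); the activations, weights, and biases below are random, hypothetical placeholders rather than values from a trained network.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

m, n = 3, 4  # nodes in layer L-1, nodes in output layer L (cat, dog, tiger, none)

# Hypothetical values: activations of layer L-1, weights and biases of layer L
A_prev = rng.normal(size=(m, 1))  # shape (m, 1)
W = rng.normal(size=(n, m))       # shape (n, m)
b = rng.normal(size=(n, 1))       # shape (n, 1)

# Linear step of the output layer
Z = W @ A_prev + b                # shape (n, 1)

# Softmax over the n output scores
exp_Z = np.exp(Z)
probs = exp_Z / np.sum(exp_Z)

print(Z.shape, probs.ravel(), probs.sum())
```

The shapes are the point of the sketch: an (n, m) matrix times an (m, 1) column gives one score per output class, and softmax turns those n scores into n probabilities.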

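Now we calculate the exponential of each element of the matrix $Z^{[L]} = [z_1, z_2, z_3, z_4]^T$:

$$e^{Z^{[L]}} = [e^{z_1}, e^{z_2}, e^{z_3}, e^{z_4}]^T$$

And their sum:

$$\sum_{j=1}^{4} e^{z_j}$$

The probabilities of the different classes given input X are then calculated as follows:

$$P(\text{class } i \mid X) = \frac{e^{z_i}}{\sum_{j=1}^{4} e^{z_j}}, \quad i = 1, \dots, 4$$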
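A one-line check shows why these values form a valid probability distribution. Writing $z_1, \dots, z_4$ for the elements of $Z^{[L]}$ and $p_i = e^{z_i} / \sum_{j=1}^{4} e^{z_j}$, each $p_i$ is positive because $e^{z_i} > 0$, and the $p_i$ sum to one:

$$\sum_{i=1}^{4} p_i = \frac{\sum_{i=1}^{4} e^{z_i}}{\sum_{j=1}^{4} e^{z_j}} = 1$$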

Based on this probability distribution, the neural network classifies the given image as a cat, a dog, a tiger, or none.

Now let's make this concrete with some numeric values.

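Suppose we input an image and obtain these values (the same ones used in the Python code below, in the order cat, dog, tiger, none):

$$Z^{[L]} = [1.25, 2.44, 0.78, 0.12]^T$$

Then,

$$e^{Z^{[L]}} \approx [3.4903, 11.4730, 2.1815, 1.1275]^T$$

And,

$$\sum_{j=1}^{4} e^{z_j} \approx 18.2724$$

Therefore the probability distribution is calculated as:

$$P(\text{cat} \mid X) \approx \frac{3.4903}{18.2724} \approx 0.1910, \quad P(\text{dog} \mid X) \approx \frac{11.4730}{18.2724} \approx 0.6279,$$

$$P(\text{tiger} \mid X) \approx \frac{2.1815}{18.2724} \approx 0.1194, \quad P(\text{none} \mid X) \approx \frac{1.1275}{18.2724} \approx 0.0617$$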

Since the dog class has the highest probability (about 0.63), we can say that the supplied image is of a dog.
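In code, picking the predicted class from the distribution is usually done with an argmax. A short sketch, reusing the scores from the Python example in this post and assuming the class order cat, dog, tiger, none:

```python
import numpy as np

classes = ["cat", "dog", "tiger", "none"]
Z = np.array([1.25, 2.44, 0.78, 0.12])  # scores from the post's Python example

# Softmax: normalized exponentials
probs = np.exp(Z) / np.sum(np.exp(Z))

# The predicted class is the one with the largest probability ("dog" here)
predicted = classes[int(np.argmax(probs))]
print(predicted, probs.round(4))
```

Because the exponential is strictly increasing, softmax preserves the ordering of the scores, so `np.argmax(Z)` would pick the same class; the probabilities themselves matter when you need the confidence values, for example in a cross-entropy loss.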

Now let's see how to implement the softmax activation function in Python.

Python Code:

```python
import numpy as np

def softmax(Z_data):
    # Exponentiate every element of the input vector
    exp_Z_data = np.exp(Z_data)
    #print("exp(Z_data) = ", exp_Z_data)
    # Sum of all exponentials (the normalizing denominator)
    sum_of_exp_Z_data = np.sum(exp_Z_data)
    #print("sum of exponentials = ", sum_of_exp_Z_data)
    # Divide each exponential by the sum to get a probability
    prob_dist = [exp_Zi / sum_of_exp_Z_data for exp_Zi in exp_Z_data]
    return np.array(prob_dist, dtype=float)

Z_data = [1.25, 2.44, 0.78, 0.12]  # for cat, dog, tiger, none

p_cat = softmax(Z_data)[0]
print("probability of being cat = ", p_cat)
p_dog = softmax(Z_data)[1]
print("probability of being dog = ", p_dog)
p_tiger = softmax(Z_data)[2]
print("probability of being tiger = ", p_tiger)
p_none = softmax(Z_data)[3]
print("probability of being none = ", p_none)
```

The output of this code is:

```
probability of being cat =  0.19101770813831334
probability of being dog =  0.627890718698843
probability of being tiger =  0.11938650086860875
probability of being none =  0.061705072294234845
```
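The function above works well for small scores like these, but `np.exp` overflows to `inf` for large inputs (scores around 1000, for example), and the division then yields `nan`. A common remedy, sketched here as an alternative rather than the post's own code, is to subtract the maximum score before exponentiating; the result is unchanged because the factor e^(-max) cancels between numerator and denominator. The `axis` parameter and the name `stable_softmax` are additions for illustration, so the same function can also normalize a batch of score vectors row by row.

```python
import numpy as np

def stable_softmax(z, axis=-1):
    """Softmax along `axis`, shifted by the max for numerical stability."""
    z = np.asarray(z, dtype=float)
    # Subtracting the max leaves the result unchanged (e^(-max) cancels)
    # but keeps every exponent <= 0, so np.exp cannot overflow.
    shifted = z - np.max(z, axis=axis, keepdims=True)
    exp_z = np.exp(shifted)
    return exp_z / np.sum(exp_z, axis=axis, keepdims=True)

# Same scores as in the example above: cat, dog, tiger, none
print(stable_softmax([1.25, 2.44, 0.78, 0.12]))

# Large scores: the naive np.exp(z) / np.sum(np.exp(z)) would overflow here
print(stable_softmax([1000.0, 1001.0, 1002.0]))

# A batch of two score vectors, normalized row by row
batch = np.array([[1.25, 2.44, 0.78, 0.12],
                  [0.0, 0.0, 0.0, 0.0]])
print(stable_softmax(batch, axis=1))
```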

Original Link: https://dev.to/keshavs759/softmax-activation-function-with-python-2cpa

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends