
Testing the Async Cloud with AWS CDK

One of the best optimizations we can make when adopting cloud and serverless technologies is to take advantage of asynchronous processing. Services like EventBridge, Step Functions, SNS and SQS can form the backbone of highly scalable, reliable, cost-effective and performant job processing. Cloud services like these mean we no longer have to worry about a single process running out of memory or a spike in traffic causing critical business functions to fail when we need them most.

Adopting asynchronous architectures introduces new challenges for test automation. Nearly all automated testing is built around synchronous call-and-response processing. We call the web server and test the HTML string returned. We POST to the /widgets endpoint, receive a 200 OK response, and can then GET our new widget. Tools like Selenium and Cypress aren't suited to workflows that involve asynchronous background processing. At best, you can fire an event and then poll for the eventual desired result.

While the idea of testing frameworks for asynchronous processing hasn't really hit the mainstream, there are some great articles out there. I highly recommend following the work of Sarah Hamilton and Paul Swail and their approaches to solving this problem using libraries like aws-testing-library and sls-test-tools.

These approaches share some of the same drawbacks:

- They rely on an external credential or key to access the AWS account in question.

- They require a wait or sleep step to allow AWS time to process the asynchronous event.

- There's no real CI/CD integration happening. A failing test won't cause a deployment to roll back.

Read enough already? Check out my source code.

Table of Contents

- External Credentials

- Don't Sleep

- Automatic Rollback

- Custom Resource Provider Framework for Test

- Payment Collections App

- Test Event

- Complete Event

- Provider

- Test Run

- Bonus: EventBridgeWebSocket

- Infrastructure Testing

- Next Steps

External Credentials

When writing tests using something like aws-testing-library or sls-test-tools, we must have credentials for an AWS account that at least let us send a few events and subscribe to or query the results in order to perform assertions. Depending on our organization's stance on cloud access, this could be completely fine or a never-gonna-happen dealbreaker. This kind of approach is often OK for a development environment, but it is unlikely to fly in production.

We may be restricted to running such tests in a CI/CD pipeline. That could get us past a security review, but it might also slow the feedback loop of writing tests, resulting in fewer overall tests written.

The safest way to solve this problem is to remove external access from the equation. If we run our tests from a resource within the AWS account, such as a Lambda function, we still need normal IAM permissions, but we don't need to create any additional external roles. Tests can then run in production without violating the principle of least privilege, under which our deployment role has only the grants required to create the resources we need.

Don't Sleep

Sleep or wait statements are the bane of test automation. When a test fails, can we "fix" it by increasing the timeout? Does doing so reflect the normal operation of using cloud or have we introduced some instability to our system that is now being normalized by making the tests take longer?

In the end, we have to either pin our sleep statement to the longest time our process could possibly take or accept some tolerance for test failures. We can mitigate this problem by polling instead of sleeping. To be fair, the solutions given by Sarah and Paul don't preclude polling, but you are on your own to implement it.
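Implementing polling yourself doesn't take much code. The sketch below re-runs a check at a fixed interval, returns as soon as the check produces a value, and fails only when an overall time budget runs out. pollUntil and PollOptions are hypothetical names for illustration; they are not part of aws-testing-library or sls-test-tools.

```typescript
// Hypothetical options for a simple polling helper.
interface PollOptions {
  intervalMs: number; // delay between attempts
  timeoutMs: number; // total time budget before giving up
}

const delay = (ms: number): Promise<void> => new Promise((resolve) => setTimeout(resolve, ms));

// Re-run `check` until it returns something other than undefined.
// Unlike a fixed sleep, this finishes as soon as the condition holds and
// only waits the full timeoutMs when the system is actually slow or broken.
export async function pollUntil<T>(
  check: () => Promise<T | undefined>,
  { intervalMs, timeoutMs }: PollOptions,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const result = await check();
    if (result !== undefined) {
      return result;
    }
    // Stop if another wait would overshoot the time budget.
    if (Date.now() + intervalMs > deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await delay(intervalMs);
  }
}
```

The timeout still has to be generous enough for the slowest legitimate run, but a passing test no longer pays that cost.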

Automatic Rollback

Once we have a really good automation suite, we will want to connect it to our CI/CD process so that a failing test rolls back the deployment. Assuming we are using AWS and CloudFormation, the only lever we really have to pull is to attach an alarm to a test suite. This could be achieved by having the tests publish custom metrics that trigger the alarm and a potential rollback. That would be yet another permission needed by our test runner role, and it isn't something natively supported by any testing library.
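As a rough illustration of that extra moving part, this sketch builds the request body a test runner might send to CloudWatch PutMetricData. The field names follow the CloudWatch API, but the namespace, metric name and dimension are placeholders made up for this example, and the runner's role would also need the cloudwatch:PutMetricData permission.

```typescript
// Shape of a CloudWatch PutMetricData request, declared locally so the
// sketch has no SDK dependency. Field names follow the CloudWatch API.
interface MetricDatum {
  MetricName: string;
  Value: number;
  Unit: 'Count';
  Dimensions: { Name: string; Value: string }[];
}

interface PutMetricDataInput {
  Namespace: string;
  MetricData: MetricDatum[];
}

// Hypothetical naming: "IntegrationTests", "TestFailures" and "Suite" are placeholders.
export function buildTestResultMetric(suite: string, passed: boolean): PutMetricDataInput {
  return {
    Namespace: 'IntegrationTests',
    MetricData: [
      {
        MetricName: 'TestFailures',
        // An alarm on the Sum of TestFailures >= 1 could then flag any failing run.
        Value: passed ? 0 : 1,
        Unit: 'Count',
        Dimensions: [{ Name: 'Suite', Value: suite }],
      },
    ],
  };
}
```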

Custom Resource Provider Framework for Test

This is not the first time I've written about Provider Framework. I think the CDK implementation of CloudFormation Custom Resources is one of the coolest things I've come across recently. I'm constantly impressed by the things I can do with it. Let's examine how it solves some of the problems I've outlined above.

Our tests will be executed by Lambda functions instead of some external tool. These functions still need normal IAM roles to access the resources needed to perform the test, but the functions themselves are not accessible to any resource or role outside the account. This means we can run tests in production without opening up any external access to our data.

We get built-in polling in the isComplete step. This is a Lambda function, invoked every queryInterval, that can perform some sort of assertion and then report whether the test is complete or we should keep polling.

Provider Framework also has built-in error-handling. Throwing any error will pass a "FAILED" response to CloudFormation, which in turn will trigger a rollback.

These advantages are offset by the lack of a good assertion library ready to use in a Lambda function, and by a potentially poor developer experience if we find ourselves debugging the custom resource itself or deploying frequently just to write a test. I think those problems can be solved with some additional tooling, but no such tooling exists yet.
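Until such tooling exists, one way to shorten the feedback loop is to exercise the handlers locally. The harness below is a hypothetical, simplified model of how the Provider Framework treats an isComplete handler: poll at an interval, succeed when IsComplete is true, and fail on a thrown error or when the total timeout elapses. The real framework runs this loop in a Step Functions state machine, so treat driveIsComplete as an approximation, not the framework's code.

```typescript
// Simplified, hypothetical model of how the Provider Framework drives an
// isComplete handler, for exercising a handler locally.
interface IsCompleteResult<D> {
  IsComplete: boolean;
  Data?: D;
}

type Outcome<D> = { Status: 'SUCCESS'; Data?: D } | { Status: 'FAILED'; Reason: string };

const pause = (ms: number): Promise<void> => new Promise((resolve) => setTimeout(resolve, ms));

export async function driveIsComplete<D>(
  isComplete: () => Promise<IsCompleteResult<D>>,
  queryIntervalMs: number,
  totalTimeoutMs: number,
): Promise<Outcome<D>> {
  const deadline = Date.now() + totalTimeoutMs;
  while (Date.now() <= deadline) {
    try {
      const result = await isComplete();
      if (result.IsComplete) {
        // Data is only meaningful once the handler reports completion.
        return { Status: 'SUCCESS', Data: result.Data };
      }
    } catch (e) {
      // A thrown error maps to FAILED, which is what triggers a rollback.
      return { Status: 'FAILED', Reason: e instanceof Error ? e.message : String(e) };
    }
    await pause(queryIntervalMs);
  }
  // Running out of time is reported as a failure as well.
  return { Status: 'FAILED', Reason: 'isComplete did not finish before totalTimeout' };
}
```

Wrapping a real handler in a closure that builds a fake CloudFormationCustomResourceEvent would let you iterate on assertions without a deployment, as long as the handler can reach the resources it queries.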

Payment Collections App

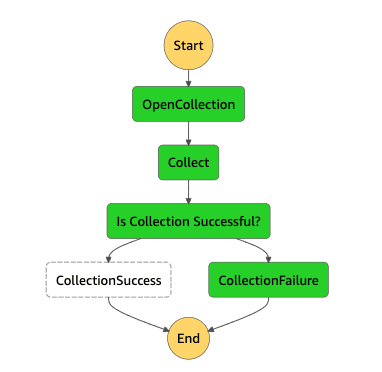

So let's see a test in action. The design of this application is that we receive an asynchronous notification from our payment provider indicating whether or not a payment was successful. If the payment was successful, we update our database to indicate that. Otherwise we trigger a collections workflow.

Payment events (success or failure) come to us via EventBridge. Our Collections workflow is managed by Step Functions. The record of payment and current status is stored in DynamoDB.

The scenarios we'd like to test are:

- Receive a successful payment event and record it in our table.

- Receive a failed payment event and kick off the collections workflow, run the collection, and record the eventual result in our table.

Test Event

To write the test we start with a Lambda function that will initiate the events we want to test. In this case, we're going to send two events to our event bus. Here is a TypeScript function that will do exactly that.

```typescript
import EventBridge from 'aws-sdk/clients/eventbridge';
import type { CloudFormationCustomResourceEvent } from 'aws-lambda';

const eb = new EventBridge();

export const handler = async (event: CloudFormationCustomResourceEvent): Promise<void> => {
  // Nothing to test when the stack is being torn down.
  if (event.RequestType === 'Delete') {
    return;
  }
  try {
    // Version is a unique value set on the custom resource for each deployment.
    const { Version } = event.ResourceProperties;
    // Publish one "success" and one "failure" payment event to the bus.
    const events = ['success', 'failure'].map((status) =>
      eb
        .putEvents({
          Entries: [
            {
              EventBusName: process.env.BUS_NAME,
              Source: 'payments',
              DetailType: status,
              Time: new Date(),
              Detail: JSON.stringify({ id: `${Version}-${status}` }),
            },
          ],
        })
        .promise(),
    );
    await Promise.all(events);
  } catch (e) {
    console.error(e);
    // Throwing reports FAILED to CloudFormation, which rolls back the deployment.
    throw new Error('Integration Test failed!');
  }
};
```

We're able to take advantage of the CloudFormationCustomResourceEvent type from the @types/aws-lambda package. Because Custom Resources participate in the full CloudFormation lifecycle, we need to treat "Delete" as a no-op. We don't want the test to run if the stack is being deleted, as it would obviously fail once the necessary resources no longer exist.

The rest of the function simply uses putEvents to publish two test events, one success and one failure. Note that we pull the Version from the Custom Resource properties and include it in the event detail.
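One thing the handler above doesn't check is partial failure: PutEvents can accept a request while rejecting individual entries, reporting them through FailedEntryCount in the response instead of throwing. A dependency-free sketch of building the entries and checking for rejected ones might look like this. buildTestEntries and assertAllEntriesAccepted are hypothetical helpers, and the local interfaces mirror only the fields used here. Since PutEvents accepts up to 10 entries per request, both entries could also go into a single call.

```typescript
// Only the PutEvents fields this sketch uses, declared locally.
interface PutEventsEntry {
  EventBusName?: string;
  Source: string;
  DetailType: string;
  Detail: string;
}

interface PutEventsResult {
  FailedEntryCount?: number;
}

// One event per scenario; the id ties each event to this test run's Version.
export function buildTestEntries(version: string, busName?: string): PutEventsEntry[] {
  return ['success', 'failure'].map((status) => ({
    EventBusName: busName,
    Source: 'payments',
    DetailType: status,
    Detail: JSON.stringify({ id: `${version}-${status}` }),
  }));
}

// Throw (failing the custom resource) if EventBridge rejected any entry.
export function assertAllEntriesAccepted(results: PutEventsResult[]): void {
  const failed = results.reduce((sum, r) => sum + (r.FailedEntryCount ?? 0), 0);
  if (failed > 0) {
    throw new Error(`${failed} test event(s) were rejected by EventBridge`);
  }
}
```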

Complete Event

The isComplete handler will be called at the interval specified in our code (queryInterval, defaulting to 5 seconds) until it returns a positive result, throws an error, or totalTimeout (default 30 minutes, maximum two hours) elapses. As before, if this is a stack deletion event, we want the test to simply pass, since our business logic shouldn't be under test. On a create or update event, we query the database to see whether the job has finished yet, using the same Version from the original Custom Resource so that we look up the same items in the table.

```typescript
import { CloudFormationCustomResourceEvent } from 'aws-lambda';
import { PaymentEntity, PaymentStatus } from '../models/payment';

export const handler = async (
  event: CloudFormationCustomResourceEvent,
): Promise<{ Data?: { Result: string }; IsComplete: boolean }> => {
  // Stack deletion: report complete immediately so the delete isn't blocked.
  if (event.RequestType === 'Delete') {
    return { IsComplete: true };
  }
  const { Version } = event.ResourceProperties;
  try {
    // Look up both test payments by the Version-based ids sent by the onEvent handler.
    // A missing item falls back to {}, so its status reads as undefined (still pending).
    const successResponse = (await PaymentEntity.get({ id: `${Version}-success` })).Item || {};
    const failureResponse = (await PaymentEntity.get({ id: `${Version}-failure` })).Item || {};
    console.log('Success Response: ', successResponse.status);
    console.log('Failure Response: ', failureResponse.status);
    // Done when the success payment is recorded as SUCCESS and the failed payment
    // has finished its collections workflow, whichever way it ended.
    const IsComplete =
      successResponse.status === PaymentStatus.SUCCESS &&
      [PaymentStatus.COLLECTION_FAILURE, PaymentStatus.COLLECTION_SUCCESS].includes(failureResponse.status);
    return IsComplete
      ? {
          Data: {
            Result: `Payment ${Version}-success finished with status ${successResponse.status} and payment ${Version}-failure finished with status ${failureResponse.status}`,
          },
          IsComplete,
        }
      : { IsComplete };
  } catch (e) {
    console.error(e);
    // Rethrowing fails the custom resource and triggers a rollback.
    throw e;
  }
};
```

This function must return an object whose IsComplete property is a boolean. If IsComplete is true, the object may also include a Data attribute with a JSON payload. Here I've defined that as { Result: string }, a string I intend to print in the console to give some detail on the test.

Note that we lack the convenience of a nice assertion library, but this example is simple enough to get by with imperative code.
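Node's built-in assert module is available in the Lambda Node.js runtime and can stand in for a fuller assertion library. In the sketch below, paymentsSettled is a hypothetical refactoring of the completion check: it returns false while the test should keep polling and throws an AssertionError when a record is clearly wrong, so the custom resource fails right away instead of waiting out totalTimeout. It assumes the success record is written once with its final status, and that a missing item is represented by {} as in the handler above.

```typescript
import { strict as assert } from 'assert';

// Status values taken from the article's PaymentStatus enum; the enum may
// define others (such as an in-progress state) that simply mean "keep polling".
const COLLECTION_DONE = ['COLLECTION_FAILURE', 'COLLECTION_SUCCESS'];

interface PaymentItem {
  status?: string;
}

// Returns false to keep polling, true when both scenarios have settled, and
// throws an AssertionError (failing the custom resource) when the success
// payment was recorded with any status other than SUCCESS.
export function paymentsSettled(success: PaymentItem, failure: PaymentItem): boolean {
  if (success.status === undefined) {
    return false; // success record not written yet
  }
  assert.equal(success.status, 'SUCCESS', `success payment recorded as ${success.status}`);
  // The failure payment is done once its collections workflow has finished.
  return failure.status !== undefined && COLLECTION_DONE.includes(failure.status);
}
```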

Provider

We need to write a little CDK code to make all this work. In this case, I wrote the entire integration test as a nested stack, which nicely isolates it from the actual application. In addition to organizing our code and potentially avoiding stack resource limits, this also lets us hedge our bets a little by making it easy to disable the nested stack if a test failure is blocking a critical deployment.

The stack creates the functions and grants the permissions needed to put events to EventBridge and query DynamoDB. Over the course of the test, we'll see a few events come across EventBridge and trigger Step Functions. Tests that trigger other asynchronous workflows through SNS or SQS can be written in a similar fashion. Adding the functions to Provider Framework only takes a few lines of code.

```typescript
const intTestProvider = new Provider(stack, 'IntTestProvider', {
  isCompleteHandler,
  logRetention: RetentionDays.ONE_DAY,
  onEventHandler,
  totalTimeout: Duration.minutes(1),
});

this.testResource = new CustomResource(stack, 'IntTestResource', {
  // A new timestamp on every synth forces CloudFormation to re-run the test.
  properties: { Version: new Date().getTime().toString() },
  serviceToken: intTestProvider.serviceToken,
});
```

We pass our two handlers to the Provider construct, then create a CustomResource that uses the service token from the Provider. We also set the Version property to the current timestamp in milliseconds, as a string. This can serve as a unique key, assuming two tests don't kick off in the same millisecond. If we think that might happen, a UUID generator would be more appropriate.

It's critical to include a unique value here: if none of the properties change, CloudFormation sees no difference on update and will not run our test at all!
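If a bare timestamp feels too collision-prone, Node's crypto.randomUUID (Node 14.17 and later) is one option. makeTestVersion is a hypothetical helper that prefixes the UUID with the timestamp so ids still sort roughly by time. Whatever produces the value, it has to run in the CDK app at synth time, not inside a handler, so that each deployment sees a changed property.

```typescript
import { randomUUID } from 'crypto';

// Hypothetical helper for the custom resource's Version property.
// Call it in the CDK app (at synth time), e.g.
//   properties: { Version: makeTestVersion() }
// The timestamp prefix keeps ids roughly time-ordered; the UUID removes the
// chance of two runs colliding within the same millisecond.
export function makeTestVersion(): string {
  return `${Date.now()}-${randomUUID()}`;
}
```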

Finally the testResource is stored as a member of the IntegrationTestStack so that we can access the output and print it to the console from the main stack.

```typescript
new CfnOutput(this, 'IntTestResult', { value: intTestStack.testResource.getAttString('Result') });
```

Test Run

With all that done, we can now deploy our application as normal, whether that's a cdk deploy from our laptop or something more sophisticated like a CI/CD pipeline.

```
Outputs:
payments-app-stack.IntTestResult = Payment 1631477097557-success finished with status SUCCESS and payment 1631477097557-failure finished with status COLLECTION_FAILURE
```

We can check out the visualization of a few step function runs.

Bonus: EventBridgeWebSocket

I didn't want to pass up the opportunity to take a quick look at David Boyne's EventBridgeWebSocket construct. With just a few lines of code, I'm able to actively monitor EventBridge traffic while my test runs!

```typescript
new EventBridgeWebSocket(stack, 'sockets', {
  bus: eventBus.eventBusName,
  eventPattern: {
    source: ['payments'],
  },
  stage: 'dev',
});
```

There's really nothing to this. I just have to provide the bus name and an optional pattern. Now, using websocat, I get output like this:

```
% websocat wss://MY_API_ID.execute-api.us-east-1.amazonaws.com/dev
{"version":"0","id":"e8d8989a-c870-85b2-9f56-aa7867701eae","detail-type":"success","source":"payments","account":"MY_ACCOUNT_ID","time":"2021-09-12T20:22:03Z","region":"us-east-1","resources":[],"detail":{"id":"1631478043585-success"}}
{"version":"0","id":"34f6d36b-0cda-65e2-9851-194a9c1be01d","detail-type":"failure","source":"payments","account":"MY_ACCOUNT_ID","time":"2021-09-12T20:22:04Z","region":"us-east-1","resources":[],"detail":{"id":"1631478043585-failure"}}
{"version":"0","id":"8444d579-c553-4c91-e07a-9de3f9cc17c0","detail-type":"collections","source":"payments","account":"MY_ACCOUNT_ID","time":"2021-09-12T20:22:06Z","region":"us-east-1","resources":[],"detail":{"id":"1631478043585-failure"}}
```

This isn't explicitly a testing tool, of course, but it can be a great help when debugging issues, and the cost of entry is amazingly low. Just make sure you don't deploy this to production, at least not without adding an authorizer to the WebSocketApi.
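To make the eventPattern in the construct above a little more concrete, here is a deliberately simplified matcher: each pattern key must exist on the event and equal one of the listed values. Real EventBridge patterns also support nested detail fields and operators such as prefix, numeric, anything-but and exists, none of which matchesPattern (a hypothetical helper) models.

```typescript
// Deliberately simplified event-pattern matcher for illustration only:
// every top-level key in the pattern must exist on the event and equal
// one of the listed strings.
type SimplePattern = Record<string, string[]>;

export function matchesPattern(event: Record<string, unknown>, pattern: SimplePattern): boolean {
  return Object.entries(pattern).every(([key, allowed]) => {
    const value = event[key];
    return typeof value === 'string' && allowed.includes(value);
  });
}

// Usage with the third event from the websocat output (fields trimmed).
const collectionsEvent = {
  'detail-type': 'collections',
  source: 'payments',
  detail: { id: '1631478043585-failure' },
};
console.log(matchesPattern(collectionsEvent, { source: ['payments'] })); // true
console.log(matchesPattern(collectionsEvent, { 'detail-type': ['success', 'failure'] })); // false
```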

Infrastructure Testing

Now that we've established Provider Framework as a good basis for testing, are there other applications? Yes! Instead of writing a test against our application, we could use aws-sdk to perform assertions against our infrastructure. This has the same advantages outlined above:

- We don't need external keys to perform these assertions.

- Using addDependency can guarantee the test only runs after the resources are created or updated.

- If the test fails, the deployment will automatically roll back.
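As an example of an infrastructure assertion, the sketch below checks a DynamoDB DescribeTable response, such as the one returned by the AWS SDK's describeTable call. The interface mirrors only the response fields it reads, and the expectations (an ACTIVE, on-demand table) are hypothetical; an onEvent or isComplete handler could throw whenever checkTable returns a non-empty list.

```typescript
// Only the DescribeTable response fields this check reads, declared locally.
interface DescribeTableOutput {
  Table?: {
    TableStatus?: string;
    BillingModeSummary?: { BillingMode?: string };
  };
}

// Hypothetical expectations for a table in this stack: it should be ACTIVE
// and billed on demand. Returns every problem found so a handler can report
// them all at once.
export function checkTable(output: DescribeTableOutput): string[] {
  const problems: string[] = [];
  const table = output.Table;
  if (table?.TableStatus !== 'ACTIVE') {
    problems.push(`expected TableStatus ACTIVE, got ${table?.TableStatus}`);
  }
  if (table?.BillingModeSummary?.BillingMode !== 'PAY_PER_REQUEST') {
    problems.push(`expected PAY_PER_REQUEST billing, got ${table?.BillingModeSummary?.BillingMode}`);
  }
  return problems;
}
```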

It's worth mentioning that AWS CDK already has an RFC for integration testing, so we might end up with something even better. In the meantime, if you are serious about integration testing, it's time to give AWS CDK and Provider Framework a look!

Next Steps

The weakness of this approach is the imperative coding and the lack of the convenience methods that libraries like aws-testing-library and sls-test-tools offer. It definitely feels a lot more ergonomic to write expect({...}).toHaveLog(expectedLog) than to query a database and test properties on the returned item. I'm not sure a library like jest is really suited to running in Lambda, but there's definitely room for innovation on a better assertion engine here.

That said, I think this approach is strong enough on its own. I've been using just such a test for about three months now and find it to be very reliable and a good way to guarantee quality delivery.

Original Link: https://dev.to/aws-builders/testing-the-async-cloud-with-aws-cdk-33aj
