
Docker deploy

Our server is finished and almost ready to deploy, which we will do using Docker. Notice that I said it's almost ready, so let's see what is missing. So far we have used the React development server, which listens on port 3000 and forwards requests to our backend on port 8080. That makes sense during development, since it makes it easier to work on the frontend and backend at the same time and to debug the React app.

In production we don't need that, and it makes much more sense to run only our backend server and have it serve the static frontend files to the client. So instead of starting the React development server with npm start, we will build optimized frontend files for production by running npm run build inside the assets/ directory. This creates a new directory, assets/build/, containing all the files needed for production. We also have to tell our backend where to find these files so that it can serve them. That is done with router.Use(static.Serve("/", static.LocalFile("./assets/build", true))). Of course, we want to do that only if the server is started in the prod environment, so we need to slightly update a few files.
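The idea of serving the built frontend only in production can be sketched without Gin. The following is a minimal illustration using only Go's standard library, not the guide's actual router: newHandler, statusFor and makeBuildDir are hypothetical names, /api/ping is a made-up route, and a temporary directory stands in for assets/build.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
	"path/filepath"
)

// newHandler is a hypothetical stand-in for the router setup. It always
// exposes an API route and serves files from staticDir only when env is "prod".
func newHandler(env, staticDir string) http.Handler {
	mux := http.NewServeMux()
	mux.HandleFunc("/api/ping", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "pong")
	})
	if env == "prod" {
		mux.Handle("/", http.FileServer(http.Dir(staticDir)))
	}
	return mux
}

// statusFor sends a GET request for path to h and returns the status code.
func statusFor(h http.Handler, path string) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}

// makeBuildDir creates a temporary directory containing an index.html,
// standing in for the assets/build directory (an assumption of this sketch).
func makeBuildDir() (string, error) {
	dir, err := os.MkdirTemp("", "build")
	if err != nil {
		return "", err
	}
	err = os.WriteFile(filepath.Join(dir, "index.html"), []byte("<h1>rgb</h1>"), 0o644)
	return dir, err
}

func main() {
	dir, err := makeBuildDir()
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)

	fmt.Println("dev  GET /:", statusFor(newHandler("dev", dir), "/"))
	fmt.Println("prod GET /:", statusFor(newHandler("prod", dir), "/"))
}
```

In dev the root path is not handled by the backend, so the React development server stays responsible for the frontend. In prod the same handler serves index.html from the build directory.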

First, we will update the Parse() function in internal/cli/cli.go to return the environment value as a string:

    func Parse() string {
        flag.Usage = usage
        env := flag.String("env", "dev", `Sets run environment. Possible values are "dev" and "prod"`)
        flag.Parse()
        logging.ConfigureLogger(*env)
        if *env == "prod" {
            logging.SetGinLogToFile()
        }
        return *env
    }

Then we will update the Config struct and the NewConfig() function so they can receive and set the environment value:

    type Config struct {
        Host       string
        Port       string
        DbHost     string
        DbPort     string
        DbName     string
        DbUser     string
        DbPassword string
        JwtSecret  string
        Env        string
    }

    func NewConfig(env string) Config {
        ...
        return Config{
            Host:       host,
            Port:       port,
            DbHost:     dbHost,
            DbPort:     dbPort,
            DbName:     dbName,
            DbUser:     dbUser,
            DbPassword: dbPassword,
            JwtSecret:  jwtSecret,
            Env:        env,
        }
    }

Now we can update cmd/rgb/main.go to receive the env value from the CLI and pass it to the new configuration, which is then used to start the server:

    func main() {
        env := cli.Parse()
        server.Start(conf.NewConfig(env))
    }

The next thing we have to do is update the router so that it receives the configuration as an argument and serves static files when started in production mode:

    package server

    import (
        "net/http"

        "rgb/internal/conf"
        "rgb/internal/store"

        "github.com/gin-contrib/static"
        "github.com/gin-gonic/gin"
    )

    func setRouter(cfg conf.Config) *gin.Engine {
        // Creates default gin router with Logger and Recovery middleware already attached
        router := gin.Default()

        // Enables automatic redirection if the current route can't be matched but a
        // handler for the path with (without) the trailing slash exists.
        router.RedirectTrailingSlash = true

        // Serve static files to frontend if server is started in production environment
        if cfg.Env == "prod" {
            router.Use(static.Serve("/", static.LocalFile("./assets/build", true)))
        }

        // Create API route group
        api := router.Group("/api")
        api.Use(customErrors)
        {
            api.POST("/signup", gin.Bind(store.User{}), signUp)
            api.POST("/signin", gin.Bind(store.User{}), signIn)
        }

        authorized := api.Group("/")
        authorized.Use(authorization)
        {
            authorized.GET("/posts", indexPosts)
            authorized.POST("/posts", gin.Bind(store.Post{}), createPost)
            authorized.PUT("/posts", gin.Bind(store.Post{}), updatePost)
            authorized.DELETE("/posts/:id", deletePost)
        }

        router.NoRoute(func(ctx *gin.Context) { ctx.JSON(http.StatusNotFound, gin.H{}) })

        return router
    }

The last line that needs updating is in the migrations/main.go file. Just change

    store.SetDBConnection(database.NewDBOptions(conf.NewConfig()))

to

    store.SetDBConnection(database.NewDBOptions(conf.NewConfig("dev")))

Actually, that's not the last thing. You will also have to update all tests that use the configuration and router setup, but that's entirely up to you and depends on which tests you have implemented.
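If your tests need to pick an environment, one option is a small helper that parses the -env flag from an explicit argument list rather than from os.Args. This is only a sketch under that assumption: parseEnv is a hypothetical function that is not part of the guide's code, and the validation of the flag value is an addition that the original Parse() does not perform.

```go
package main

import (
	"flag"
	"fmt"
	"io"
)

// parseEnv is a hypothetical, test-friendly variant of cli.Parse. It reads
// the -env flag from args instead of os.Args and rejects unknown values.
func parseEnv(args []string) (string, error) {
	fs := flag.NewFlagSet("rgb", flag.ContinueOnError)
	fs.SetOutput(io.Discard) // keep usage text out of test output
	env := fs.String("env", "dev", `Sets run environment. Possible values are "dev" and "prod"`)
	if err := fs.Parse(args); err != nil {
		return "", err
	}
	if *env != "dev" && *env != "prod" {
		return "", fmt.Errorf("unknown environment %q", *env)
	}
	return *env, nil
}

func main() {
	// Try the default, a valid value and an invalid value.
	for _, args := range [][]string{{}, {"-env", "prod"}, {"-env", "staging"}} {
		env, err := parseEnv(args)
		fmt.Printf("args=%v env=%q err=%v\n", args, env, err)
	}
}
```

A test can then call parseEnv with whatever arguments it needs and pass the result to conf.NewConfig, without touching the global flag state.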

Now everything is ready for the Docker deploy. Docker itself is outside the scope of this guide, so I will not go into detail about the contents of Dockerfile, .dockerignore and docker-compose.yml.

First we will create a .dockerignore file in the project root directory:

    # This file
    .dockerignore

    # Git files
    .git/
    .gitignore

    # VS Code config dir
    .vscode/

    # Docker configuration files
    docker/

    # Assets dependencies and built files
    assets/build/
    assets/node_modules/

    # Log files
    logs/

    # Built binary
    cmd/rgb/rgb

    # ENV file
    .env

    # Readme file
    README.md

Now create a new directory docker/ with two files, Dockerfile and docker-compose.yml. The content of Dockerfile will be:

    FROM node:16 AS frontendBuilder

    # set app work dir
    WORKDIR /rgb

    # copy assets files to the container
    COPY assets/ .

    # set assets/ as work dir to build frontend static files
    WORKDIR /rgb/assets
    RUN npm install
    RUN npm run build

    FROM golang:1.16.3 AS backendBuilder

    # set app work dir
    WORKDIR /go/src/rgb

    # copy all files to the container
    COPY . .

    # build app executable
    RUN CGO_ENABLED=0 GOOS=linux go build -o cmd/rgb/rgb cmd/rgb/main.go

    # build migrations executable
    RUN CGO_ENABLED=0 GOOS=linux go build -o migrations/migrations migrations/*.go

    FROM alpine:3.14

    # Create a group and user deploy
    RUN addgroup -S deploy && adduser -S deploy -G deploy

    ARG ROOT_DIR=/home/deploy/rgb

    WORKDIR ${ROOT_DIR}

    RUN chown deploy:deploy ${ROOT_DIR}

    # copy static assets file from frontend build
    COPY --from=frontendBuilder --chown=deploy:deploy /rgb/build ./assets/build

    # copy app and migrations executables from backend builder
    COPY --from=backendBuilder --chown=deploy:deploy /go/src/rgb/migrations/migrations ./migrations/
    COPY --from=backendBuilder --chown=deploy:deploy /go/src/rgb/cmd/rgb/rgb .

    # set user deploy as current user
    USER deploy

    # start app
    CMD [ "./rgb", "-env", "prod" ]

And the content of docker-compose.yml is:

    version: "3"
    services:
      rgb:
        image: kramat/rgb
        env_file:
          - ../.env
        environment:
          RGB_DB_HOST: db
        depends_on:
          - db
        ports:
          - ${RGB_PORT}:${RGB_PORT}
      db:
        image: postgres
        environment:
          POSTGRES_USER: ${RGB_DB_USER}
          POSTGRES_PASSWORD: ${RGB_DB_PASSWORD}
          POSTGRES_DB: ${RGB_DB_NAME}
        ports:
          - ${RGB_DB_PORT}:${RGB_DB_PORT}
        volumes:
          - postgresql:/var/lib/postgresql/rgb
          - postgresql_data:/var/lib/postgresql/rgb/data
    volumes:
      postgresql: {}
      postgresql_data: {}

All files required for the Docker deploy are now ready, so let's see how to build the Docker image and deploy it. First we will pull the postgres image from Docker's official image repository:

    docker pull postgres

The next step is to build the rgb image. Inside the project root directory run (replace DOCKER_ID with your own Docker ID):

    docker build -t DOCKER_ID/rgb -f docker/Dockerfile .

To create the rgb and db containers with their resources, run:

    cd docker/
    docker-compose up -d

That will start both containers, and you can check their status by running docker ps. Finally, we need to run migrations. Open a shell in the rgb container by running:

    docker-compose run --rm rgb sh

Inside the container we can run migrations the same way as before:

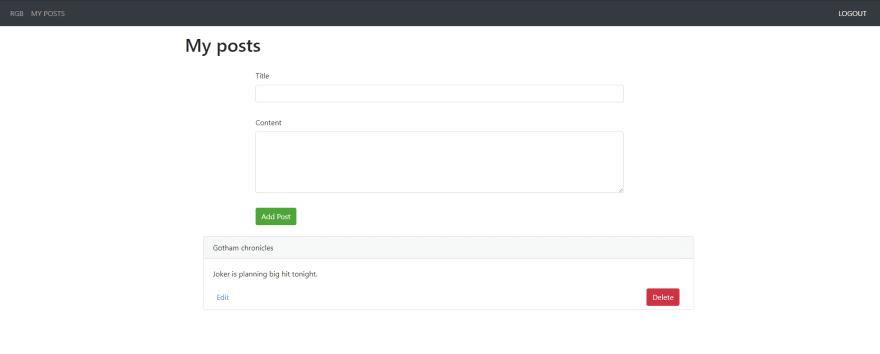

cd migrations/./migrations init./migrations upAnd we are finished. You can open localhost:8080 in your browser to check that everything is working as it should, which means you should be able to create account and add new post:
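If you prefer to check from the command line instead of the browser, a small Go program can poll the server until it responds. This is a rough sketch and not part of the guide: waitForOK and newStubServer are hypothetical helpers, and the stub server only exists so that the example runs without the containers.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// waitForOK polls url until it answers with 200 OK, trying at most attempts
// times (at least once) and sleeping delay between tries.
func waitForOK(url string, attempts int, delay time.Duration) error {
	if attempts < 1 {
		attempts = 1
	}
	client := &http.Client{Timeout: 2 * time.Second}
	var lastErr error
	for i := 0; i < attempts; i++ {
		resp, err := client.Get(url)
		if err == nil {
			resp.Body.Close()
			if resp.StatusCode == http.StatusOK {
				return nil
			}
			lastErr = fmt.Errorf("unexpected status %s", resp.Status)
		} else {
			lastErr = err
		}
		if i < attempts-1 {
			time.Sleep(delay)
		}
	}
	return fmt.Errorf("%s not ready after %d attempts: %w", url, attempts, lastErr)
}

// newStubServer starts a local test server that answers every request with
// the given status code. It stands in for the deployed rgb container.
func newStubServer(status int) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(status)
	}))
}

func main() {
	srv := newStubServer(http.StatusOK)
	defer srv.Close()

	if err := waitForOK(srv.URL, 3, 100*time.Millisecond); err != nil {
		fmt.Println("check failed:", err)
		return
	}
	fmt.Println("server answered with 200 OK")
}
```

Against the deployed containers you would pass http://localhost:8080/ (assuming RGB_PORT is 8080) instead of the stub server's URL.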

It was quite long for a simple web app, but we have completed this guide. Hopefully it was useful to some of you. If you have any questions, remarks, or you have found any issues, feel free to contact me in comments. Good luck to everyone and happy coding :)

Original Link: https://dev.to/matijakrajnik/docker-deploy-1aa7
