Web Scalability for Beginners

Introduction

Almost every new application starts out its life under very simple circumstances. The beginning means few users (mostly in the hundreds, rarely thousands), low traffic, few transactions, a small amount of data to process, and often a limited set of clients (at first, most apps have just a web portal and no mobile or desktop clients at all). Over time, however, the number of users grows, traffic spikes, transactions increase across the system, and user needs may call for mobile, desktop, and (in rare cases) IoT clients.

In this post, we take a look at the requirements that arise from growing pressure on an application. We will go over the effects of this rising pressure on the system's performance, and explore how to respond to it.

What does it mean for a system to scale?

Scalability can be defined as a system's ability to adjust in a cost-effective manner in order to fulfill the demands of that system. This "cost" is not always monetary - it can include time investment, the amount of maintenance required (automation versus manual), as well as the human resources needed to keep the system running successfully.

A system needs to be able to grow to adequately handle more users, process more data, and handle more transactions or client requests without affecting the overall user experience. A well-scaled system should also allow scaling down. Although scaling down usually matters less than scaling up, it saves costs by ensuring you do not use more resources than required.

The scaling setup should also be relatively cheap and quick to do, so it is advisable to take advantage of the work already done by cloud providers and IaaS platforms.

Sources of scalability issues

There are many areas in a system's infrastructure and codebase where scalability issues can arise. However, most scalability issues fall into these three categories:

1) Ability to serve more users

As the number of users grows, more pressure is put on the system to serve each of them well. Users experience the software in isolation from one another, so they will not accept "other users are slowing things down" as an excuse for a worse experience. A properly scaled application should handle an increasing number of concurrent users without degrading any individual user's experience.

For the system's infrastructure, higher concurrency means more open connections, more active threads, more CPU context switches, and more messages being processed at the same time.

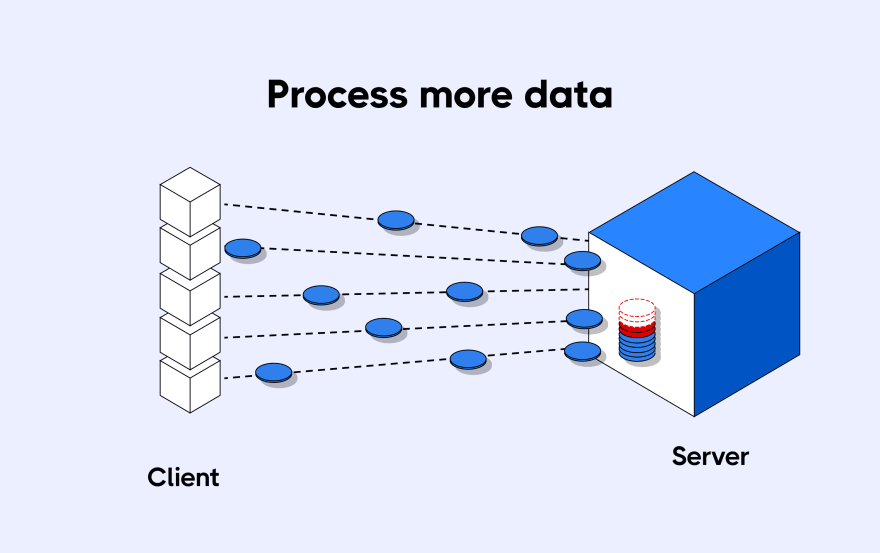

2) Ability to process more data

Imagine an invoice system that needs to calculate the gross total amount for the sales in a year for a product. At an average of a thousand sales a year, the system handles this fine. Suddenly, the product becomes popular due to a boost in ad campaigns and now the system has to process hundreds of thousands of sales in a single year. Such a scenario would put a lot of pressure on the logic in the system that has to calculate the total gross amount for a year's sales. The process would take a longer time to complete and the system could easily run out of memory and terminate the process.
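One common way to keep such a calculation from running out of memory is to process the sales as a stream instead of loading every record first. The sketch below is only an illustration under stated assumptions: `iter_sales` is a hypothetical stand-in for a database cursor or file reader, and the amounts are made-up data.

```python
from decimal import Decimal
from typing import Iterable, Iterator


def iter_sales(amounts: Iterable[str]) -> Iterator[Decimal]:
    """Yield sale amounts one at a time (hypothetical stand-in for a DB cursor)."""
    for raw in amounts:
        yield Decimal(raw)


def gross_total(sales: Iterable[Decimal]) -> Decimal:
    """Add amounts one by one so memory use does not grow with the row count."""
    total = Decimal("0")
    for amount in sales:
        total += amount
    return total


# Made-up data: 200,000 sales of 19.99 each, produced lazily by a generator.
sales_stream = iter_sales("19.99" for _ in range(200_000))
year_total = gross_total(sales_stream)
print(year_total)
```

Because only one amount is held at a time, the same function works for a thousand sales or hundreds of thousands; only the running time grows.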

Read and write operations on the database also increase, and performance degrades further if queries are not written to search, sort, and fetch data efficiently.

Then, there is the data that has to be sent over the network to clients. This can quickly exhaust the available bandwidth if not handled effectively. Clients, especially mobile clients, have limited memory to store data received from servers, so certain clients need specific consideration based on the amount of data they can handle.

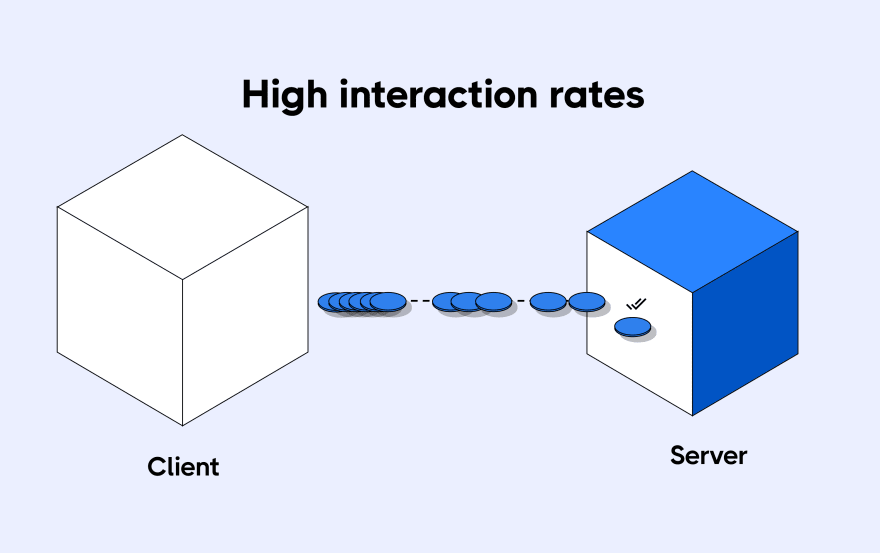

3) Ability to handle high interaction rates

Clients of applications like e-commerce sites make a fair number of requests to their backend servers. For some of these low-interaction sites, the time between consecutive requests can be as much as 10 to 100 seconds. However, imagine an online multiplayer game or a stock exchange application, where clients can make hundreds of requests to their backend servers within a few seconds.

For the high-interaction sites described above, latency is a major factor in performance. Such apps can hardly afford any delay, as users have to make quick decisions based on the real-time state of the application.

A single user interaction can fire as many as 5 requests to the server, so as interactions increase, the server needs to be scaled to keep responding quickly.

Different types of scaling strategies

Scalability is a huge topic and is covered in many books, seminars, video courses, and articles. There are even books dedicated to just one scaling strategy for a specific scenario. To be clear, no single scaling strategy solves all scalability problems; there are as many strategies as there are issues.

There are, however, certain industry-standard scalability strategies that help solve a lot of the common, but in no way simple, scalability issues. These strategies also have varying degrees of complexity and requirements, both in terms of technology and human resources.

Let's take a look at some of these strategies.

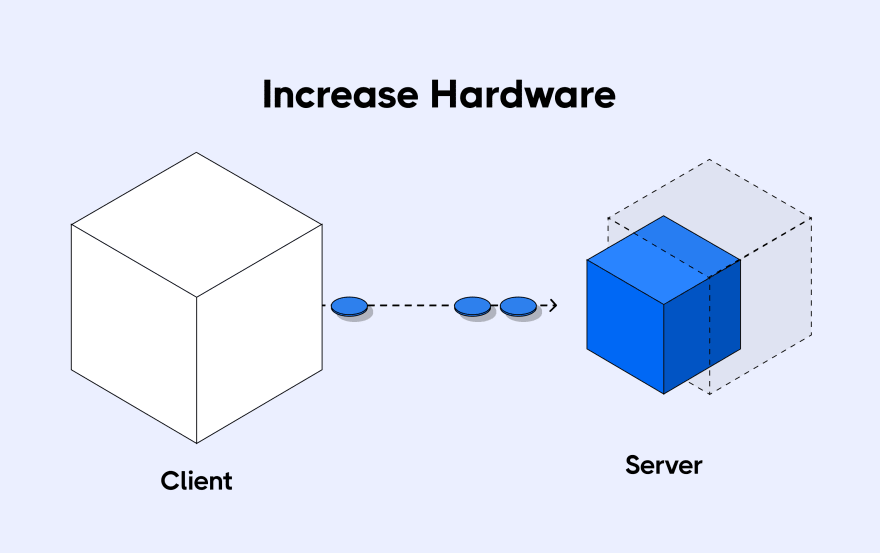

Increasing hardware capabilities

This is often referred to as vertical scaling. You can scale up your servers by adding more memory to expand the amount of data the software process can hold, and by increasing the number of CPU cores so that more threads can run in parallel with less context switching. You can also add more disk space for data, or replace hard disk drives (HDD) with solid-state drives (SSD) for faster reads and writes.

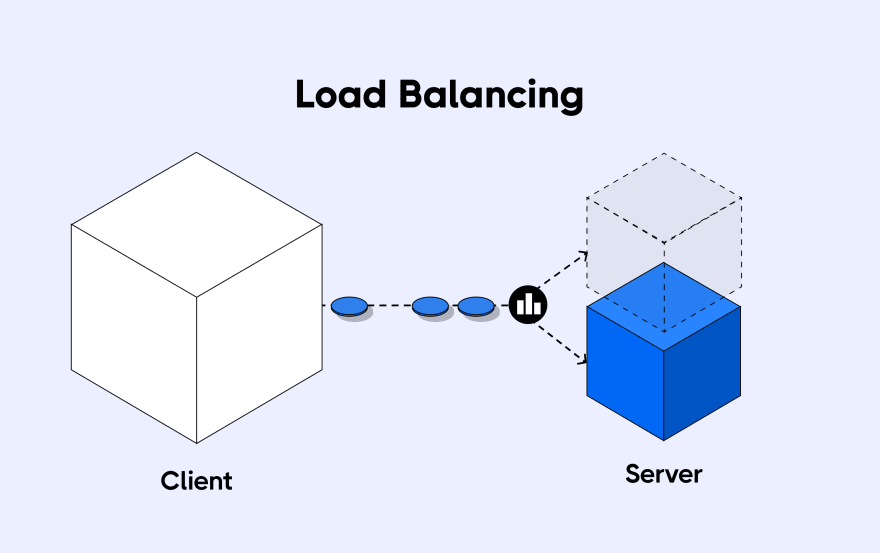

Load balancing

Load balancing goes hand in hand with horizontal scaling (adding more machines rather than bigger ones), and is considered more effective for handling concurrency and network traffic issues than vertical scaling. This strategy makes use of a proxy server known as a load balancer to distribute client requests across numerous instances of the backend service, which avoids overloading any single machine. Traffic can be distributed evenly (round-robin) or based on the current amount of load each instance is handling.

This strategy helps DevOps engineers to quickly scale up for traffic spikes by deploying more instances of the backend, and scale down by removing instances from the server pool when traffic reduces.
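The two distribution policies mentioned above can be sketched in a few lines. This is a simplified illustration, not how any particular load balancer is implemented; the `Backend` class, the instance names, and the connection counts are all hypothetical.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Iterator, List


@dataclass
class Backend:
    name: str
    active_connections: int = 0


class RoundRobinBalancer:
    """Hand requests to each backend in turn, regardless of load."""

    def __init__(self, backends: List[Backend]) -> None:
        self._rotation: Iterator[Backend] = cycle(backends)

    def pick(self) -> Backend:
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Hand each request to the backend currently handling the fewest connections."""

    def __init__(self, backends: List[Backend]) -> None:
        self._backends = backends

    def pick(self) -> Backend:
        return min(self._backends, key=lambda b: b.active_connections)


# Hypothetical pool of three backend instances.
pool = [Backend("app-1"), Backend("app-2"), Backend("app-3")]

rr = RoundRobinBalancer(pool)
rr_order = [rr.pick().name for _ in range(5)]

# Pretend the instances are under different load before asking for the least busy one.
pool[0].active_connections = 7
pool[1].active_connections = 2
pool[2].active_connections = 4
lc = LeastConnectionsBalancer(pool)
lc_choice = lc.pick().name
```

Scaling up then amounts to adding another `Backend` to the pool, and scaling down to removing one.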

CDN

Content delivery networks (CDNs) help solve latency issues by providing highly available and performant proxy servers for your static content. These servers are geographically distributed to serve users based on their proximity to the servers and data centers.

CDNs also help with bandwidth usage, as the bandwidth for the content fetched from them does not affect that of your servers. Latency for static content is also highly reduced.

CDNs are mostly operated by companies like Cloudflare with huge data centers and wide network coverage.

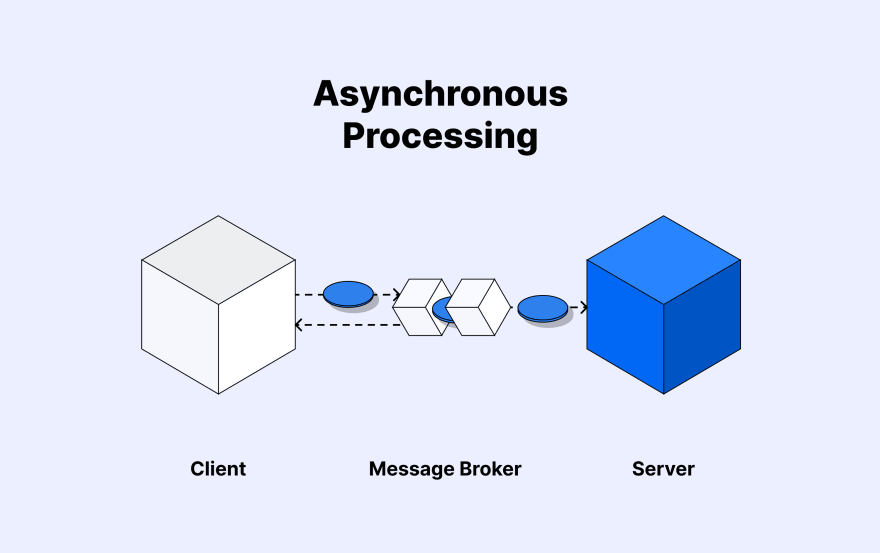

Asynchronous processing

Most server transactions follow a synchronous request/response process. This means that the client has to wait for the server to respond, and the server has to complete the requested task and send a response before it can move on to other work. These days, with microservices, service-oriented architectures, and the need to handle long-running tasks, the client cannot (always) afford to wait for a response from the server before performing other tasks. This has led to the adoption of message queues and pub/sub systems, which let clients carry on with other activities while the server processes tasks, and which also serve for inter-process communication between servers.

This gives rise to highly responsive applications that are decoupled and can easily adjust to system demands.
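As a rough illustration of the idea, the sketch below uses Python's in-process `queue.Queue` and a worker thread as a stand-in for a real message broker. The order IDs and the "invoice" task are made up; in production the queue would typically be a separate service.

```python
import queue
import threading

tasks = queue.Queue()
results = []


def worker() -> None:
    """Consume tasks in the background until a None sentinel arrives."""
    while True:
        order_id = tasks.get()
        if order_id is None:
            tasks.task_done()
            break
        # Stand-in for a long-running job such as generating an invoice.
        results.append(f"invoice generated for order {order_id}")
        tasks.task_done()


def handle_request(order_id: int) -> str:
    """Enqueue the slow work and respond immediately instead of waiting for it."""
    tasks.put(order_id)
    return f"order {order_id} accepted"


consumer = threading.Thread(target=worker, daemon=True)
consumer.start()

responses = [handle_request(order_id) for order_id in (101, 102, 103)]

tasks.put(None)   # tell the worker to stop once the queue is drained
tasks.join()      # wait until every queued item has been processed
consumer.join()
```

The request handler returns as soon as the task is queued, so the client is never blocked on the slow part of the work.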

GeoDNS

Latency increases when a request has to make a lot of network hops around servers across the world before it reaches the server that is to process it. GeoDNS gives a spatial advantage by allowing users to communicate with the servers closest to them.

With GeoDNS, you can map multiple IP addresses representing different servers to a single domain name so that users in an area are served by the server closest to them. This greatly reduces latency, as fewer network hops need to be made and clients receive responses faster.
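The selection logic can be sketched as a lookup that returns the nearest server for a given client location. This is a toy model under several assumptions: the IP addresses come from documentation-only ranges, the server coordinates are approximate, and the client location is assumed to be already known (a real GeoDNS service infers it from the resolver's or client's IP address).

```python
import math
from typing import Dict, Tuple

Location = Tuple[float, float]  # (latitude, longitude) in degrees

# Hypothetical records: one domain name mapped to several server IPs.
RECORDS: Dict[str, Dict[str, Location]] = {
    "example.com": {
        "192.0.2.10": (50.11, 8.68),       # roughly Frankfurt
        "198.51.100.10": (39.04, -77.49),  # roughly Northern Virginia
        "203.0.113.10": (1.35, 103.82),    # roughly Singapore
    }
}


def haversine_km(a: Location, b: Location) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def resolve(domain: str, client_location: Location) -> str:
    """Return the IP of the server geographically closest to the client."""
    servers = RECORDS[domain]
    return min(servers, key=lambda ip: haversine_km(client_location, servers[ip]))


lagos_ip = resolve("example.com", (6.52, 3.38))
tokyo_ip = resolve("example.com", (35.68, 139.69))
new_york_ip = resolve("example.com", (40.71, -74.00))
```

Geographic distance is only a proxy for network distance, so real services may also weigh measured latency or server health.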

Caching

Caching encourages reuse in data-intensive applications. Imagine having to make a network request to fetch your profile every time you visit your profile page on Facebook. Data such as profile information rarely changes, so it is wise to reuse the information fetched the first time it was loaded and only refresh it when the user updates their profile.

Caching helps drastically reduce the number of requests the client makes to the server and also improves speed, as cached information is faster to retrieve than trying to fetch it from the database.
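The profile example can be sketched as a small cache that sits in front of the data store and is invalidated on update. Everything here is hypothetical: `ProfileStore`, the in-memory "database" dictionary, and the sample user are illustrations rather than any real API.

```python
from typing import Dict


class ProfileStore:
    """Serve profiles from a cache and only hit the database on a miss."""

    def __init__(self, database: Dict[int, Dict[str, str]]) -> None:
        self._database = database
        self._cache: Dict[int, Dict[str, str]] = {}
        self.db_reads = 0  # counts how often the slow path is taken

    def _fetch_from_db(self, user_id: int) -> Dict[str, str]:
        self.db_reads += 1
        return dict(self._database[user_id])

    def get_profile(self, user_id: int) -> Dict[str, str]:
        if user_id not in self._cache:
            self._cache[user_id] = self._fetch_from_db(user_id)
        return self._cache[user_id]

    def update_profile(self, user_id: int, **changes: str) -> None:
        self._database[user_id].update(changes)
        # Drop the stale entry so the next read picks up the new data.
        self._cache.pop(user_id, None)


store = ProfileStore({42: {"name": "Ada", "city": "Lagos"}})

for _ in range(3):
    store.get_profile(42)
reads_before_update = store.db_reads

store.update_profile(42, city="Abuja")
fresh_profile = store.get_profile(42)
```

Three page visits cost a single database read, and the cache is refreshed only after the profile actually changes.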

Sharding

Remember the gross amount of total sales problem described above? Sharding is one of the strategies that can help with that.

Sharding allows you to split a single dataset across multiple databases and process each part individually. Instead of having all sales records in a single database, you can put each month's sales into its own database, process the months individually, and then combine the results to arrive at your gross calculation.

Distributing the data across multiple machines creates a cluster of database systems that can store larger datasets and handle more requests.
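The monthly split described above can be sketched with plain dictionaries standing in for separate databases. The shard key (the month of the sale), the function names, and the sample sales are all assumptions made for illustration.

```python
from datetime import date
from decimal import Decimal
from typing import Dict, List

# Each key stands in for a separate database holding one month's sales.
shards: Dict[int, List[Decimal]] = {}


def shard_key(sale_date: date) -> int:
    """Route a sale to a shard based on its month (1 to 12)."""
    return sale_date.month


def insert_sale(sale_date: date, amount: str) -> None:
    shards.setdefault(shard_key(sale_date), []).append(Decimal(amount))


def shard_total(month: int) -> Decimal:
    """Total for a single shard; each shard can be processed independently."""
    return sum(shards.get(month, []), Decimal("0"))


def gross_total() -> Decimal:
    """Combine the per-shard totals into the yearly figure."""
    return sum((shard_total(month) for month in shards), Decimal("0"))


# Made-up sales spread over three months.
insert_sale(date(2023, 1, 5), "100.00")
insert_sale(date(2023, 1, 20), "50.50")
insert_sale(date(2023, 3, 3), "25.25")
insert_sale(date(2023, 12, 31), "10.00")

january_total = shard_total(1)
year_total = gross_total()
```

Since each per-shard total depends only on that shard's data, the twelve calculations could run on different machines and in parallel before being combined.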

Conclusion

Scalability issues are inevitable for any software application that aims to grow, and most of the time they arrive suddenly. Not planning for them can have undesired consequences, which makes it important for software companies, especially startups, to stay one step ahead of scalability issues so that they do not lose the users they have worked so hard to get.

Original Link: https://dev.to/hookdeck/web-scalability-for-beginners-2i8c

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
