Face Detection And Analysis Using AWS Rekognition Service

Why Use Face Detection?

I was working on a project that required applicants to upload their passport photographs. A very simple task, right? I was using Node.js for the project, so I used the express-fileupload npm package and AWS S3 to upload the passport photographs to a particular S3 bucket. Along the way, I realized that people could upload pictures of cats and rats as profile pictures.

I have very little background in data science and I am not a machine learning expert, so I decided to explore possible solutions. Cloudinary's Face Detection APIs sounded nice, but I decided to go with AWS Rekognition, and I achieved the result I wanted. So let us dive into how that was implemented.

Getting Started

Step 1: Install the express-fileupload package and register it in your Express configuration. Then install the node-rekognition package, which will be used in your route.

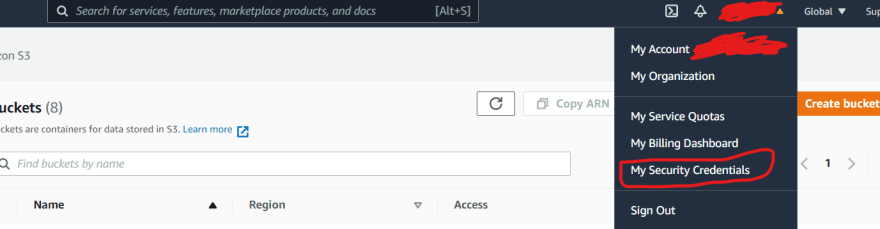

    npm install node-rekognition express-fileupload

Step 2: Get your accessKeyId and secretAccessKey from AWS (access keys are created under IAM, not in S3 itself)

Create a new access key if you don't already have one. Add the access key ID and secret access key to the environment variables of your Node.js project.
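Before creating the Rekognition client, it may help to fail fast when one of these variables is missing. The following is a minimal sketch, not part of node-rekognition: `loadAwsConfig` is a hypothetical helper, and it assumes the variable names used in this post's setup code (`accessKeyId`, `secretAccessKey`, `BUCKET_NAME`, `REGION`).

```javascript
// Hypothetical helper: collects the AWS settings from an environment object
// and throws early if any of them is missing or empty.
function loadAwsConfig(env) {
  const required = ['accessKeyId', 'secretAccessKey', 'BUCKET_NAME', 'REGION']
  const missing = required.filter((name) => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  return {
    accessKeyId: env.accessKeyId,
    secretAccessKey: env.secretAccessKey,
    bucket: env.BUCKET_NAME,
    region: env.REGION,
  }
}

// Usage (in a real project): const config = loadAwsConfig(process.env)
```

Passing the environment in as an argument, rather than reading `process.env` inside the function, keeps the helper easy to test.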

Setting Up AWS parameters

    const Rekognition = require('node-rekognition')

    const ID = process.env.accessKeyId
    const SECRET = process.env.secretAccessKey
    const BUCKET_NAME = process.env.BUCKET_NAME // The bucket you are saving your image to
    const REGION = process.env.REGION // The region the S3 bucket exists in

    const AWSRekognitionParams = {
      "accessKeyId": ID,
      "secretAccessKey": SECRET,
      "region": REGION,
      "bucket": BUCKET_NAME,
      "ACL": 'public-read'
    }

    const rekognition = new Rekognition(AWSRekognitionParams)

Detecting Face, Face Clarity, and Face Position

An example response from Rekognition looks like this:

    {
      "FaceDetails": [
        {
          "BoundingBox": {
            "Width": 0.1845039427280426,
            "Height": 0.3602786660194397,
            "Left": 0.4228478670120239,
            "Top": 0.23032832145690918
          },
          "Landmarks": [
            { "Type": "eyeLeft", "X": 0.4736528992652893, "Y": 0.3472210466861725 },
            { "Type": "eyeRight", "X": 0.5531499981880188, "Y": 0.3597199618816376 },
            { "Type": "mouthLeft", "X": 0.47176629304885864, "Y": 0.46856561303138733 },
            { "Type": "mouthRight", "X": 0.5382513999938965, "Y": 0.47889336943626404 },
            { "Type": "nose", "X": 0.5135499835014343, "Y": 0.415280282497406 }
          ],
          "Pose": {
            "Roll": 5.678436279296875,
            "Yaw": 4.739815711975098,
            "Pitch": 11.44533634185791
          },
          "Quality": {
            "Brightness": 86.91543579101562,
            "Sharpness": 89.85481262207031
          },
          "Confidence": 99.9940414428711
        }
      ]
    }

Rekognition returns a FaceDetails attribute, which is an array of objects. Each object contains data about one detected face. In my case, only one face is allowed in the image, so the FaceDetails array in the Rekognition response should contain exactly one object. Each object comes with attributes such as BoundingBox, Landmarks, Pose, Quality, and Confidence, all of which describe the detected face.

To judge how clear the picture is, I used the Brightness and Sharpness attributes of Quality. For the direction the face is facing, I used the Pose attribute of the face object.
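The "exactly one face" rule can be sketched as a small function that works on the response object alone. `checkFaceCount` and its messages are hypothetical names chosen for illustration; only the `FaceDetails` array comes from the Rekognition response.

```javascript
// Hypothetical helper: returns an error message when the response does not
// contain exactly one face, or null when it does.
function checkFaceCount(response) {
  const faces = response && Array.isArray(response.FaceDetails)
    ? response.FaceDetails
    : []
  if (faces.length === 0) return 'No face detected'
  if (faces.length > 1) return 'More than one face detected'
  return null
}

console.log(checkFaceCount({ FaceDetails: [] }))             // 'No face detected'
console.log(checkFaceCount({ FaceDetails: [{}, {}] }))       // 'More than one face detected'
console.log(checkFaceCount({ FaceDetails: [{ Pose: {} }] })) // null
```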
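A clarity check based on Quality might look like the sketch below. The thresholds are assumptions made up for illustration, not values recommended by AWS; Rekognition reports Brightness and Sharpness on a 0 to 100 scale, so they would need tuning against real photographs. `isClearEnough` is a hypothetical helper name.

```javascript
// Assumed thresholds for illustration only; tune them against real photos.
const MIN_BRIGHTNESS = 40
const MIN_SHARPNESS = 40

// Hypothetical helper: true when both quality scores meet their minimum.
function isClearEnough(quality, minBrightness = MIN_BRIGHTNESS, minSharpness = MIN_SHARPNESS) {
  return quality.Brightness >= minBrightness && quality.Sharpness >= minSharpness
}

// With the Quality values from the example response:
const exampleQuality = { Brightness: 86.91543579101562, Sharpness: 89.85481262207031 }
console.log(isClearEnough(exampleQuality)) // true
```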

Yaw is the rotation of the detected face about the y-axis.

Pitch is the rotation of the detected face about the x-axis.

Roll is the rotation of the detected face about the z-axis.

To describe the 3D rotation of images mathematically:

R = R(yaw) R(pitch) R(roll)

Yaw is in [-π, π]

Pitch is in [-π/2, π/2]

Roll is in [-π, π]

where π radians is 180° and π/2 radians is 90°, so to simplify:

Yaw is between [-180°, 180°]

Pitch is between [-90°, 90°]

Roll is between [-180°, 180°]
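The radian to degree conversion behind these ranges is a single multiplication. A minimal sketch, with `toDegrees` as a hypothetical helper name:

```javascript
// Converts an angle in radians to degrees.
function toDegrees(radians) {
  return radians * 180 / Math.PI
}

console.log(toDegrees(Math.PI))     // approximately 180
console.log(toDegrees(Math.PI / 2)) // approximately 90
```

The Pose values in the example response are already expressed in degrees, so no conversion is needed before comparing them to a limit.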

An image whose Yaw, Pitch, and Roll are all close to 0, whether negative or positive, shows a face looking straight at the camera. Let us assume that for a passport photograph the face should not be rotated more than 20° in any of the three directions.

The code below puts the explanations above into practice.

In your route file:
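The 20 degree rule can be expressed compactly by comparing the absolute value of each angle to the limit. This is a sketch under that assumption; `isFacingForward` is a hypothetical helper name, and the limit is just the value chosen in this post.

```javascript
// Hypothetical helper: true when yaw, pitch and roll are all within
// maxDegrees of zero, in either direction.
function isFacingForward(pose, maxDegrees = 20) {
  return [pose.Yaw, pose.Pitch, pose.Roll].every(
    (angle) => Math.abs(angle) <= maxDegrees
  )
}

// Pose values taken from the example response:
const examplePose = { Roll: 5.678436279296875, Yaw: 4.739815711975098, Pitch: 11.44533634185791 }
console.log(isFacingForward(examplePose))                    // true
console.log(isFacingForward({ Roll: 0, Yaw: 35, Pitch: 0 })) // false
```

Using `Math.abs` avoids writing a separate lower and upper bound check for each of the three angles.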

    router.post("/upload", async (req, res) => {
      try {
        const FaceDegree = 20.0;

        /* req.files contains the uploaded files; the key of the file sent to this route is passport_photo */
        const imageFaces = await rekognition.detectFaces(req.files['passport_photo'].data)

        // Reject images with no detected face
        if (!imageFaces.hasOwnProperty('FaceDetails') || !imageFaces.FaceDetails.length) {
          return res.status(422).json({message: 'Please upload a passport photograph that has your face on it'})
        }

        // Reject images with more than one detected face
        if (imageFaces.FaceDetails.length > 1)
          return res.status(422).json({message: "Please upload a passport photograph with only your face on it"})

        let FaceDetails = imageFaces.FaceDetails[0];
        let Pose = FaceDetails.Pose
        let Yaw = Pose.Yaw
        let Pitch = Pose.Pitch
        let Roll = Pose.Roll
        let Quality = FaceDetails.Quality
        let Brightness = Quality.Brightness // available for a clarity check

        // Reject faces rotated more than FaceDegree in any direction
        if (Yaw < -FaceDegree || Yaw > FaceDegree || Pitch < -FaceDegree || Pitch > FaceDegree || Roll < -FaceDegree || Roll > FaceDegree)
          return res.status(422).json({message: "Please upload a passport photograph with good face positioning"})

        // Now you can go ahead and upload the passport photograph to wherever you want it to go.
      } catch (err) {
        return res.status(422).json(err)
      }
    })

Conclusion

In conclusion, we can now detect faces in uploaded pictures, check the clarity of the faces, and determine the direction they are facing. You can check the pricing of this service on the AWS Rekognition pricing page.

Thank you. May the codes be with us.

Original Link: https://dev.to/10xdev/face-detection-and-analysis-using-aws-rekognition-service-2k1g
