Upload a file larger than RAM size in Go

In recent years, more and more single board computers (SBCs) with processing power similar to that of a full-size PC from 10 to 12 years ago have appeared on the market. It is not hard to find an SBC that costs around 10 USD and comes with a 1000 Mbps Ethernet connection and a multi-core CPU.

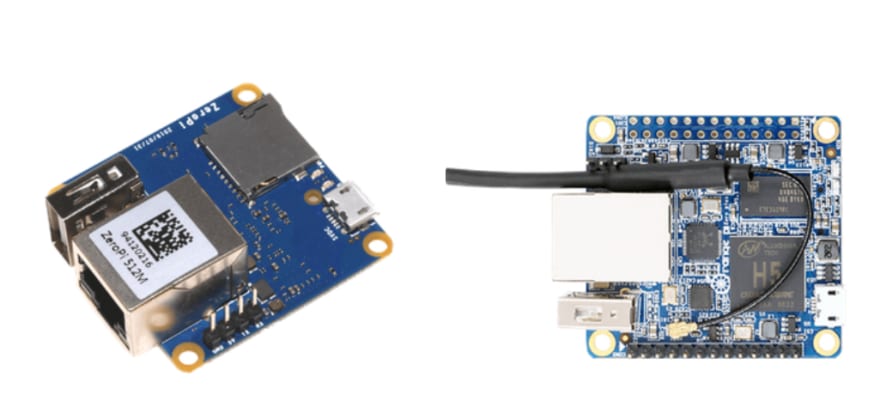

Here are two of the boards with 1000 Mbps Ethernet that I have been using: the ZeroPi and the OrangePi Zero Plus.

So, is it possible to make a NAS out of it?

As a file server, such a board is perfectly adequate:

- CPU: H3 / H5 @ 1.3 GHz (OK for a file server)

- 1000Mbps Ethernet connection

- USB 2.0 port (for a USB thumb drive; still OK with a well-written caching algorithm and a fast SD card)

- 40 x 42 mm in size

- Draws only 0.2 - 0.3 A at 5 V, so it is very power efficient

I have been doing exactly that since 2018, but there is a catch...

Problem: it only has 512 MB of RAM!

Even the cheapest model from Synology features more than 512 MB of RAM. So here comes the question:

Is there any way to make good use of this board and allow uploading files that are larger than its RAM?

Upload under Normal Condition

Let's say you are running your Go program on top of Armbian on a ZeroPi (512 MB RAM). If you follow the tutorials online, you will see this kind of file upload handler code for Go everywhere.

```go
// Keep at most 10 MB of the form in memory; the remainder of the
// upload is written to temporary files.
r.ParseMultipartForm(10 << 20)
file, handler, err := r.FormFile("myFile")
if err != nil {
	fmt.Println("Error Retrieving the File")
	fmt.Println(err)
	return
}
defer file.Close()
// Then you copy the file to the destination.
```

Under normal conditions, this works perfectly fine. When you upload a file, the multipart form data is buffered by the server: up to 10 MB in memory here, with the rest in temporary files under /tmp, which on Armbian is typically a RAM-backed tmpfs. Once the handler is done with the file, the buffered copy is released.

However, this only works as long as you have enough RAM and swap to hold the whole file. If you try the same thing on a system with this little RAM, the upload fails and your program gets killed by the OS.

Let us take a look at how ParseMultipartForm works. In simple terms, it works like this:

- Your browser builds an HTTP form and puts the file content into it

- The browser sends the form to the server as a single HTTP request

- The request body is split into several parts separated by a boundary string, one part per field or file. That encoding is what is called a "multipart form".

- Go (the net/http library) reads the whole request body, keeps up to the given limit in memory and writes the rest to temporary files. On Debian-based systems these all go into /tmp, which on Armbian is typically backed by RAM.

- If your file is larger than your RAM size, /tmp fills up and the OS starts reporting "no space left on device" errors

- The Go program keeps writing anyway and finally gets killed by the OS.
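To see where an upload ends up, here is a small self-contained sketch (not part of the original handler). It builds a multipart request in memory with `net/http/httptest`, parses it with a given `maxMemory`, and reports whether the file part was written to a temporary file. The helper name `spilledToDisk`, the field name and the sizes are made up for illustration. The check relies on the documented behaviour that a `multipart.File` stored on disk has the concrete type `*os.File`.

```go
package main

import (
	"bytes"
	"fmt"
	"mime/multipart"
	"net/http"
	"net/http/httptest"
	"os"
)

// spilledToDisk (hypothetical helper) uploads `size` zero bytes as a single
// multipart file part, parses the request with the given maxMemory, and
// reports whether Go stored the part in a temporary file instead of memory.
func spilledToDisk(size int, maxMemory int64) (bool, error) {
	var body bytes.Buffer
	mw := multipart.NewWriter(&body)
	part, err := mw.CreateFormFile("myFile", "demo.bin")
	if err != nil {
		return false, err
	}
	if _, err := part.Write(make([]byte, size)); err != nil {
		return false, err
	}
	if err := mw.Close(); err != nil {
		return false, err
	}

	req := httptest.NewRequest(http.MethodPost, "/upload", &body)
	req.Header.Set("Content-Type", mw.FormDataContentType())

	if err := req.ParseMultipartForm(maxMemory); err != nil {
		return false, err
	}
	// Remove any temporary files created while parsing.
	defer req.MultipartForm.RemoveAll()

	file, _, err := req.FormFile("myFile")
	if err != nil {
		return false, err
	}
	defer file.Close()

	// A part stored on disk is handed back as an *os.File.
	_, onDisk := file.(*os.File)
	return onDisk, nil
}

func main() {
	small, err := spilledToDisk(1<<10, 1<<20) // 1 KB upload, 1 MB limit
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	large, err := spilledToDisk(2<<20, 1<<20) // 2 MB upload, 1 MB limit
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("1 KB spilled to disk:", small)
	fmt.Println("2 MB spilled to disk:", large)
}
```

On a system where the temporary directory is RAM-backed, the "on disk" case still consumes memory, which is the situation described above.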

To solve this issue, there are two options:

1. Write a new request handling library

2. Do not use ParseMultipartForm

As option 1 is too much trouble for me, I decided to go with option 2.

WebSocket comes to the rescue!

Although many of you may think of WebSocket as something for chat rooms or online games, which in most cases send text-based data, it can also be used to send binary data. So, the new idea is simple:

Break the file into chunks and send them to the server using WebSocket

Yes, it is that simple. In the following example, I use a chunk size of 4 MB and upload the chunks to the Go server endpoint.
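To make the chunk arithmetic concrete, here is a minimal Go sketch of how a file size maps to chunk ids and byte ranges with 4 MB chunks. The names `chunkCount` and `chunkRange` and the example file size are hypothetical and only serve as illustration.

```go
package main

import "fmt"

// chunkSize mirrors the 4 MB chunk size used in this article.
const chunkSize int64 = 4 << 20

// chunkCount returns how many chunks are needed to cover fileSize bytes
// (integer ceiling division).
func chunkCount(fileSize int64) int64 {
	return (fileSize + chunkSize - 1) / chunkSize
}

// chunkRange returns the half-open byte range [start, end) of chunk id,
// clamping the last chunk to the end of the file.
func chunkRange(id, fileSize int64) (start, end int64) {
	start = id * chunkSize
	end = start + chunkSize
	if end > fileSize {
		end = fileSize
	}
	return start, end
}

func main() {
	fileSize := int64(10<<20 + 123) // hypothetical file: 10 MB plus 123 bytes
	n := chunkCount(fileSize)
	fmt.Println("chunks:", n)
	for id := int64(0); id < n; id++ {
		start, end := chunkRange(id, fileSize)
		fmt.Printf("chunk %d: bytes [%d, %d)\n", id, start, end)
	}
}
```

For this example size the file is covered by three chunks, and the last one is shorter than 4 MB.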

First, create a socket connection and a function that sends the chunk with a given id.

```javascript
// `file` is assumed to be a File object, e.g. from an <input type="file">
const uploadFileChunkSize = 4 * 1024 * 1024; // 4 MB per chunk

// Build an absolute ws:// or wss:// URL for the upload endpoint
let protocol = location.protocol === "https:" ? "wss://" : "ws://";
let socket = new WebSocket(protocol + location.host + "/api/upload");
let currentSendingIndex = 0;
let chunks = Math.ceil(file.size / uploadFileChunkSize);

//Send a given chunk by its id
function sendChunk(id){
    var offsetStart = id * uploadFileChunkSize;
    var offsetEnd = offsetStart + uploadFileChunkSize;
    var thisblob = file.slice(offsetStart, offsetEnd);
    socket.send(thisblob);

    //Update progress as a percentage
    var progress = (id + 1) / chunks * 100.0;
    if (progress > 100){
        progress = 100;
    }
    console.log("Progress (%): ", progress);
}
```

Then, there is one question left.

Is socket.send() an async function?

The answer is yes: it only queues the data for transmission and returns immediately. So, we cannot just put the whole sendChunk logic inside a for loop, as that would queue every chunk at once. We need to get a response from the server before sending the next one. The idea is:

1. Wait for the socket to open

2. Send chunk 0 (programmers count from 0 :) )

3. Wait for the server to respond with "next"

4. On receiving "next", send the next chunk

5. Repeat steps 3 and 4 until all chunks have been sent

6. Send "done" to the server to tell it to start merging the file chunks

7. Wait for the server to return "OK" when merging has finished

8. Close the socket connection.
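The handshake in the steps above can be tried out without a browser. The following sketch is only a simulation: Go channels stand in for the WebSocket connection, and the `message` type and the `server`, `client` and `upload` functions are hypothetical names, not part of any library. It shows that exactly one chunk is in flight at a time.

```go
package main

import "fmt"

// message stands in for a WebSocket frame: binary chunk data or a text command.
type message struct {
	binary bool
	data   []byte
	text   string
}

// server stores each incoming chunk and replies "next"; on "done" it replies
// "OK" and stops. In the real server the merge would happen before "OK".
func server(fromClient <-chan message, toClient chan<- string, received *[][]byte) {
	for msg := range fromClient {
		if msg.binary {
			*received = append(*received, msg.data)
			toClient <- "next"
			continue
		}
		if msg.text == "done" {
			toClient <- "OK"
			return
		}
	}
}

// client sends chunk 0, then one more chunk per "next" reply, then "done".
// It assumes at least one chunk.
func client(chunks [][]byte, toServer chan<- message, fromServer <-chan string) {
	toServer <- message{binary: true, data: chunks[0]}
	next := 1
	for reply := range fromServer {
		switch reply {
		case "next":
			if next >= len(chunks) {
				toServer <- message{text: "done"}
			} else {
				toServer <- message{binary: true, data: chunks[next]}
				next++
			}
		case "OK":
			close(toServer) // corresponds to closing the socket
			return
		}
	}
}

// upload wires client and server together and returns what the server stored.
func upload(chunks [][]byte) [][]byte {
	toServer := make(chan message)
	toClient := make(chan string)
	var received [][]byte
	go server(toServer, toClient, &received)
	client(chunks, toServer, toClient)
	return received
}

func main() {
	got := upload([][]byte{[]byte("first "), []byte("second "), []byte("third")})
	fmt.Println("chunks received:", len(got))
	for _, c := range got {
		fmt.Print(string(c))
	}
	fmt.Println()
}
```

Because the channels are unbuffered, the client cannot send a chunk until the server has taken the previous one, which mirrors waiting for "next" before calling send again.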

If you implement that in JavaScript, it looks something like this:

```javascript
//Start sending
socket.onopen = function(e) {
    //Send the first chunk
    sendChunk(0);
    currentSendingIndex++;
};

//On Server -> Client message
socket.onmessage = function(event) {
    var incomingValue = event.data;
    if (incomingValue == "next"){
        if (currentSendingIndex >= chunks){
            //All chunks sent, ask the server to merge them
            socket.send("done");
        }else{
            //Send next chunk
            sendChunk(currentSendingIndex);
            currentSendingIndex++;
        }
    }else if (incomingValue == "OK"){
        console.log("Upload Completed!");
        socket.close();
    }
}
```

As for the Go server side, I am using Gorilla WebSocket. You can see more over here: https://github.com/gorilla/websocket

In the example below, I only show the most important part of the logic.

```go
// Only the core logic is shown. w, r, taskUUID, userinfo, targetUploadLocation,
// blockCounter and lastChunkArrivalTime are defined elsewhere in the handler.

//Define upload task parameters
uploadFolder := "./upload/" + taskUUID
chunkName := []string{}
os.MkdirAll(uploadFolder, 0755)

//Start websocket connection
var upgrader = websocket.Upgrader{}
c, err := upgrader.Upgrade(w, r, nil)
if err != nil {
	log.Println("Failed to upgrade connection: ", err.Error())
	return
}
defer c.Close()

//Listen for incoming blob / string
for {
	mt, message, err := c.ReadMessage()
	if err != nil {
		//Connection closed by client. Clear the tmp folder and exit
		log.Println("Upload terminated by client. Cleaning tmp folder.")
		time.Sleep(1 * time.Second)
		os.RemoveAll(uploadFolder)
		return
	}
	//mt is BinaryMessage (2) for file data and TextMessage (1) for control messages
	if mt == websocket.TextMessage {
		msg := strings.TrimSpace(string(message))
		if msg == "done" {
			//Start the merging process
			log.Println(userinfo.Username + " uploaded a file: " + targetUploadLocation)
			break
		} else {
			//Unknown operations
		}
	} else if mt == websocket.BinaryMessage {
		//File block. Save it to tmp folder
		chunkPath := filepath.Join(uploadFolder, "upld_"+strconv.Itoa(blockCounter))
		if err := os.WriteFile(chunkPath, message, 0700); err != nil {
			log.Println("Failed to write chunk", chunkPath, " with error ", err.Error())
			os.RemoveAll(uploadFolder)
			return
		}
		chunkName = append(chunkName, chunkPath)
		blockCounter++
		//Update the last upload chunk time
		lastChunkArrivalTime = time.Now().Unix()
		//Request client to send the next chunk
		c.WriteMessage(websocket.TextMessage, []byte("next"))
	}
}

//Merge the file chunks
out, err := os.OpenFile(targetUploadLocation, os.O_CREATE|os.O_WRONLY, 0755)
if err != nil {
	log.Println("Failed to create target file with error ", err.Error())
	c.WriteMessage(websocket.TextMessage, []byte(`{"error":"Failed to create target file"}`))
	return
}
defer out.Close()
for _, filesrc := range chunkName {
	srcChunkReader, err := os.Open(filesrc)
	if err != nil {
		log.Println("Failed to open Source Chunk", filesrc, " with error ", err.Error())
		c.WriteMessage(websocket.TextMessage, []byte(`{"error":"Failed to open Source Chunk"}`))
		return
	}
	io.Copy(out, srcChunkReader)
	srcChunkReader.Close()
}

//Return complete signal
c.WriteMessage(websocket.TextMessage, []byte("OK"))

//Clear the tmp folder
os.RemoveAll(uploadFolder)

//Close WebSocket connection after finished
c.WriteControl(websocket.CloseMessage, []byte{}, time.Now().Add(time.Second))
c.Close()
```

Afterward, I updated the ArozOS file manager with the new code. Now it works when I upload a 1 - 2 GB file to an SBC with only 512 MB of RAM.
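For the merge step on its own, a standalone sketch with fuller error handling might look like the following. It is not the ArozOS implementation. The function names `mergeChunks`, `appendChunk` and `mergeDemo` are hypothetical, and the demo works on small temporary files. Each chunk is streamed with `io.Copy`, so memory use does not grow with the size of the final file.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"path/filepath"
)

// mergeChunks concatenates the chunk files, in order, into dst.
func mergeChunks(dst string, chunkPaths []string) (err error) {
	out, err := os.OpenFile(dst, os.O_CREATE|os.O_WRONLY|os.O_TRUNC, 0644)
	if err != nil {
		return fmt.Errorf("create %s: %w", dst, err)
	}
	defer func() {
		// Report a failed Close only if nothing else went wrong first.
		if cerr := out.Close(); err == nil {
			err = cerr
		}
	}()

	for _, p := range chunkPaths {
		if err := appendChunk(out, p); err != nil {
			return err
		}
	}
	return nil
}

// appendChunk streams one chunk file into out and closes the chunk afterwards.
func appendChunk(out io.Writer, path string) error {
	src, err := os.Open(path)
	if err != nil {
		return fmt.Errorf("open chunk %s: %w", path, err)
	}
	defer src.Close()
	if _, err := io.Copy(out, src); err != nil {
		return fmt.Errorf("copy chunk %s: %w", path, err)
	}
	return nil
}

// mergeDemo writes three small chunk files into a temporary folder, merges
// them, and returns the merged content.
func mergeDemo() (string, error) {
	dir, err := os.MkdirTemp("", "merge-demo-")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)

	var paths []string
	for i, part := range []string{"hello, ", "chunked ", "world"} {
		path := filepath.Join(dir, fmt.Sprintf("upld_%d", i))
		if err := os.WriteFile(path, []byte(part), 0600); err != nil {
			return "", err
		}
		paths = append(paths, path)
	}

	dst := filepath.Join(dir, "merged.txt")
	if err := mergeChunks(dst, paths); err != nil {
		return "", err
	}
	data, err := os.ReadFile(dst)
	return string(data), err
}

func main() {
	merged, err := mergeDemo()
	if err != nil {
		fmt.Println("merge failed:", err)
		return
	}
	fmt.Println(merged)
}
```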

Of course, as there is no RAM buffer for the file upload, the maximum upload speed depends heavily on your SD card's write speed. But at least it works, right?

Original Link: https://dev.to/tobychui/upload-a-file-larger-than-ram-size-in-go-4m2i

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
