Boost your terraform automation

After some time working with CloudFormation to manage infrastructure on AWS, a few months ago I switched jobs and started to work with terraform. I feel that the learning curve was really smooth, despite the differences between these tools.

Having used both, I feel that HCL is great for avoiding all the mess you can get into with YAML. And if you use multiple cloud services (for example Fastly or Cloudflare as a CDN instead of CloudFront) or run a couple of workloads on other cloud providers such as GCP or Azure, terraform lets you manage everything with a single tool and language.

I was also surprised by the number of useful tools and resources available for terraform (check out the Awesome Terraform repository!).

Terraform workflow

The typical terraform workflow might look similar to this:

You start by opening a pull request against the repository that contains the infrastructure code. The first step is to check that the files are well formatted by running `terraform fmt -check`. After that, you run `terraform init` to initialize the working directory. This downloads the providers and modules you are using and initializes the backend, where the state is stored.

The third step is to run `terraform plan`, which outputs the changes that will be made to the infrastructure based on your code modifications. You can then analyze this output and see what will be modified, created and/or deleted. If you and your peers are happy with the output, your pull request gets approved, you merge it into the main branch and run `terraform apply`, which applies the changes. Otherwise, you push another commit to fix what is wrong and the workflow starts from the beginning. Pretty straightforward, right?

Apply and... Fail!

However, even if the plan executes successfully and the output looks fine to you and your peers, the `terraform apply` command can still fail. Apply is not transactional, so a failure partway through leaves your infrastructure in an inconsistent state until someone applies a fix. The apply command can fail for multiple reasons, such as a typo in the instance type or missing permissions on an IAM role that make a specific operation impossible.

So, what can we do to reduce the likelihood of failing at the apply step?

In this blog post, I will briefly introduce some tools you can add to your terraform workflow to help you avoid this problem and, on top of that, to add automated checks for security and infrastructure costs.

TFLint

terraform-linters / tflint

TFLint is a Terraform linter focused on possible errors, best practices, etc. (Terraform >= 0.12)

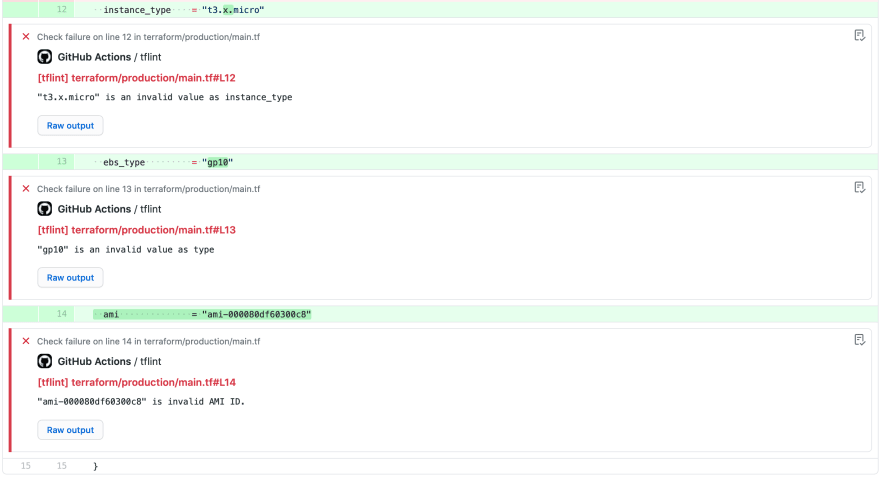

Let's start with TFLint, a static code analysis tool focused on catching possible errors and enforcing best practices. Let's check the following example:

In this pull request, simply by adding the reviewdog GitHub Action for TFLint, I got an automated review reporting that the instance type, the EBS volume type and the AMI ID were invalid. These kinds of errors are easy to catch, but also easy to miss during review. They would also make the apply fail, as described above.

TFLint has more than 700 rules available. It also supports custom rules, for example to enforce naming patterns for S3 buckets. It supports AWS, Azure and GCP.
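TFLint custom rules are typically written as Go plugins on top of its plugin SDK, which is not shown here. The sketch below only illustrates the check such a rule might run on the `bucket` argument of an `aws_s3_bucket`. It tests a subset of the general S3 naming rules and a hypothetical `acme-<env>-<purpose>` team convention. The prefix, the environment names and the messages are made up for illustration.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// A subset of the general S3 bucket naming rules: 3 to 63 characters made of
// lowercase letters, digits, dots and hyphens, starting and ending with a
// letter or digit.
var s3NameRule = regexp.MustCompile(`^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$`)

// Hypothetical team convention: acme-<environment>-<purpose>.
var teamConvention = regexp.MustCompile(`^acme-(dev|staging|prod)-[a-z0-9-]+$`)

// checkBucketName returns the issues a custom lint rule would report for a
// bucket name. An empty result means the name passes both checks.
func checkBucketName(name string) []string {
	var issues []string
	// Adjacent periods are not allowed in S3 bucket names either.
	if !s3NameRule.MatchString(name) || strings.Contains(name, "..") {
		issues = append(issues, "not a valid S3 bucket name")
	}
	if !teamConvention.MatchString(name) {
		issues = append(issues, "does not follow the acme-<env>-<purpose> convention")
	}
	return issues
}

func main() {
	for _, name := range []string{"acme-prod-logs", "awesome-bucket", "Acme_Prod"} {
		fmt.Printf("%-16s %v\n", name, checkBucketName(name))
	}
}
```

The two checks are kept separate on purpose. A name can be valid for S3 and still break the team's convention, and the reviewer should see which rule fired.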

Terratest

gruntwork-io / terratest

Terratest is a Go library that makes it easier to write automated tests for your infrastructure code.

And what if we could actually test the apply step to check whether it will run successfully? We can run the apply step in a different environment and make automated assertions on its side effects. That lets us detect problems before running it in production. For this we have Terratest, a Go library for writing automated tests for infrastructure code.

localstack / localstack

A fully functional local AWS cloud stack. Develop and test your cloud & Serverless apps offline!

The first time I tried Terratest, I used it with LocalStack, an AWS "emulator" you can run locally on your machine or anywhere else. I had two main reasons for this experiment. I wanted to avoid needing a separate AWS account to run the tests. I also wanted to run them locally and discard everything easily just by destroying LocalStack's Docker container.

To make terraform run against your LocalStack container, you have to add some settings to your provider block, as you can see in the following example. The most important part is the endpoints block, which should contain the URL for each AWS service your terraform code interacts with.

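```hcl
provider "aws" {
  region     = "us-east-1"
  access_key = "mock_access_key"
  secret_key = "mock_secret_key"

  # Don't validate the fake credentials or look up account details
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  # Path-style S3 URLs (localhost:4566/bucket); this argument was renamed
  # to s3_use_path_style in version 4 of the AWS provider
  s3_force_path_style = true

  # Every service used in the code must point at LocalStack's edge port
  endpoints {
    ec2 = "http://localhost:4566"
    iam = "http://localhost:4566"
    s3  = "http://localhost:4566"
  }
}
```

And here's an example of a simple test that checks whether the bucket named "awesome-bucket" has versioning enabled: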

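```go
package test

import (
	"testing"

	"github.com/gruntwork-io/terratest/modules/aws"
	"github.com/gruntwork-io/terratest/modules/terraform"
	"github.com/stretchr/testify/assert"
)

func TestS3BucketVersioning(t *testing.T) {
	awsRegion := "us-east-1" // same region as the provider block

	terraformOptions := &terraform.Options{
		TerraformDir: "../../local",
		EnvVars: map[string]string{
			"AWS_REGION": awsRegion,
		},
	}
	// Destroy everything at the end, even if an assertion fails
	defer terraform.Destroy(t, terraformOptions)
	terraform.InitAndApply(t, terraformOptions)

	actualStatus := aws.GetS3BucketVersioning(t, awsRegion, "awesome-bucket")
	expectedStatus := "Enabled"
	assert.Equal(t, expectedStatus, actualStatus)
}
```

Unfortunately, this test does not run successfully with LocalStack (yet!). The assertion that checks whether the bucket has versioning enabled ignores the endpoint set in terraform and calls the real AWS endpoint, so the assertion fails. There is an open issue about this in the terratest repository, as you can see below: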

Assert failing if you are using local configuration for testing (i.e: localstack locally) #494

I'm using localstack locally to test my terraform code:

This is my provider configuration

```hcl
provider "aws" {
  access_key                  = "mock_access_key"
  region                      = "eu-west-2"
  s3_force_path_style         = true
  secret_key                  = "mock_secret_key"
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  endpoints {
    apigateway     = "http://localhost:4567"
    cloudformation = "http://localhost:4581"
    cloudwatch     = "http://localhost:4582"
    dynamodb       = "http://localhost:4569"
    es             = "http://localhost:4578"
    firehose       = "http://localhost:4573"
    iam            = "http://localhost:4593"
    kinesis        = "http://localhost:4568"
    lambda         = "http://localhost:4574"
    route53        = "http://localhost:4580"
    redshift       = "http://localhost:4577"
    s3             = "http://localhost:4572"
    secretsmanager = "http://localhost:4584"
    ses            = "http://localhost:4579"
    sns            = "http://localhost:4575"
    sqs            = "http://localhost:4576"
    ssm            = "http://localhost:4583"
    stepfunctions  = "http://localhost:4585"
    sts            = "http://localhost:4592"
  }
}
```

I.e.: I'm using the aws.AssertS3BucketExists method

But I keep receiving an error about the credentials (in my case I have fake ones and receive a 403). This means the aws import dependency is not using the terraform provider configuration and keeps using the default AWS CLI config credentials.

I checked the code and realized that the module https://github.com/gruntwork-io/terratest/blob/master/modules/aws/auth.go doesn't have an option to use custom local service endpoints

There's already an ongoing fix for this problem, and I tried it out by following its detailed instructions. Basically, you have to set the endpoints again in the terratest code, like this:

```go
// The same endpoints as in the provider block, set again for terratest's own AWS clients
var LocalEndpoints = map[string]string{
	"iam": "http://localhost:4566",
	"s3":  "http://localhost:4566",
	"ec2": "http://localhost:4566",
}

func TestS3BucketVersioning(t *testing.T) {
	// Must run before terratest's aws.* helpers create their AWS clients
	aws.SetAwsEndpointsOverrides(LocalEndpoints)

	// ... terraform.Options, InitAndApply and assertions as in the previous test
}
```

On top of this "bump in the road", I also ran into other issues. For example, the tests were flaky because the container got stuck during resource creation. In the end, I felt that I was spending more time dealing with LocalStack than writing new tests and getting value out of them. I hope it becomes more reliable in the future. For now, if you want to use terratest to test your infrastructure, I recommend running it against a sandbox AWS account where you can run aws-nuke or cloud-nuke once in a while.

Checkov

bridgecrewio / checkov

Prevent cloud misconfigurations during build-time for Terraform, Cloudformation, Kubernetes, Serverless framework and other infrastructure-as-code-languages with Checkov by Bridgecrew.

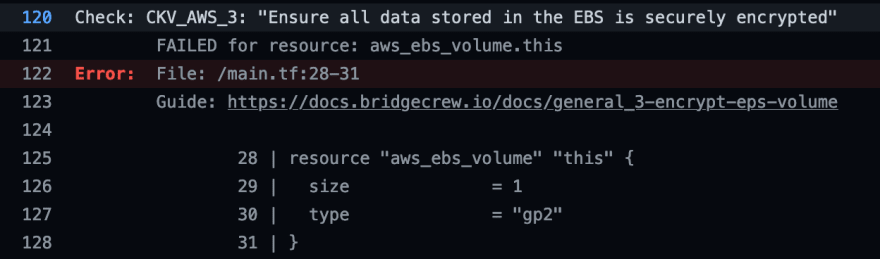

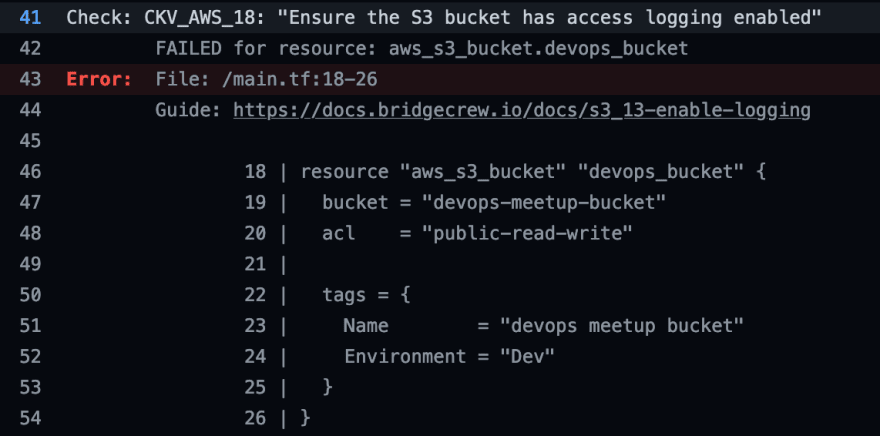

Just like TFLint, Checkov is also a static analysis tool that has more than 400 rules focused on security and compliance best practices for AWS, Azure and Google Cloud.

It can report situations such as the following, where I created an ebs volume without having encryption enabled:

Or this one, where I created an S3 bucket without access logging, that could be handy for further analysis of suspicious assesses to the bucket:

What I enjoy most about this tool is that every reported issue includes a URL with the rationale, so you can learn why it is important to fix and how to do it. Kudos to Bridgecrew for the great work!

Infracost

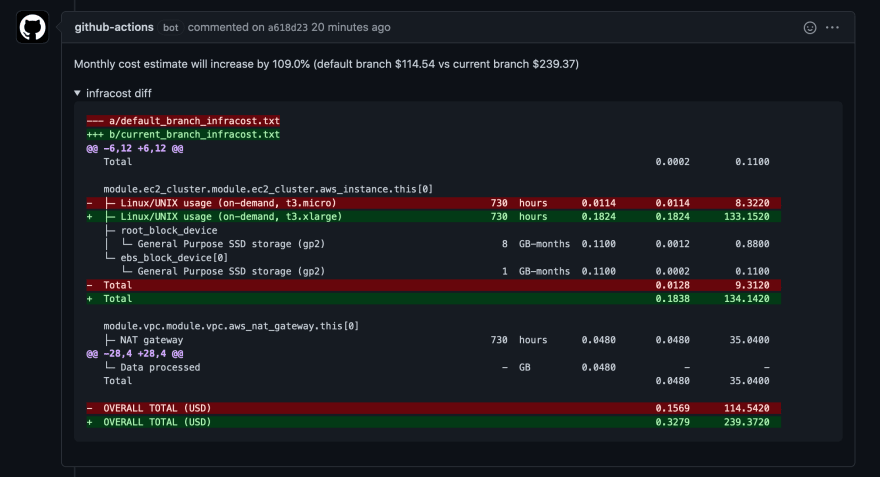

This tool really got me "wow, this is cool" . Basically, Infracost estimates the costs automatically in every pull request, based on the changes you did on your terraform code. How many times you didn't realize that the changes you were doing were going to cost you 2x more in the end of the month? Or you suspected that it would be expensive but you had to jump to AWS Pricing calculator and do the math?

In the following example, I just increased the instance type from t3.micro to t3.xlarge and automatically (powered by this infracost github action), I got a comment on my pull request telling me that the monthly bill would increase from ~114$ to ~239$:
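For hourly-billed resources such as EC2 instances, the core of such an estimate is simple arithmetic. Infracost converts hourly prices to monthly ones using 730 hours per month. The Go sketch below shows only that instance-hours part. Real estimates also include storage and usage-based costs. The prices in main are placeholders, not actual AWS rates.

```go
package main

import "fmt"

// hoursPerMonth is the average month length used to turn hourly prices into
// monthly ones (365 days * 24 hours / 12 months).
const hoursPerMonth = 730.0

// monthlyCost converts an hourly on-demand price into a monthly estimate.
func monthlyCost(hourlyUSD float64) float64 {
	return hourlyUSD * hoursPerMonth
}

// costDiff returns the monthly difference and the ratio between two hourly prices.
func costDiff(oldHourly, newHourly float64) (diff, ratio float64) {
	oldMonthly, newMonthly := monthlyCost(oldHourly), monthlyCost(newHourly)
	return newMonthly - oldMonthly, newMonthly / oldMonthly
}

func main() {
	// Placeholder prices, NOT real AWS rates: look up current on-demand
	// prices for your region before relying on numbers like these.
	oldPrice, newPrice := 0.02, 0.18
	diff, ratio := costDiff(oldPrice, newPrice)
	fmt.Printf("+$%.2f/month (%.1fx)\n", diff, ratio)
}
```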

That's it!

If you're interested and want to quickly try these tools, I set up a GitHub Actions workflow with all of them in this repository. Feel free to use it!

bmbferreira / awesome-terraform-pipeline

Sample terraform repo with multiple resources and a workflow that uses tools to automate cost management, security checks, best practices, tests, documentation and more!

I hope you find at least some of these tools useful. Please tell me in the comments which other tools you use day to day to improve your work with terraform!

Original Link: https://dev.to/bmbferreira/boost-your-terraform-automation-5el4
