Why Do Most Programming Languages Count From Zero?

This morning I was counting the number of days from an appointment on my calendar. "0, 1, 2, 3..." I muttered under my breath. Good thing I said it out loud, or I wouldn't have caught my mistake. That's what spending hours on array-based JavaScript challenges does for you!

I got a chuckle out of it, but it made me wonder why most programming languages count from zero in the first place. I'd known before and completely forgotten. So join me while I learn some computer science.

First of all, let's clarify: we're not actually counting from zero, we're indexing from zero. This comment by mowwwalker on Stack Exchange was enlightening to me:

"Woah, woah, no one counts from zero, we index from zero. No one says the "zeroth" element. We say the "first" element at index 0. Think of the index as how far an element is offset from the first position. Well, the first element is at the first position, so it's not offset at all, so its index is 0. The second element has one element before it, so it's offset 1 element and is at index 1." (mowwwalker, Apr 5 '13 at 14:32)
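To make the offset idea concrete, here is a small Python sketch. The language choice and the names `letters` and `element_at_offset` are mine, not from the comment. It starts at the first element and steps forward the given number of times, which is roughly what an index means under this view.

```python
# Illustrative only: `letters` and `element_at_offset` are made-up names.
letters = ["a", "b", "c", "d"]

def element_at_offset(items, offset):
    """Return the element that sits `offset` steps after the first one."""
    iterator = iter(items)
    current = next(iterator)      # start at the first element (offset 0)
    for _ in range(offset):       # take `offset` steps forward
        current = next(iterator)
    return current

first = element_at_offset(letters, 0)   # no steps taken: the first element
second = element_at_offset(letters, 1)  # one step past the first element
```

Under this reading, `letters[1]` is simply "one step past the first element", which is why the second element lives at index 1.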

I found this comment helpful in adjusting my perspective, but it doesn't explain why we index from zero. The answer lies in the approach taken by influential computer scientists like Dijkstra.

Edsger W. Dijkstra made monumental contributions to computer science, including algorithms, new concepts, methods, theories, and general areas of research. He was also a proponent of starting from zero.

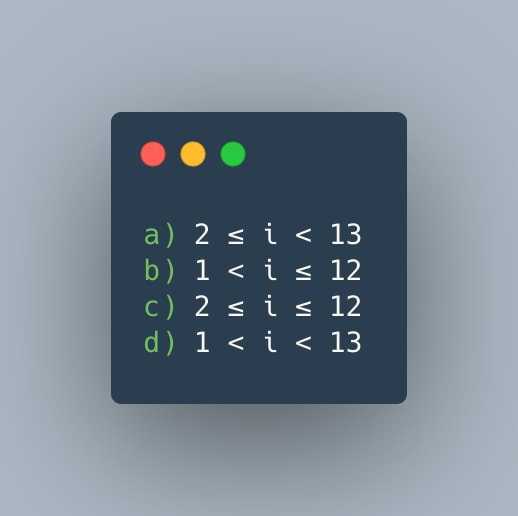

He wrote a short paper on the topic in August of 1982. He started by looking at four different ways to denote the natural numbers 2, 3, ..., 12:

- a) 2 ≤ i < 13
- b) 1 < i ≤ 12
- c) 2 ≤ i ≤ 12
- d) 1 < i < 13

In his paper, Dijkstra eliminates c) and d) since they lack the advantages of a) and b). That is, in a) and b) the length of the subsequence is equal to the difference between the bounds (in these cases, 11), and if you have two adjacent subsequences, the upper bound of one equals the lower bound of the other.

For example, in adjacent subsequences 1 < i ≤ 13 and 13 < i ≤ 23, the upper bound of the first is equal to the lower bound of the second (13).
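Python's built-in `range` happens to include its lower bound and exclude its upper bound, so both advantages can be checked directly. This is only a sketch in Python, chosen for illustration, and the variable names are mine.

```python
# Lower bound included, upper bound excluded: 2 <= i < 13.
lower, upper = 2, 13
numbers = list(range(lower, upper))      # 2, 3, ..., 12

# Advantage 1: the length equals the difference between the bounds.
length = len(numbers)

# Advantage 2: adjacent ranges share a bound and join with no gap or overlap.
left = list(range(2, 7))                 # 2 <= i < 7
right = list(range(7, 13))               # 7 <= i < 13
joined = left + right
```

Here `length` is `upper - lower`, and `joined` contains exactly the same numbers as `numbers`, because the upper bound of `left` (7) is the lower bound of `right`.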

OK, but which is better, a) or b)? Dijkstra points out that b) excludes the lower bound in its notation. That's inconvenient, since if you started a subsequence at 0, like 0, 1, 2, then you'd force the notation into using unnatural numbers, like so: -1 < i ...

As Dijkstra says, this is 'ugly', so we go with convention a).

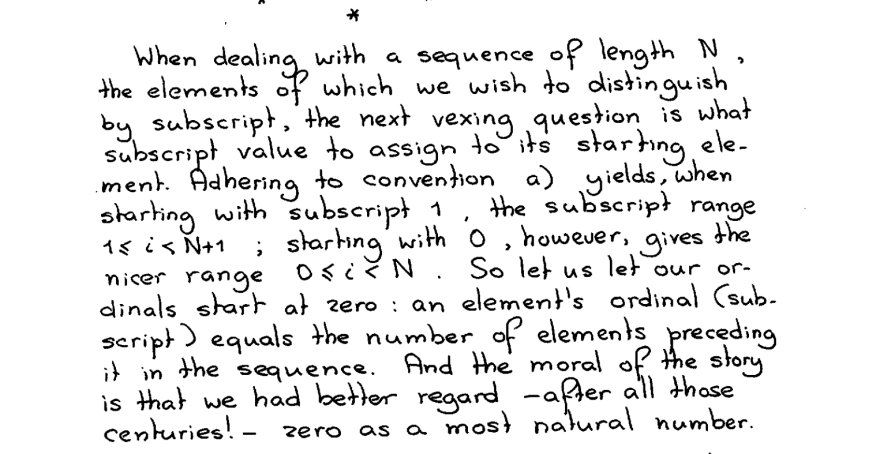

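If we're going with convention a), then how do we denote the elements "by subscript," or, as I understand mowwwalker's comment, by how far each element is offset from the first position? Dijkstra's answer is simple: for a sequence of N elements, starting at subscript 1 gives the range 1 ≤ i < N+1, while starting at 0 gives the nicer range 0 ≤ i < N.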
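The two candidate subscript ranges for a sequence of N elements can be compared in a short Python sketch. It assumes the half-open convention (lower bound included, upper bound excluded), and `items`, `zero_based`, and `one_based` are illustrative names, not from the paper.

```python
items = ["x", "y", "z"]
n = len(items)

# Starting at 0: the subscripts satisfy 0 <= i < N, so the upper bound is N itself.
zero_based = list(range(0, n))

# Starting at 1: the subscripts satisfy 1 <= i < N + 1, so the bound needs a "+ 1".
one_based = list(range(1, n + 1))

# With 0-based subscripts, each subscript is also the offset from the first element.
by_offset = [items[i] for i in zero_based]
```

The 0-based version needs no adjustment to the bound, and each subscript doubles as an offset, which ties back to mowwwalker's comment.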

The influence of Dijkstra and, I assume, other computer science giants explains why most programming languages start at zero.

My curiosity satisfied, I return to my coffee and ginger cookies on a fine Sunday morning.

Original Link: https://dev.to/cerchie/why-do-computers-count-from-zero-3mh6
