An Interest In:

Web News this Week

- April 3, 2024

- April 2, 2024

- April 1, 2024

- March 31, 2024

- March 30, 2024

- March 29, 2024

- March 28, 2024

Natural Language Processing Performance Metrics (Benchmarks)

Natural Language Processing is a very vast field of research and it consists of so many tasks like Machine translation, Question Answering, Text Summarization, Image captioning, Sentiment Analysis, etc. Researchers try to make different machine learning and deep learning models to solve these tasks. The most difficult job in NLP is to measure the performance of these models for different tasks. In other Machine learning tasks, it is easier to measure the performance because the cost function or evaluation criteria are well defined like we can calculate Mean absolute error(MAE) or Mean square error(MSE) for regression, we can calculate accuracy and F1-score for classification tasks. One more reason for this is that in other tasks the labels are well defined but in the case of NLP task, the ground truth or result can be varied. Example : If someone asks "What is your name?" a person can answer this in many different ways like

- My name is Aman

- Sir/Madam my name is Aman

- Aman

or a more elaborate answer like

- I am Aman Anand but you can call me Aman

Similarly, if we want the summary of a paragraph or essay different answers are possible for the same. Different people will write different summaries based on their understanding and linguistic skills(vocabulary and grammar) and in the same way, different models will write different summaries and all of them will be correct.

Also in the case of Natural Language Processing, it is possible that biases creep in models based on the dataset or evaluation criteria. Therefore it is necessary to make Standard Performance Benchmarks to evaluate the performance of models for NLP tasks. These Performance metrics gives us an indication about which model is better for which task.

Some of the famous NLP Performance Benchmarks are listed below:-

GLUE

General Language Understanding Evaluation

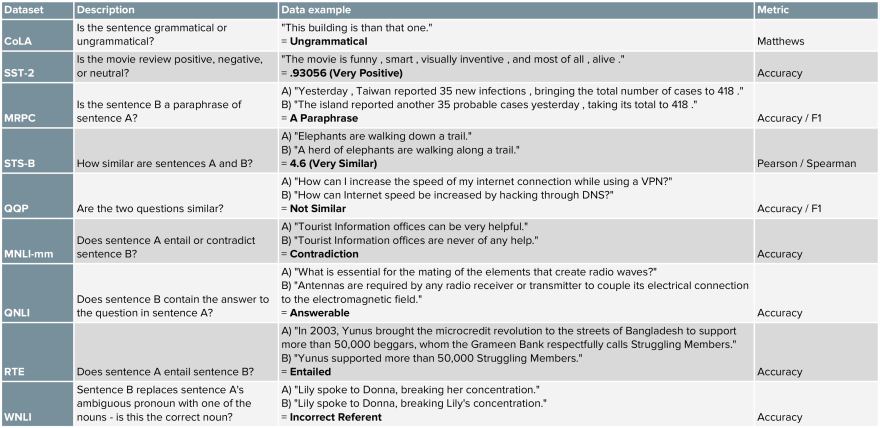

It is a benchmark based on different types of tasks rather than evaluating a single task. The three major categories of tasks are single-sentence tasks, similarity and paraphrase tasks, and inference tasks.

A detail of the different tasks and evaluation metrics is given below.

Out of the 9 tasks mentioned above CoLA and SST-2 are single sentence tasks, MRPC, QQP, STS-B are similarity and paraphrase tasks, and MNLI, QNLI, RTE and WNLI are inference tasks. The different state-of-the-art(SOTA) language models are evaluated on this benchmark.

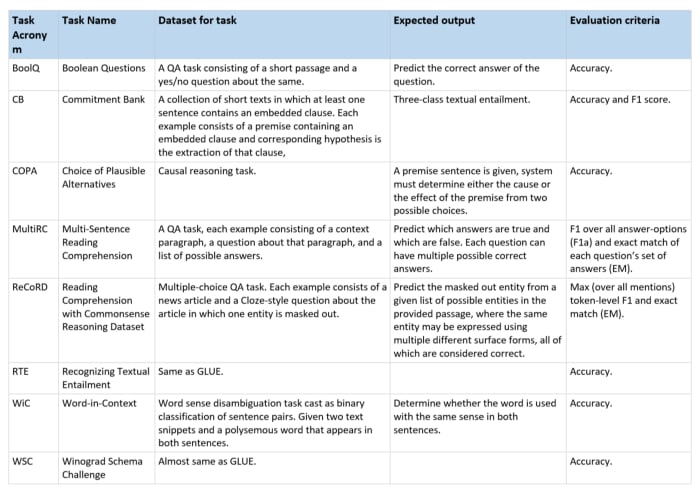

Super GLUE

Super General Language Understanding Evaluation

In this metric 2 of the tasks were kept from the GLUE metric namely RTE and WSC(which is almost same) and six new tasks were added to make a better performance metrics than GLUE. The details of the tasks is given below in the table.

SQuAD

Stanford Question Answering Dataset

It is a reading comprehension dataset with questions created through crowdsourcing. A passage is given and questions are asked based on the passage. The answer to these questions is a segment of text from the passage.

An example from the vast set of passages present in the dataset is

Oxygen is a chemical element with symbol O and atomic number 8. It is a member of the chalcogen group on the periodic table and is a highly reactive nonmetal and oxidizing agent that readily forms compounds (notably oxides) with most elements. By mass, oxygen is the third-most abundant element in the universe, after hydrogen and helium. At standard temperature and pressure, two atoms of the element bind to form dioxygen, a colorless and odorless diatomic gas with the formula O

- Diatomic oxygen gas constitutes 20.8% of the Earth's atmosphere. However, monitoring of atmospheric oxygen levels show a global downward trend, because of fossil-fuel burning. Oxygen is the most abundant element by mass in the Earth's crust as part of oxide compounds such as silicon dioxide, making up almost half of the crust's mass.

The questions asked based on this paragraph are:-

- The atomic number of the periodic table for oxygen?

- What is the second most abundant element?

- Which gas makes up 20.8% of the Earth's atmosphere?

- How many atoms combine to form dioxygen?

- Roughly, how much oxygen makes up the Earth's crust?

This metrics is for a single task unlike the other two metrics mentioned above.

BLEU

BiLingual Evaluation Understudy

It is a performance metric to measure the performance of machine translation models. It evaluates how good a model translates from one language to another. It assigns a score for machine translation based on the unigrams, bigrams or trigrams present in the generated output and comparing it with the ground truth. It has many problems but it was one of the first methods to assign a score to machine translation models. It always gives a score between 0 and 1.

Some of its shortcomings are:

- It doesnt consider meaning

- It doesnt directly consider sentence structure

- It doesnt handle morphologically rich languages

MS MACRO

MAchine Reading COmprehension Dataset

It is a large scale dataset focused on machine reading comprehension. It consists of the following tasks:

- Question answering generate a well-formed answer (if possible) based on the context passages that can be understood with the question and passage context.

- Passage ranking rank a set of retrieved passages given a question.

- Key phrase Extraction predict if a question is answerable given a set of context passages, and extract and synthesize the answer as a human would.

The evaluation of these tasks is done using BLEU and ROGUE(Recall-Oriented Understudy for Gisting Evaluation) metrics.

XTREME

Cross-lingual TRansfer Evaluation of Multilingual Encoders

It is a benchmark developed by Google for the evaluation of 40 languages on 9 different tasks. A representation of the tasks is given below.

The languages supported are

| Family | Languages |

|---|---|

| Afro-Asiatic | Arabic, Hebrew |

| Austro-Asiatic | Vietnamese |

| Austronesian | Indonesian, Javanese, Malay, Tagalog |

| Basque | Basque |

| Dravidian | Malayalam, Tamil, Telugu |

| Indo-European (Indo-Aryan) | Bengali, Marathi, Hindi, Urdu |

| Indo-European (Germanic) | Afrikaans, Dutch, English, German |

| Indo-European (Romance) | French, Italian, Portuguese, Spanish |

| Indo-European (Greek) | Greek |

| Indo-European (Iranian) | Persian |

| Japonic | Japanese |

| Kartvelian | Georgian |

| Koreanic | Korean |

| Kra-Dai | Thai |

| Niger-Congo | Swahili, Yoruba |

| Slavic | Bulgarian, Russian |

| Sino-Tibetan | Burmese, Mandarin |

| Turkic | Kazakh, Turkish |

| Uralic | Estonian, Finnish, Hungarian |

This evaluation dataset and metrics is the most recent one and is used to evaluate SOTA models for cross-lingual tasks and pre-trained models performance for zero-shot learning.

I hope that the readers got a good review of the different NLP benchmarks and how complex they are. Thanks for reading this post.

Original Link: https://dev.to/amananandrai/natural-language-processing-performance-metrics-benchmarks-4jel

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends

An online community for sharing and discovering great ideas, having debates, and making friendsMore About this Source Visit Dev To