Writing a neural network in JavaScript (2020) - Intro to neural networks

What is a neuron and a neural network?

In biology a neuron is a cell that communicates with other cells via specialized connections called synapses.

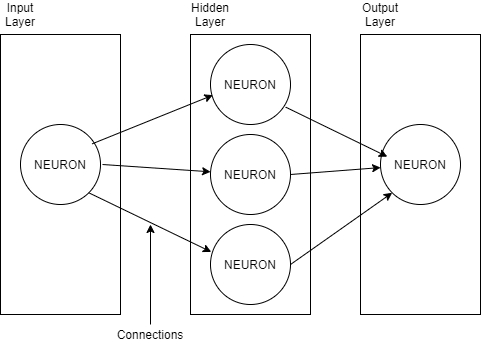

As we can see in the picture above, a neuron has a set of connections of different sizes and shapes.

In software, a neuron (artificial neuron) is a mathematical function conceived as a model of the biological neurons.

Artificial neurons have a set of connections (x1, x2, x3) with a different set of weights (w1, w2, w3).

The neuron itself generates an output (y) by applying a function (o) to the values from the input connections (x1, x2, x3) and their weights (w1, w2, w3).

Basically: a neuron is a function that gives a value depending on some input values.
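To make this concrete, here is a minimal sketch of a neuron as a plain function. The names neuron and step are made up for this illustration, and the step activation is only an assumption chosen to keep the arithmetic easy to follow; it is not the function used later in this article.

```javascript
// A hypothetical artificial neuron: it multiplies each input by its weight,
// adds everything up and passes the total through an activation function.
function neuron(inputs, weights, activation) {
  const weightedSum = inputs.reduce((sum, x, i) => sum + x * weights[i], 0)
  return activation(weightedSum)
}

// Illustrative activation: outputs 1 when the weighted sum reaches 1, otherwise 0
const step = value => (value >= 1 ? 1 : 0)

// x1 = 1, x2 = 0, x3 = 1 with weights w1 = 0.5, w2 = 0.9, w3 = 0.7
const y = neuron([1, 0, 1], [0.5, 0.9, 0.7], step)
console.log(y)
```

Changing the weights changes the output for the same inputs, and that is exactly what training a network will adjust.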

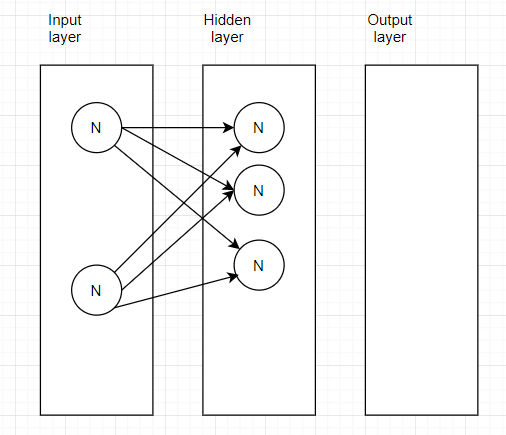

A software artificial neural network is a collection of neurons connected to each other that represents a mathematical function modeling something we want to accomplish.

Real life can be decomposed into math. Imagine you want to write code to identify cats in pictures. This would take you a lot of time and complicated math. Imagine decomposing the images into groups of pixels, guessing which characteristics represent a cat, and analyzing whether each set of pixels matches one of these characteristics. Sounds complicated.

This is where neural networks come in handy. Neural networks can be trained to learn how to solve a specific problem.

At the beginning neural networks are a little "random". They are generated with random values and trained against a set of data (dataset). They adjust themselves over and over and learn how to give the expected results. Once a network has been trained to do something it will be able to give predictions with new data that it has never seen before.

So, if you train a network with thousands of cat images it will be able to tell you when you are showing it a cat. But it will not be able to tell you when you are showing it a house. You have trained the network and now the network contains the function (or the code) that models cat characteristics, but nothing else.

One of the best resources for learning about neural networks is the video on the topic by 3Blue1Brown.

Writing our first neural network

What we are going to model is this:

We want to create the following entities:

- Neuron: has input connections, output connections and a bias

- Connection: has an "origin" neuron, a "destination" neuron, and a weight

- Layer: has neurons and an activation function

- Network: has layers

With this simple neural network in JavaScript we will be able to magically auto-program simple logic gates (AND, OR, XOR, etc). These logic gates could be easily programmed with a normal function, but we are going to show how a neural network can solve these problems automatically.
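For comparison, this is roughly what the "normal function" version of those gates could look like. It is only a sketch: the function names are made up for illustration, and the inputs are assumed to be the numbers 0 or 1.

```javascript
// Hand-written logic gates, to compare with what the network will learn.
// Inputs are assumed to be the numbers 0 or 1.
const and = (a, b) => (a === 1 && b === 1 ? 1 : 0)
const or = (a, b) => (a === 1 || b === 1 ? 1 : 0)
const xor = (a, b) => (a !== b ? 1 : 0)

// The four possible input rows of a two-input gate
const rows = [[0, 0], [0, 1], [1, 0], [1, 1]]
const xorTable = rows.map(([a, b]) => xor(a, b))
console.log(xorTable)
```

The network will never see these rules. It will only see input rows and expected outputs, and it has to find weights that reproduce the same table.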

With this knowledge you will be able to understand the basics of machine learning and scale it to other needs.

For more professional needs we advise you to use a solid framework like TensorFlow or PyTorch.

Let's code our first neural network in JavaScript from the ground up.

In this example we will use object-oriented programming with ES6 classes and unit tests.

You can find all the code for this tutorial in the following repo: https://github.com/rafinskipg/neural-network-js

Neuron.js

As you can see, most of the code of the neuron is boilerplate that you can omit (setters, print functions, etc). The only important things are:

- bias

- delta

- output

- error

- connections

```javascript
import uid from './uid'

class Neuron {
  constructor() {
    this.inputConnections = []
    this.outputConnections = []
    this.bias = 0
    // delta is used to store a percentage of change in the weight
    this.delta = 0
    this.output = 0
    this.error = 0
    this.id = uid()
  }

  toJSON() {
    return {
      id: this.id,
      delta: this.delta,
      output: this.output,
      error: this.error,
      bias: this.bias,
      inputConnections: this.inputConnections.map(i => i.toJSON()),
      outputConnections: this.outputConnections.map(i => i.toJSON())
    }
  }

  getRandomBias() {
    const min = -3
    const max = 3
    return Math.floor(Math.random() * (max - min)) + min
  }

  addInputConnection(connection) {
    this.inputConnections.push(connection)
  }

  addOutputConnection(connection) {
    this.outputConnections.push(connection)
  }

  setBias(val) {
    this.bias = val
  }

  setOutput(val) {
    this.output = val
  }

  setDelta(val) {
    this.delta = val
  }

  setError(val) {
    this.error = val
  }
}

export default Neuron
```

Connection

A connection links one neuron to another neuron and has a weight.

We will also store a change property, to know how much the weight should change between iterations in the backpropagation phase.

```javascript
class Connection {
  constructor(from, to) {
    this.from = from
    this.to = to
    this.weight = Math.random()
    this.change = 0
  }

  toJSON() {
    return {
      change: this.change,
      weight: this.weight,
      from: this.from.id,
      to: this.to.id
    }
  }

  setWeight(w) {
    this.weight = w
  }

  setChange(val) {
    this.change = val
  }
}

export default Connection
```

Layer

A layer is just a collection of neurons.

When we call new Layer(5) we are creating a layer with a group of 5 neurons.

```javascript
import Neuron from './neuron'

class Layer {
  constructor(numberOfNeurons) {
    const neurons = []
    for (let j = 0; j < numberOfNeurons; j++) {
      const neuron = new Neuron()
      neurons.push(neuron)
    }
    this.neurons = neurons
  }

  toJSON() {
    return this.neurons.map(n => {
      return n.toJSON()
    })
  }
}

export default Layer
```

Simple for now. Right?

Let's do a quick recap: we just have 3 different concepts or classes for now, that we can use in a simple way like this:

```javascript
import Layer from './layer'
import Connection from './connection'

// Create a layer of 5 neurons
const myLayer = new Layer(5)

// Create a connection
const connection = new Connection(myLayer.neurons[0], myLayer.neurons[1])

// Store references to the connection in the neurons
myLayer.neurons[0].addOutputConnection(connection)
myLayer.neurons[1].addInputConnection(connection)
```

Basically, to create a network, we just need different layers, with different neurons each, and different connections with weights.

You can use another abstraction to model this; you don't have to follow the one I chose. For example, you could make just a matrix of objects and store all the data without using classes. I used OOP because it's easier for me to learn new concepts when I can model them easily.
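As a rough idea of what that class-free alternative could look like, here is a sketch that stores each layer as a plain object with arrays of numbers. The object shape, the forward function and the weight values are all assumptions made up for this illustration; they are not taken from the repository. It also uses the sigmoid function, which is explained later in this article.

```javascript
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z))
}

// Hypothetical class-free network: one plain object per non-input layer.
// weights[j][i] is the weight going from neuron i of the previous layer
// to neuron j of this layer. The values are arbitrary, not trained.
const network = [
  { biases: [0, 0], weights: [[0.5, -0.5], [0.25, 0.75]] }, // hidden layer: 2 neurons, 2 inputs each
  { biases: [0], weights: [[1, -1]] } // output layer: 1 neuron, 2 inputs
]

// Feeds the inputs through every layer and returns the outputs of the last one
function forward(layers, inputs) {
  return layers.reduce((previousOutputs, layer) => {
    return layer.weights.map((neuronWeights, j) => {
      const sum = neuronWeights.reduce(
        (acc, weight, i) => acc + weight * previousOutputs[i],
        layer.biases[j]
      )
      return sigmoid(sum)
    })
  }, inputs)
}

const result = forward(network, [0, 0])
console.log(result)
```

This stores the same information as the classes (weights and biases), just in a more compact form. The class version is more verbose, but each concept has a name you can point at.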

The network

There are some things we should understand before we create our network (group of layers).

1. We need to create various layers

2. The input layer neurons have no input connections, only output connections

3. The output layer neurons have no output connections, only input connections

4. All neurons are created with a random bias value, except the ones in the input layer, which hold the input values. Input values are the data we are going to use to get a prediction or a result. For example, for a 28x28 image it would be 784 pixel values. For a logic gate it will be 2 values (each 0 or 1).

5. In each training step we will provide some values to the input layer (the training data), then calculate the output and apply backpropagation to recalculate the weights of the connections.

6. Backpropagation is a way of adapting the weights of the connections based on the difference between the desired output and the actual output. After executing it many times, the network gives something closer to the expected result. This is training the network.
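Steps 5 and 6 can be sketched in a very reduced form with a single neuron learning an AND gate. This is only an illustration under some assumptions: there are no layers or Connection objects, the starting weights are fixed instead of random so the result is repeatable, and the names predict and trainStep are made up. The update rule is a simplified version of the one the full network uses later.

```javascript
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z))
}

// Training data for an AND gate
const trainingData = [
  { input: [0, 0], output: 0 },
  { input: [0, 1], output: 0 },
  { input: [1, 0], output: 0 },
  { input: [1, 1], output: 1 }
]

// Fixed starting values for repeatability (the real network starts random)
const neuron = { weights: [0.5, 0.5], bias: 0 }
const learningRate = 0.3

// Forward step: weighted sum of the inputs plus the bias, then sigmoid
function predict(n, input) {
  const sum = input.reduce((acc, x, i) => acc + x * n.weights[i], n.bias)
  return sigmoid(sum)
}

// One training step: compare with the desired output and nudge weights and bias
function trainStep(n, input, target) {
  const output = predict(n, input)
  const error = target - output
  const delta = error * output * (1 - output)
  n.weights = n.weights.map((w, i) => w + learningRate * delta * input[i])
  n.bias += learningRate * delta
}

// Repeat the training step many times over the whole dataset
for (let epoch = 0; epoch < 5000; epoch++) {
  for (const item of trainingData) {
    trainStep(neuron, item.input, item.output)
  }
}

const predictions = trainingData.map(item => Math.round(predict(neuron, item.input)))
console.log(predictions)
```

A single neuron is enough for AND because one straight line can separate its outputs. XOR is not like that, which is why the test later in this article uses hidden layers.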

Before we see all the network code we need to understand how a neuron calculates its own value in each iteration.

```javascript
const bias = this.layers[layer].neurons[neuron].bias

// For each neuron in this layer we compute its output value,
// the output value is obtained from all the connections coming to this neuron
const connectionsValue = this.layers[layer].neurons[neuron].inputConnections.reduce((prev, conn) => {
  const val = conn.weight * conn.from.output
  return prev + val
}, 0)

this.layers[layer].neurons[neuron].setOutput(sigmoid(bias + connectionsValue))
```

We calculate the output of a neuron by adding up the products of weight and output over all of its input connections. That is, for each connection coming into this neuron, we multiply the connection's weight by the output of the neuron it comes from and add it to the total. Once we have the sum of all products, we add the bias and apply a sigmoid function to normalize the output.

A sigmoid function is a mathematical function having a characteristic "S"-shaped curve or sigmoid curve.

In neural networks, the sigmoid function is used to normalize the values of a neuron between 0 and 1.

Neural networks can use different kinds of functions for this; they are called activation functions. Some of the most popular activation functions are Sigmoid, Tanh, and ReLU.

You can read a more in-depth explanation of activation functions here.
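To get a feeling for how these three activation functions differ, here is a small sketch that evaluates them on the same inputs. The relu helper is written here for illustration, and Math.tanh is JavaScript's built-in hyperbolic tangent. Only sigmoid is used in the rest of this article.

```javascript
// Sigmoid: squashes any number into the range (0, 1)
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z))
}

// Tanh: squashes any number into the range (-1, 1)
function tanh(z) {
  return Math.tanh(z)
}

// ReLU: keeps positive numbers as they are and turns negative numbers into 0
function relu(z) {
  return Math.max(0, z)
}

// Evaluate the three functions on a few sample inputs
const samples = [-2, 0, 3]
const table = samples.map(z => ({
  z,
  sigmoid: sigmoid(z),
  tanh: tanh(z),
  relu: relu(z)
}))
console.log(table)
```

The choice matters because the backpropagation formula depends on the slope of the activation function, so swapping sigmoid for another function also means changing how the deltas are calculated.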

For now we will just use the sigmoid function written in JavaScript:

```javascript
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z));
}

export default sigmoid
```

Let's take a look now at the full network code.

There are many things going on in the network:

- The network connects all neurons from one layer to the next one

- When the network is training it runs the runInputSigmoid method, which uses the sigmoid function as an activation function

- Backpropagation is done by calculating the change needed in the weights (delta) and then applying it. The code for calculating weights and deltas is complex.

- The run method just calls runInputSigmoid to give the results

```javascript
import sigmoid from './sigmoid'
import Connection from './connection'
import Layer from './layer'

class Network {
  constructor(numberOfLayers) {
    // Create a network with a number of layers.
    // numberOfLayers is an array with the number of neurons of each layer.
    // For layers other than the input layer we add a random bias to each neuron
    this.layers = numberOfLayers.map((length, index) => {
      const layer = new Layer(length)
      if (index !== 0) {
        layer.neurons.forEach(neuron => {
          neuron.setBias(neuron.getRandomBias())
        })
      }
      return layer
    })
    this.learningRate = 0.3 // multiplies the delta and the input when computing a weight change
    this.momentum = 0.1 // multiplies the previous "change", which is then added to the new change
    this.iterations = 0 // number of iterations in the training process
    this.connectLayers()
  }

  toJSON() {
    return {
      learningRate: this.learningRate,
      iterations: this.iterations,
      layers: this.layers.map(l => l.toJSON())
    }
  }

  setLearningRate(value) {
    this.learningRate = value
  }

  setIterations(val) {
    this.iterations = val
  }

  connectLayers() {
    // Connects current layer with the previous one. This is for a fully connected network
    // (each neuron connects with all the neurons from the previous layer)
    for (let layer = 1; layer < this.layers.length; layer++) {
      const thisLayer = this.layers[layer]
      const prevLayer = this.layers[layer - 1]
      for (let neuron = 0; neuron < prevLayer.neurons.length; neuron++) {
        for (let neuronInThisLayer = 0; neuronInThisLayer < thisLayer.neurons.length; neuronInThisLayer++) {
          const connection = new Connection(prevLayer.neurons[neuron], thisLayer.neurons[neuronInThisLayer])
          prevLayer.neurons[neuron].addOutputConnection(connection)
          thisLayer.neurons[neuronInThisLayer].addInputConnection(connection)
        }
      }
    }
  }

  // When training we will run this set of functions each time
  train(input, output) {
    // Set the input data on the first layer
    this.activate(input)

    // Forward propagate
    this.runInputSigmoid()

    // backpropagate
    this.calculateDeltasSigmoid(output)
    this.adjustWeights()

    // You can use as a debugger
    // console.log(this.layers.map(l => l.toJSON()))

    this.setIterations(this.iterations + 1)
  }

  activate(values) {
    this.layers[0].neurons.forEach((n, i) => {
      n.setOutput(values[i])
    })
  }

  run() {
    // For now we only use sigmoid function
    return this.runInputSigmoid()
  }

  runInputSigmoid() {
    for (let layer = 1; layer < this.layers.length; layer++) {
      for (let neuron = 0; neuron < this.layers[layer].neurons.length; neuron++) {
        const bias = this.layers[layer].neurons[neuron].bias
        // For each neuron in this layer we compute its output value,
        // the output value is obtained from all the connections coming to this neuron
        const connectionsValue = this.layers[layer].neurons[neuron].inputConnections.reduce((prev, conn) => {
          const val = conn.weight * conn.from.output
          return prev + val
        }, 0)

        this.layers[layer].neurons[neuron].setOutput(sigmoid(bias + connectionsValue))
      }
    }

    return this.layers[this.layers.length - 1].neurons.map(n => n.output)
  }

  calculateDeltasSigmoid(target) {
    // calculates the needed change of weights for backpropagation, based on the error rate
    // It starts in the output layer and goes back to the first layer
    for (let layer = this.layers.length - 1; layer >= 0; layer--) {
      const currentLayer = this.layers[layer]

      for (let neuron = 0; neuron < currentLayer.neurons.length; neuron++) {
        const currentNeuron = currentLayer.neurons[neuron]
        let output = currentNeuron.output

        let error = 0
        if (layer === this.layers.length - 1) {
          // Is output layer,
          // the error is the difference between the expected result and the current output of this neuron
          error = target[neuron] - output
          // console.log('calculate delta, error, last layer', error)
        } else {
          // Other than output layer
          // the error is the sum of all the products of the output connection neurons * the connections weight
          for (let k = 0; k < currentNeuron.outputConnections.length; k++) {
            const currentConnection = currentNeuron.outputConnections[k]
            error += currentConnection.to.delta * currentConnection.weight
            // console.log('calculate delta, error, inner layer', error)
          }
        }
        currentNeuron.setError(error)
        currentNeuron.setDelta(error * output * (1 - output))
      }
    }
  }

  adjustWeights() {
    // we adjust the weights layer by layer, from the first layer after the input up to the output layer
    for (let layer = 1; layer <= this.layers.length - 1; layer++) {
      const prevLayer = this.layers[layer - 1]
      const currentLayer = this.layers[layer]

      for (let neuron = 0; neuron < currentLayer.neurons.length; neuron++) {
        const currentNeuron = currentLayer.neurons[neuron]
        let delta = currentNeuron.delta

        for (let i = 0; i < currentNeuron.inputConnections.length; i++) {
          const currentConnection = currentNeuron.inputConnections[i]
          let change = currentConnection.change

          // The change on the weight of this connection is:
          // the learningRate * the delta of the neuron * the output of the input neuron + (the connection change * momentum)
          change = (this.learningRate * delta * currentConnection.from.output)
            + (this.momentum * change)

          currentConnection.setChange(change)
          currentConnection.setWeight(currentConnection.weight + change)
        }

        currentNeuron.setBias(currentNeuron.bias + (this.learningRate * delta))
      }
    }
  }
}

export default Network
```

I am not going to explain why the deltas and weights are calculated with that formula. Backpropagation is a complex topic that requires some investigation on your side. Let me give you some resources for your investigation:

- Backpropagation: https://en.wikipedia.org/wiki/Backpropagation

- What is backpropagation really doing?

- Multi-Layer Neural Networks with Sigmoid Function - Deep Learning for Rookies

With the code for the network you will be able to run backpropagation to train it. But it is important that you take your time to think it through.
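Without going into the full derivation, two small pieces of those formulas can be checked by hand. The sketch below first compares the output * (1 - output) factor from setDelta with a numerical estimate of the sigmoid's slope, and then evaluates the weight change formula from adjustWeights once. The nextChange helper and all the numbers are made up for illustration.

```javascript
function sigmoid(z) {
  return 1 / (1 + Math.exp(-z))
}

// 1) The factor output * (1 - output) is the slope of the sigmoid at that point.
//    We compare it with a numerical estimate of the slope (central difference).
const z = 0.7
const output = sigmoid(z)
const slopeFromOutput = output * (1 - output)
const h = 1e-5
const numericalSlope = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)
console.log(slopeFromOutput, numericalSlope)

// 2) The weight change of one connection, written like in adjustWeights:
//    learningRate * delta * output of the origin neuron + momentum * previous change
function nextChange(learningRate, momentum, delta, fromOutput, previousChange) {
  return learningRate * delta * fromOutput + momentum * previousChange
}

// Made-up values: delta 0.1, origin neuron output 1, previous change 0.05
const change = nextChange(0.3, 0.1, 0.1, 1, 0.05)
console.log(change)
```

The momentum term reuses part of the previous change, so a weight that keeps being pushed in the same direction moves a bit faster on each iteration.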

Writing tests to train our network

In the example repository you will find different tests that allow you to train the network in different ways.

Here is our test for an XOR gate. It will serve as a full example of how to use this network for different purposes.

You can try to train the network for different things and see what happens.

```javascript
import Network from '../network'

// Training data for a xor gate
const trainingData = [{
  input: [0, 0],
  output: [0]
}, {
  input: [0, 1],
  output: [1]
}, {
  input: [1, 0],
  output: [1]
}, {
  input: [1, 1],
  output: [0]
}]

describe('XOR Gate', () => {
  let network

  beforeAll(done => {
    // Create the network
    network = new Network([2, 10, 10, 1])

    // Set a learning rate
    const learningRate = 0.3
    network.setLearningRate(learningRate)

    // Train the network
    for (let i = 0; i < 20000; i++) {
      const trainingItem = trainingData[Math.floor((Math.random() * trainingData.length))]
      // Randomly train
      network.train(trainingItem.input, trainingItem.output);
    }

    done()
  })

  it('should return 0 for a [0,0] input', () => {
    network.activate([0, 0])
    const result = network.runInputSigmoid()
    expect(Math.round(result[0])).toEqual(0)
  })

  it('should return 1 for a [0,1] input', () => {
    network.activate([0, 1])
    const result = network.runInputSigmoid()
    expect(Math.round(result[0])).toEqual(1)
  })

  it('should return 1 for a [1,0] input', () => {
    network.activate([1, 0])
    const result = network.runInputSigmoid()
    expect(Math.round(result[0])).toEqual(1)
  })

  it('should return 0 for a [1,1] input', () => {
    network.activate([1, 1])
    const result = network.runInputSigmoid()
    expect(Math.round(result[0])).toEqual(0)
  })
})
```

If you want to do things that require GPU usage for training (more computational strength) or more complex layers, you might need to use a more advanced library, like the TensorFlow or PyTorch frameworks mentioned earlier.

But remember, you just coded a neural network, now you know how to dive into them!

Original Link: https://dev.to/rafinskipg/writing-a-neural-network-in-javascript-2020-intro-to-neural-networks-2c4n

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
