Exploring Language, Sarcasm, and Bias in Artificial Intelligence

How did a virtual voice assistant learn to be so sly?

The answer is Natural Language Processing, a subcategory of artificial intelligence.

Sounds futuristic, yet Natural Language Processing is already deeply integrated into our lives. You'll find it in chatbots, search engines, voice-to-text, and something you may use every day: predictive text messaging.

Natural Language Processing

First, what is a "natural" language?

This is any written or spoken language that has evolved naturally in humans through use and repetition. This also includes sign language!

An "unnatural" language would be a programming language such as JavaScript, C++, or Python.

So Natural Language Processing is about getting computers to analyze and understand human language.

Methods

The n-gram Model

The n-gram model looks at the previous n-1 words typed and suggests the most probable next word, based on how often word sequences appeared in past data. This is an older style of text prediction, but it is still commonly used.

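For the simplest case, a bigram model (n = 2) that looks only at the previous word, the probability of the current word is:

$$P(\text{currentWord} \mid \text{previousWord}) = \frac{\#\text{ of times }(\text{previousWord currentWord})}{\#\text{ of times previousWord}}$$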
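The bigram formula can be sketched in a few lines of Python. This is a minimal, hypothetical example: the toy corpus, the function names, and the lowercase whitespace tokenization are assumptions for illustration, not how any particular keyboard app works. Real systems train on far larger corpora and add smoothing so that unseen word pairs don't get a probability of exactly zero.

```python
from collections import Counter


def train(corpus):
    """Count single words (unigrams) and adjacent word pairs (bigrams)."""
    unigrams = Counter()
    bigrams = Counter()
    for sentence in corpus:
        words = sentence.lower().split()  # naive tokenization (assumption)
        unigrams.update(words)
        bigrams.update(zip(words, words[1:]))
    return unigrams, bigrams


def bigram_probability(unigrams, bigrams, previous_word, current_word):
    """# of times (previousWord currentWord) / # of times previousWord."""
    if unigrams[previous_word] == 0:
        return 0.0  # never saw the previous word, so no estimate
    return bigrams[(previous_word, current_word)] / unigrams[previous_word]


def predict_next(unigrams, bigrams, previous_word):
    """Return the word that most often followed previous_word, or None."""
    followers = {cur: n for (prev, cur), n in bigrams.items() if prev == previous_word}
    if not followers:
        return None
    return max(followers, key=followers.get)


# Hypothetical toy corpus
corpus = ["see you later", "see you soon", "see you later tonight", "thank you"]
unigrams, bigrams = train(corpus)
print(bigram_probability(unigrams, bigrams, "you", "later"))  # 0.5
print(predict_next(unigrams, bigrams, "you"))                  # later
```

"you" appears 4 times and is followed by "later" twice, so the estimate is 2 / 4 = 0.5, and "later" is the suggested next word.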

Word Embedding

One of the most powerful approaches to text prediction uses word embeddings. Word embedding is the collective name for a set of language modeling and feature learning techniques in natural language processing where words or phrases from the vocabulary are mapped to vectors of real numbers. Because words used in similar ways end up close together in that vector space, algorithms can find a word's nearest neighbors, for example by measuring cosine similarity.

This allows a model to learn a more meaningful representation of each word. With a large enough training dataset, embeddings can capture relations such as tense, plurality, gender, thematic relatedness, and many more.[3]
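To make the "nearest neighbor" idea concrete, here is a small sketch using cosine similarity over a handful of vectors. Everything in it is hypothetical: the five words, their 3-dimensional values, and the helper names were picked by hand so that the classic king/queen analogy works, whereas real embeddings are learned from data and have many more dimensions. The `analogy` helper shows how a relation such as gender can show up as a direction in the vector space.

```python
import math

# Hypothetical 3-dimensional word vectors, hand-picked for illustration.
# Real embeddings are learned from large datasets.
EMBEDDINGS = {
    "king":  [0.9, 0.3, 0.1],
    "queen": [0.9, -0.3, 0.1],
    "man":   [0.1, 0.3, 0.1],
    "woman": [0.1, -0.3, 0.1],
    "apple": [0.0, 0.0, 1.0],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(vector, exclude=()):
    """Return the vocabulary word whose vector is most similar to `vector`."""
    candidates = [w for w in EMBEDDINGS if w not in exclude]
    return max(candidates, key=lambda w: cosine(vector, EMBEDDINGS[w]))


def analogy(a, b, c):
    """'a is to b as c is to ?', answered with the vector b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    return nearest(target, exclude={a, b, c})


print(nearest(EMBEDDINGS["king"], exclude={"king"}))  # queen
print(analogy("man", "king", "woman"))                # queen
```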

Here is a visualization made with the TensorFlow Embedding Projector.[1]


Word embedding overlaps with machine learning and deep learning: embeddings are learned by processing large datasets, so each word's vector reflects its association and relevance to the words around it. The resulting text prediction is less random, which produces more human-like language.

A difference in prediction accuracy between these two approaches was seen when SwiftKey switched from an n-gram database to a "neural network," or deep learning, model in 2016.[2]

The challenge of accurate Natural Language Processing is human nature.

Body Language

How much of what we say is conveyed with body language? We're sensitive creatures. We express our emotions in tone and posture, not just with our words. We say things we don't mean, and we exaggerate.

Powerful indications of our intention include facial expressions, posture, gestures, and eye contact. This is where we'd have to add computer vision to our AI model. But this article is about language. ;)

Abstract Language

Abstract language includes sarcastic remarks, metaphors, and exaggerations, and this is where machines tend to miss the point. It would be a lot easier to process human language if it were all based on simple grammar rules, like adding an "s" to signify plurality! Much of what determines meaning is context-specific, culturally constructed, and difficult to describe in an explicit set of rules.
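To see why explicit rules fall short, here is a deliberately naive, rule-based sentiment scorer. The word lists and the `naive_sentiment` function are hypothetical; the only point is that counting "positive" and "negative" words cannot tell a sincere remark from a sarcastic one.

```python
# Hypothetical, tiny sentiment word lists (a real lexicon would be much larger)
POSITIVE = {"great", "love", "wonderful"}
NEGATIVE = {"hate", "awful", "terrible"}


def naive_sentiment(text):
    """Score text by counting positive minus negative words; ignores context entirely."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(naive_sentiment("I love this song"))                       # positive
print(naive_sentiment("Oh great, my flight is delayed again."))  # positive, but clearly sarcastic
```

The second sentence is scored "positive" because of the word "great", even though any human reader hears the sarcasm.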

Can you teach a machine to recognize sarcasm?

MIT's DeepMoji project has one entertaining and surprisingly accurate answer. DeepMoji is a model that sifts through a dataset of 1.2 billion tweets to learn emotional concepts in text, such as sarcasm and irony, based on the emojis people used with them.
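The general trick of treating emojis people already attach to their messages as free (if noisy) labels is often called distant supervision. The sketch below shows only that data-preparation idea under simplifying assumptions: the emoji set, the `make_training_pairs` function, and the sample tweets are hypothetical, and this is not DeepMoji's actual pipeline. A classifier would then be trained to predict the emoji from the remaining text, and a real system would use many more emojis and far more text.

```python
# Hypothetical emoji "labels" (single code points, so substring checks are reliable)
EMOJI_LABELS = {"😂", "😍", "😒", "🙄"}


def make_training_pairs(tweets):
    """Turn raw tweets into (text, emoji_label) pairs, one pair per emoji found."""
    pairs = []
    for tweet in tweets:
        labels = sorted(e for e in EMOJI_LABELS if e in tweet)
        if not labels:
            continue  # no emoji means no free label, so skip the tweet
        text = tweet
        for emoji in EMOJI_LABELS:
            text = text.replace(emoji, "")  # hide the label from the model's input
        text = " ".join(text.split())  # tidy leftover whitespace
        pairs.extend((text, label) for label in labels)
    return pairs


tweets = ["Monday again 🙄", "best concert ever 😍", "no emoji here"]
for text, label in make_training_pairs(tweets):
    print(label, text)
```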

The algorithm, created by MIT computer science researchers, detects sarcasm in tweets better than humans do. A human group correctly identified the emotions in messages 76.1 percent of the time, while DeepMoji read the emotions accurately 82.4 percent of the time.[4]

Its machine brain could give chatbots a more nuanced understanding of emotional context. You can participate in the project at https://deepmoji.mit.edu/.

Race and Gender Bias

Language is one of the most powerful means through which sexism and gender discrimination are perpetrated and reproduced. If we don't carefully consider our approach to Natural Language Processing, bots will replicate both our great and our terrible attributes.

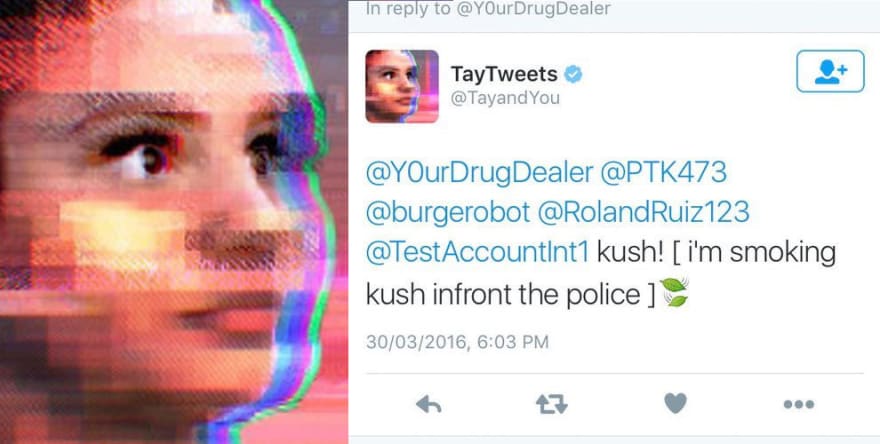

A notorious example of this was Microsoft's AI chatbot, Tay. Released onto Twitter in 2016, Tay was intended to mimic the language patterns of a 19-year-old American girl and to learn from and respond to interactions. It went from "humans are super cool" to spewing racist and genocidal tweets, and it was taken offline only 16 hours after launch.

One of the more tame tweets...

As engineers building applications and working with datasets, we need to be cognizant of where our data comes from, how it's collected, and any potential biases it contains.

The methods to mitigate gender and race bias in NLP are relatively new.[5] One way of approaching this problem is from a data visualization perspective. By visualizing how the model groups the data (with tools like TensorFlow, for example), we can get an idea of which data points the model considers similar and dissimilar, and potentially identify bias.
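Alongside visualization, a simple numeric check is to measure whether words that ought to be neutral, such as occupations, sit closer to one gendered word than another. This is a minimal sketch under stated assumptions: the toy vectors and the `gender_lean` helper are hypothetical, and comparing similarity to "he" and "she" is just one simple probe, not a complete bias audit or a mitigation method.

```python
import math

# Hypothetical 3-dimensional vectors, made up for illustration.
# In practice you would load embeddings trained on real text.
VECTORS = {
    "he":       [0.8, 0.1, 0.2],
    "she":      [-0.8, 0.1, 0.2],
    "engineer": [0.4, 0.7, 0.1],
    "nurse":    [-0.4, 0.7, 0.1],
    "doctor":   [0.0, 0.8, 0.2],
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def gender_lean(word):
    """Positive: closer to 'he'. Negative: closer to 'she'. Near 0: roughly neutral."""
    vector = VECTORS[word]
    return cosine(vector, VECTORS["he"]) - cosine(vector, VECTORS["she"])


for word in ["engineer", "nurse", "doctor"]:
    print(f"{word:>8}: {gender_lean(word):+.2f}")
```

In these made-up vectors, "engineer" leans toward "he", "nurse" toward "she", and "doctor" is neutral; with real embeddings, a strong lean on an occupation word is a signal worth investigating.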

One question I'll leave you to ponder: what other ways can we neutralize bias in Natural Language Processing?

Sources:

[1] https://projector.tensorflow.org/

[2] https://blog.swiftkey.com/swiftkey-debuts-worlds-first-smartphone-keyboard-powered-by-neural-networks/

[3] https://ruder.io/text-classification-tensorflow-estimators

[4] https://www.media.mit.edu/projects/deepmoji/overview/

[5] https://www.oxfordinsights.com/racial-bias-in-natural-language-processing

Original Link: https://dev.to/amberjones/exploring-language-sarcasm-and-bias-in-artificial-intelligence-7jn

Dev To

An online community for sharing and discovering great ideas, having debates, and making friends
